How to Deliver Analytics to Enterprise Customers


Dec 29, 2025

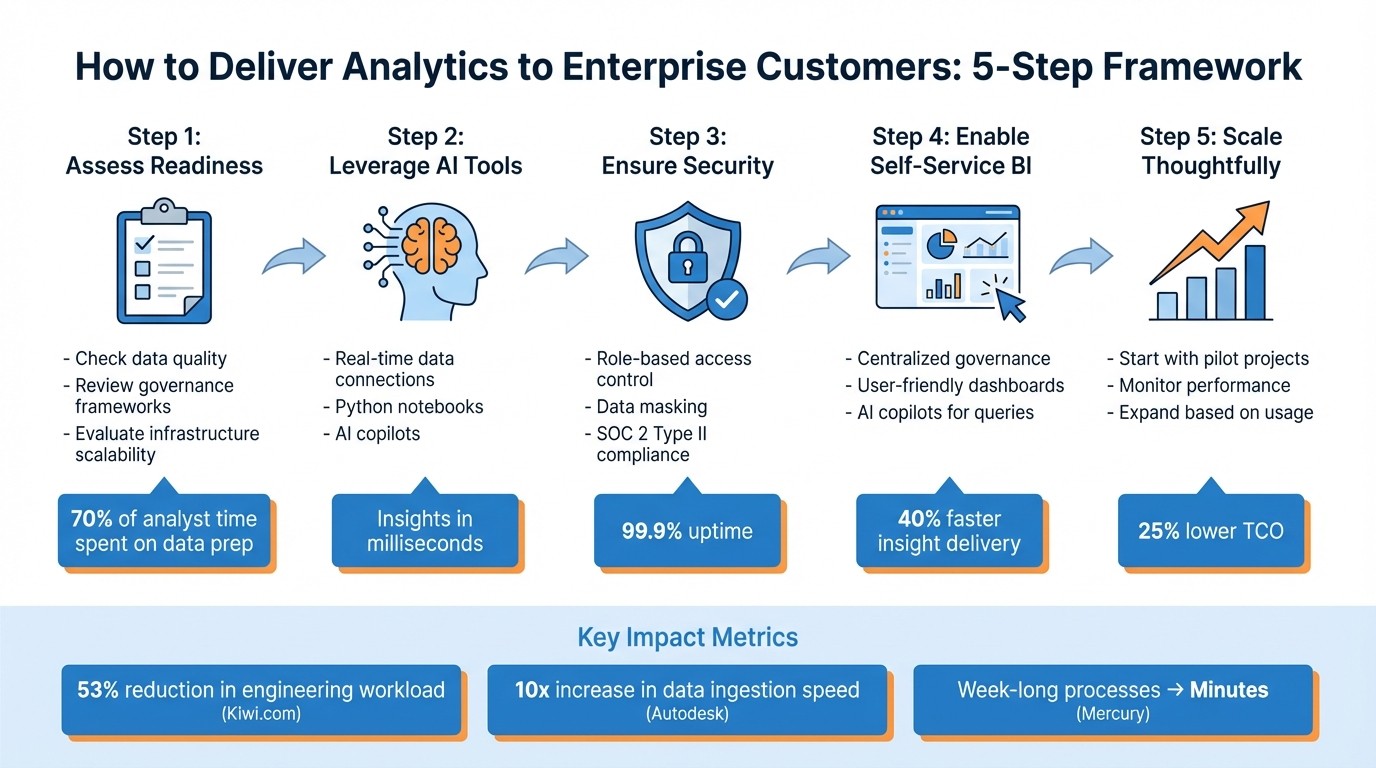

Guide to building scalable, secure AI analytics for enterprises: assess readiness, connect live data, enable self-service BI, and scale with governance.

Enterprise customers require analytics systems that handle large data volumes, ensure security, and provide fast, actionable insights. Traditional systems often fall short because of slow batch processing, poor governance, and inefficient data preparation, which can consume 70% of analysts' time, delay decisions, and hurt revenue. AI-driven platforms are changing this landscape, delivering insights in milliseconds and cutting total cost of ownership by a reported 25%.

Here’s how to successfully deliver analytics to enterprise customers:

Assess Readiness: Check data quality, governance frameworks, and infrastructure scalability to avoid implementation failures.

Leverage AI Tools: Use real-time data connections, Python notebooks, and AI copilots for faster, more accurate insights.

Ensure Security: Implement role-based access, data masking, and compliance measures like SOC 2 Type II certification.

Enable Self-Service BI: Combine centralized governance with user-friendly dashboards and AI copilots to empower teams.

Scale Thoughtfully: Start with pilot projects, monitor performance, and expand based on usage patterns.

5-Step Framework for Delivering Enterprise Analytics Solutions


Evaluating Enterprise Readiness for Analytics

Before diving into an analytics platform implementation, it’s crucial to determine whether your organization is ready to support scalable analytics. This step ensures your data quality, governance frameworks, and infrastructure are up to the task of handling AI-driven analytics. Skipping this evaluation could lead to failed implementations and wasted resources.

The readiness assessment focuses on two key areas: (1) data quality and governance, and (2) infrastructure scalability. Both need to meet enterprise standards to ensure your analytics platform delivers reliable insights. Organizations that take the time to evaluate their readiness often avoid costly setbacks and achieve faster results.

Checking Data Quality and Governance Requirements

Data quality is the backbone of any successful analytics initiative. If your data is incomplete, inconsistent, or inaccurate, even the most sophisticated AI tools can’t produce reliable results. Ash Rai, Technical Product Manager at FindAnomaly, sums it up perfectly: "Bad data + pretty visualization = expensive mistakes."

Start by evaluating your data for accuracy, completeness, consistency, and timeliness. Catalog your existing data assets and their structure. It’s worth noting that 80-90% of business data is unstructured, which means many organizations face significant hurdles in preparing their data for analytics.
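As a rough illustration of the completeness and timeliness checks described above, here is a minimal Python sketch. The function name `profile_quality`, the record layout, and the freshness threshold are all assumptions made for this example; accuracy and consistency checks usually need domain-specific rules and are not covered here.

```python
from datetime import datetime, timedelta


def profile_quality(rows, required_fields, timestamp_field, max_age, now):
    """Return completeness and timeliness ratios for a list of dict records.

    Hypothetical helper: a record is "complete" when every required field is
    present and non-empty, and "fresh" when its timestamp is within max_age.
    """
    total = len(rows)
    if total == 0:
        return {"completeness": 0.0, "timeliness": 0.0}

    complete = sum(
        all(row.get(name) not in (None, "") for name in required_fields)
        for row in rows
    )
    fresh = sum(
        row.get(timestamp_field) is not None
        and now - row[timestamp_field] <= max_age
        for row in rows
    )
    return {"completeness": complete / total, "timeliness": fresh / total}


if __name__ == "__main__":
    sample = [
        {"id": 1, "email": "a@example.com", "updated_at": datetime(2025, 1, 9)},
        {"id": 2, "email": "", "updated_at": datetime(2025, 1, 9)},
    ]
    print(profile_quality(sample, ["id", "email"], "updated_at",
                          timedelta(days=7), datetime(2025, 1, 10)))
```

Ratios like these can be tracked per table over time, so a drop in completeness or freshness is noticed before it reaches a dashboard.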

Data governance goes beyond just documentation - it’s an operational framework that requires clear accountability. Assign roles like Data Owners, who ensure domain accuracy; Data Stewards, who oversee daily quality management; and Data Custodians, who handle technical implementation. Without these roles in place, maintaining data quality becomes nearly impossible.

Tools like Querio’s semantic layer can simplify governance by centralizing business definitions, joins, and glossaries. This ensures consistent metrics and terminology across the organization. Querio also automates critical security measures, such as Role-Based Access Control (RBAC), dynamic data masking, and row-level security policies, protecting sensitive data without adding manual overhead. For optimal performance, semantic views should be limited to 50-100 columns to avoid latency issues.
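To make the idea of role-based access, dynamic masking, and row-level security more concrete, the sketch below applies a per-role policy to query results in plain Python. It is not Querio's API; the `Policy` class, the `apply_policy` function, and the `"***"` mask token are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Hypothetical per-role policy (illustrative only)."""
    visible_columns: set                              # returned in clear text
    masked_columns: set                               # returned as "***"
    row_filter: dict = field(default_factory=dict)    # column -> required value


def apply_policy(rows, policy):
    """Filter rows, then mask or drop columns according to the policy."""
    result = []
    for row in rows:
        # Row-level security: skip rows that fail any filter condition.
        if any(row.get(col) != val for col, val in policy.row_filter.items()):
            continue
        shaped = {}
        for col, value in row.items():
            if col in policy.masked_columns:
                shaped[col] = "***"        # dynamic masking
            elif col in policy.visible_columns:
                shaped[col] = value        # allowed column
            # Any other column is dropped entirely.
        result.append(shaped)
    return result


if __name__ == "__main__":
    eu_analyst = Policy(
        visible_columns={"region", "customer", "revenue"},
        masked_columns={"email"},
        row_filter={"region": "EU"},
    )
    data = [
        {"region": "EU", "customer": "Ada", "email": "ada@example.com", "revenue": 120},
        {"region": "US", "customer": "Bob", "email": "bob@example.com", "revenue": 90},
    ]
    print(apply_policy(data, eu_analyst))
```

In practice these rules are usually enforced in the warehouse or semantic layer rather than in application code, so that every tool sees the same restrictions.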

Consider Kiwi.com’s success: by consolidating thousands of fragmented data assets into 58 discoverable data products, they reduced their central engineering workload by 53% and boosted data-user satisfaction by 20%. This highlights how strong governance frameworks can enhance efficiency and user experience, setting the stage for scalable AI analytics.

Reviewing Current Infrastructure and Scalability

Data quality is only part of the equation - your technical infrastructure must also support fast, live analytics. Real-time analytics requires live connections to major data warehouses like Snowflake, BigQuery, and Postgres. High-latency copies or batch processing simply won’t cut it.

Evaluate your infrastructure’s flexibility. Can it integrate seamlessly across various data systems, models, and interfaces? Scalable systems separate data preparation (ETL/ELT) from consumption layers (reporting and AI queries). This separation ensures that background processes don’t slow down interactive performance, which is critical when users expect results in milliseconds.

Assess your infrastructure under both peak and average workloads. Measure peak concurrent capacity unit (CU) usage during high-demand periods and steady-state usage during normal operations. For mission-critical workloads, size your capacity based on peak 30-second usage to avoid hitting limits during spikes.
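One way to compare peak and steady-state demand is to compute the highest 30-second average from per-second usage samples. The sketch below assumes you can export capacity unit readings as one number per second; the function name and the sample data are invented for illustration.

```python
def peak_window_average(samples, window=30):
    """Return the highest average over any `window` consecutive samples.

    Assumes one usage reading per second, so window=30 approximates
    peak 30-second usage. Shorter series fall back to their overall average.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    if not samples:
        return 0.0
    if len(samples) < window:
        return sum(samples) / len(samples)

    running = sum(samples[:window])
    best = running
    for i in range(window, len(samples)):
        # Slide the window forward by one sample.
        running += samples[i] - samples[i - window]
        best = max(best, running)
    return best / window


if __name__ == "__main__":
    # One minute of baseline load, a 30-second spike, then baseline again.
    usage = [10] * 60 + [40] * 30 + [10] * 60
    print("steady-state:", sum(usage) / len(usage))
    print("peak 30s:", peak_window_average(usage))
```

The gap between the two numbers shows how much headroom a mission-critical workload would need if it were sized on averages alone.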

| Workload Tier | Criticality | Capacity Strategy |
|---|---|---|
| Tier 1 | Mission-Critical | Dedicated large capacity; proactive scaling; surge protection enabled |
| Tier 2 | Business Operational | Shared medium-sized capacity; consolidation of compatible workloads |
| Tier 3 | Ad hoc / Non-critical | Smaller shared capacity; cost efficiency prioritized over performance |

Take Autodesk’s example: by re-architecting their analytics platform with Snowflake, they achieved a 10x increase in data ingestion speed and reduced platform support staff by two-thirds. Such transformations are only possible when scalability needs are thoroughly assessed in advance.

Modern infrastructure must also handle multimodal data - structured tables, text, images, video, and even vector embeddings for AI. Containerized designs using Kubernetes allow for workspace-level isolation and multi-tenant environments, ensuring both security and performance. A detailed infrastructure review lays the groundwork for a scalable, AI-ready analytics platform.

Setting Up Scalable AI-Driven Analytics Platforms

Once your organization is ready to embrace AI-driven analytics, the next step is implementing a platform that can handle large-scale operations without sacrificing speed or security. A well-structured platform with live data connections, AI-powered tools, and strong security measures forms the backbone of a reliable system. This setup ensures you avoid pitfalls like unclear objectives or poor data quality.

The process revolves around three key areas: connecting to live data sources, leveraging AI notebooks for advanced analysis, and adhering to enterprise-level security standards. Organizations that excel in these areas have reported up to 40% faster insight delivery and 25% lower Total Cost of Ownership compared to traditional BI platforms. As the Datahub Analytics Team aptly points out:

"A low TCO might look good on paper, but if your analysts still spend 70% of their time preparing data instead of analyzing it, your organization is saving money but losing opportunity."

Below, we’ll break down how to establish live data connections, analyze data with AI tools, and secure your platform effectively.

Connecting to Live Data Sources

A scalable analytics platform starts with direct data integration, allowing you to connect to your data warehouse without relying on exported files or redundant copies. For example, Querio connects directly to Snowflake, BigQuery, and Postgres using encrypted, read-only credentials. This ensures your data remains secure in its original location while being instantly available for analysis.

By eliminating unnecessary data replication, this approach reduces latency and minimizes security risks. Enterprises typically use VPNs, IP allowlists, or Private Links to securely connect on-premises systems to cloud-based data. Implementing least-privilege access is crucial - assign roles with only the permissions they need. For instance, a ROLE_INGEST for data pipelines can help reduce your system's exposure to potential threats. Platforms like BigQuery automatically encrypt data both at rest and in transit, but it’s still wise to scrub or mask sensitive data during ingestion to avoid storing personally identifiable information (PII) in analytical warehouses.
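Scrubbing PII during ingestion can take several forms. The sketch below shows one option, replacing sensitive fields with a keyed hash so records can still be joined without storing the raw value. The function name, field names, and key handling are assumptions for illustration; a real pipeline would load the key from a secrets manager.

```python
import hashlib
import hmac


def pseudonymize(record, pii_fields, secret_key):
    """Return a copy of `record` with PII fields replaced by HMAC-SHA256 digests.

    Hypothetical ingestion step: the same input and key always yield the same
    token, so joins still work, but the raw value never reaches the warehouse.
    """
    cleaned = dict(record)
    for name in pii_fields:
        value = cleaned.get(name)
        if value is None:
            continue
        digest = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
        cleaned[name] = digest.hexdigest()
    return cleaned


if __name__ == "__main__":
    key = b"demo-key-not-for-production"  # placeholder key for the example
    row = {"email": "ada@example.com", "plan": "pro"}
    print(pseudonymize(row, ["email"], key))
```

Keyed hashing is a swapped-in technique here, not something the platforms above prescribe; outright removal or format-preserving masking may suit some fields better.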

Using AI Python Notebooks for Advanced Analysis

Once live data connectivity is in place, the next step is enabling advanced analysis with AI-powered notebooks. Querio’s Python notebooks allow analysts to run complex models directly within the platform, eliminating the need for external tools. To optimize performance, size your system based on peak 30-second usage and colocate compute resources with storage to reduce latency.

One major benefit of this setup is the separation of heavy background processes, like model training, from interactive queries. This prevents resource contention and keeps the platform running smoothly. Start with smaller computing units and scale up as you identify usage trends over time.

Meeting Security and Compliance Standards

For enterprises, meeting stringent security and compliance standards is non-negotiable. Platforms like Querio comply with SOC 2 Type II standards and offer 99.9% uptime, ensuring reliability for mission-critical workloads.

Security best practices include using IAM (Identity and Access Management), row- and column-level controls, and VPC Service Controls. To prevent PII exposure and comply with regulations like GDPR or the EU AI Act, implement an LLM gateway from the beginning. This gateway can filter out sensitive data and enforce toxicity filters.
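To give a sense of what the filtering step in an LLM gateway might do, here is a deliberately simple sketch that redacts two kinds of sensitive values from a prompt before it is sent to a model. The patterns and the `redact_prompt` name are illustrative assumptions; production gateways typically rely on dedicated PII detection rather than two regular expressions, and toxicity filtering is not shown.

```python
import re

# Simplified patterns for illustration; real PII detection needs broader coverage.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_prompt(text):
    """Replace email addresses and US SSN-shaped numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    return SSN_PATTERN.sub("[SSN]", text)


if __name__ == "__main__":
    print(redact_prompt("Contact ada@example.com, SSN 123-45-6789."))
```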

Data residency requirements often dictate where your infrastructure operates. For instance, to comply with regional standards like the EU Data Boundary, ensure that both your tenant and compute resources are located within the required geographic area. Additionally, detailed audit logs that track user and system events are essential for providing a compliance trail during security reviews.

Building Self-Service Business Intelligence

Self-service BI gives business users the ability to answer their own questions quickly by combining centralized data governance with easy-to-use tools designed for non-technical users. This approach - described as "discipline at the core, flexibility at the edge" - relies on strong governance while enabling independent data exploration.

When executed well, this model delivers real results. While over 80% of companies are exploring AI, only 15% succeed in blending generative AI with their proprietary data. The difference? Governance. By maintaining a certified semantic layer and allowing users to freely explore within that framework, organizations achieve better outcomes. As Tableau explains:

"Modern, self-service business intelligence has the potential to drive enterprise transformations by empowering people to ask and answer their own questions with trusted, secure data."

– Tableau

The key lies in balancing three critical elements: automated executive dashboards, AI copilots for simplifying complex queries, and governance frameworks that ensure data accuracy and compliance. This setup reduces the workload on data teams while maintaining control and precision across the organization, extending the AI-driven analytics framework already discussed.

Building Executive Dashboards and Reports

Executives need quick access to real-time KPIs without the hassle of manual report creation. Querio's dashboard tools allow data teams to build certified, auto-refreshing dashboards, eliminating the repetitive tasks of exporting data, formatting spreadsheets, and sending emails.

The magic lies in separating the data layer from the reporting layer. Data teams create and certify semantic models that define how tables connect, clarify metric definitions, and ensure calculations are accurate. These models become the "single version of the truth" that everyone can rely on. Report creators then connect directly to these models via live connections, ensuring they always work with accurate, up-to-date data.

For critical dashboards, performance is a priority. Enterprises often set benchmarks like report load times under eight seconds for up to 100 concurrent users. Querio meets these demands by connecting directly to data warehouses like Snowflake, BigQuery, or Postgres, avoiding the delays and security risks of duplicating data into separate BI tools.
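A benchmark like this can be checked with a small concurrency test. The sketch below simulates a number of users loading a report at the same time and verifies that every load finishes under the limit. `load_report` is a hypothetical callable standing in for whatever opens your dashboard, and using the slowest load as the pass criterion is an assumption; some teams prefer a percentile.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def measure_load_times(load_report, users=100):
    """Call `load_report` once per simulated user, concurrently, and time each call."""
    def timed_call(_):
        start = time.perf_counter()
        load_report()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=users) as pool:
        return list(pool.map(timed_call, range(users)))


def meets_benchmark(durations, limit_seconds=8.0):
    """True when there is at least one measurement and all are under the limit."""
    return bool(durations) and max(durations) < limit_seconds


if __name__ == "__main__":
    # Stand-in for a real dashboard request.
    durations = measure_load_times(lambda: time.sleep(0.05), users=100)
    print("slowest load:", round(max(durations), 3), "s")
    print("benchmark met:", meets_benchmark(durations))
```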

Using AI Copilots to Simplify Data Queries

AI copilots take the burden of ad hoc queries off analysts, turning plain-English questions into charts and insights almost instantly. While dashboards provide a broad overview, AI copilots cater to specific, on-the-spot inquiries with ease and speed.

Querio's AI copilot leverages the same certified semantic layer that powers dashboards. For example, when a marketing manager asks, "What was our customer acquisition cost last quarter by channel?", the AI uses pre-defined business rules to deliver results. This approach can speed up insight delivery by up to 40% compared with traditional workflows.

To make AI copilots effective at scale, granular access controls are essential. Data teams can set read-only permissions and define which data subsets each user or department can access, safeguarding sensitive information while supporting exploration. As Jennifer Leidich, Co-Founder & CEO of Mercury, shares:

"What used to be a week-long process now takes minutes."

– Jennifer Leidich, Mercury

For enterprises, a zone-based strategy can help manage AI adoption. Zone 1 handles low-risk, personal queries with basic logging. Zone 2 covers departmental reports that need human review and template approval. Zone 3 manages high-stakes or regulatory outputs, complete with audit trails and formal approvals.
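The three zones can be expressed as a small routing rule. The sketch below is one possible encoding; the scope labels, the `regulated` flag, and the choice to send unknown scopes to the strictest zone are assumptions made for the example.

```python
# Controls per zone, taken from the description above.
ZONE_CONTROLS = {
    1: ("basic logging",),
    2: ("human review", "template approval"),
    3: ("audit trail", "formal approval"),
}


def assign_zone(scope, regulated=False):
    """Map a request to a zone number.

    Hypothetical rule: regulated output always goes to Zone 3, and
    unrecognized scopes default to the strictest zone.
    """
    if regulated:
        return 3
    if scope == "personal":
        return 1
    if scope == "departmental":
        return 2
    return 3


def required_controls(scope, regulated=False):
    """Return the controls that apply to a request."""
    return ZONE_CONTROLS[assign_zone(scope, regulated)]


if __name__ == "__main__":
    print(required_controls("personal"))
    print(required_controls("departmental"))
    print(required_controls("departmental", regulated=True))
```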

Maintaining Data Team Oversight

Even with trusted dashboards and AI tools in place, maintaining oversight is essential for long-term data integrity. By using the governance framework described earlier, data teams can monitor and validate every query and update, ensuring smooth operations as self-service usage grows.

A content endorsement system plays a key role here. Data teams mark semantic models as "Certified" to indicate they've been checked for quality and compliance. Users can see these labels in the catalog, ensuring they work with trusted data. "Promoted" labels, on the other hand, highlight community-driven content that, while not fully certified, is still useful for exploratory work.

Activity logs track every query, dashboard view, and data access event. These logs are invaluable for compliance reviews and help data teams understand how resources are being used. If a certified model is updated, lineage and impact analysis tools show which downstream reports will be affected, preventing disruptions.
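Impact analysis is essentially a graph traversal over lineage metadata. The sketch below assumes lineage is available as a mapping from each asset to the assets that read from it directly; the asset names and the `downstream_assets` function are hypothetical.

```python
from collections import deque


def downstream_assets(lineage, changed):
    """Return every asset that depends, directly or indirectly, on `changed`.

    `lineage` maps an asset name to the assets that consume it directly.
    """
    affected = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, ()):
            if child not in affected:
                affected.add(child)
                queue.append(child)
    affected.discard(changed)  # guard against cycles pointing back to the start
    return affected


if __name__ == "__main__":
    lineage = {
        "sales_model": ["revenue_dashboard", "churn_report"],
        "revenue_dashboard": ["board_pack"],
        "hr_model": ["headcount_report"],
    }
    print(sorted(downstream_assets(lineage, "sales_model")))
```

Running a check like this before publishing a change to a certified model gives report owners a chance to review what will be affected.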

This system allows data teams to focus on improving the larger data architecture instead of handling endless one-off requests. As Smartbridge puts it:

"Governance is not meant to stifle creativity. Makers and developers need the freedom to experiment, but in a framework that ensures secure, compliant, and cost-effective adoption."

– Smartbridge

Monitoring and Scaling Analytics Delivery

Setting up self-service tools is just the beginning. The real challenge is keeping analytics systems running smoothly as usage grows and business needs evolve. Think of analytics systems like software systems - they require constant monitoring and fine-tuning. As Tristan Handy, Founder & CEO of dbt Labs, puts it:

"Once you deploy, you aren't testing code anymore, you're testing systems - complex systems made up of users, code, environment, infrastructure, and a point in time."

The goal is to strike a balance between quickly delivering insights and maintaining system stability. This requires teams to keep a close eye on performance, gather feedback, and scale operations in a thoughtful manner. These practices build on the foundational principles of scalable and secure analytics.

Starting with Pilot Projects

Pilot projects are a smart way to test analytics delivery on a smaller scale before rolling out solutions organization-wide. Focus on areas where analytics can have an immediate impact.

Classify pilot projects by their importance to the business. For example:

Tier 1: Mission-critical workloads that demand dedicated resources, high availability (99%), and proactive scaling.

Tier 2: Key business functions that share resources and require moderate capacity.

Tier 3: Non-critical or ad-hoc workloads, where cost efficiency is prioritized over speed.

Keep pilots small and run them in short cycles to minimize risk and speed up development. Automated rollback mechanisms are essential - if something goes wrong, you can fix it quickly without disrupting users. This approach complements earlier strategies for integrating live data and maintaining strong governance.

Creating Feedback Loops for Improvement

Feedback loops are essential for turning raw data into actionable improvements. Track metrics like Time to Detect (TTD) and Time to Resolve (TTR) using detailed activity logs. These insights help identify bottlenecks and prioritize feature enhancements.
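As a small worked example, TTD and TTR can be derived from incident timestamps pulled out of activity logs. The sketch below assumes TTD is measured from when an issue started to when it was detected, and TTR from detection to resolution; definitions vary between teams, and the field names are invented for illustration.

```python
from datetime import datetime
from statistics import mean


def incident_metrics(incidents):
    """Return mean Time to Detect and Time to Resolve, in minutes.

    Each incident is a dict with "started", "detected", and "resolved"
    datetimes (hypothetical field names).
    """
    if not incidents:
        return {"mean_ttd_minutes": None, "mean_ttr_minutes": None}

    ttd = [(i["detected"] - i["started"]).total_seconds() / 60 for i in incidents]
    ttr = [(i["resolved"] - i["detected"]).total_seconds() / 60 for i in incidents]
    return {"mean_ttd_minutes": mean(ttd), "mean_ttr_minutes": mean(ttr)}


if __name__ == "__main__":
    log = [
        {
            "started": datetime(2025, 1, 6, 9, 0),
            "detected": datetime(2025, 1, 6, 9, 10),
            "resolved": datetime(2025, 1, 6, 9, 40),
        },
        {
            "started": datetime(2025, 1, 7, 14, 0),
            "detected": datetime(2025, 1, 7, 14, 30),
            "resolved": datetime(2025, 1, 7, 15, 0),
        },
    ]
    print(incident_metrics(log))
```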

Introduce chargeback systems to monitor analytics usage by department or team. Transparent cost tracking not only highlights the value of analytics but also encourages more efficient use of resources. Additionally, conducting regular audits of decisions driven by AI can reveal where automation works best and where human input is still necessary.
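A basic chargeback calculation splits a shared platform bill in proportion to each department's usage. The sketch below assumes usage is logged as (department, units) pairs, where a unit could be query seconds or capacity units; the function name and the proportional allocation rule are illustrative choices, not a prescribed method.

```python
from collections import defaultdict


def allocate_costs(usage_events, total_cost):
    """Split `total_cost` across departments in proportion to logged usage.

    `usage_events` is an iterable of (department, units) pairs.
    Amounts are rounded to two decimal places.
    """
    usage = defaultdict(float)
    for department, units in usage_events:
        usage[department] += units

    total_units = sum(usage.values())
    if total_units == 0:
        return {department: 0.0 for department in usage}

    return {
        department: round(total_cost * units / total_units, 2)
        for department, units in usage.items()
    }


if __name__ == "__main__":
    events = [("marketing", 30), ("finance", 50), ("marketing", 20)]
    print(allocate_costs(events, 1000.0))
```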

Once pilots are successful and feedback mechanisms are in place, the next step is to expand the platform's capabilities using Querio's AI tools.

Expanding with Querio's AI Tools

Querio’s architecture is designed for seamless expansion across teams and departments, without requiring a complete overhaul of your tech stack. Its AI Python notebooks let data teams dive deeper into analysis while using the same governed data that powers dashboards and AI copilots. This ensures consistency while enabling advanced use cases.

To maintain performance, separate compute resources for heavy tasks - like Spark ETL or AI model training - from those used for interactive queries. This prevents slowdowns during intensive processing, keeping interactive queries fast and responsive.

As teams gain expertise, consider shifting from a centralized Center of Excellence (CoE) to a federated model. In this setup, departments take ownership of their data processing while relying on a shared IT-managed infrastructure. This strikes a balance between agility and strong governance.

Scale resources based on actual peak usage. Keep in mind that increasing service levels (e.g., from 99% to 99.9% uptime) requires significantly more resources, so scale carefully based on real business needs. These strategies ensure that the secure and governed environment established earlier remains intact.
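The difference between service levels is easier to weigh when expressed as permitted downtime. The short calculation below assumes a 30-day month; the function name is made up for the example.

```python
def allowed_downtime_minutes(availability_percent, period_days=30):
    """Minutes of downtime permitted per period at a given availability target."""
    total_minutes = period_days * 24 * 60
    return (100 - availability_percent) * total_minutes / 100


if __name__ == "__main__":
    for target in (99, 99.9):
        minutes = allowed_downtime_minutes(target)
        print(f"{target}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

At 99% the budget is roughly 7.2 hours per 30 days, while 99.9% leaves only about 43 minutes, which is why the higher target tends to require more redundancy and spare capacity.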

Querio also extends embedded self-service analytics to external users, like customers or partners, without duplicating infrastructure. The same governance framework, semantic layer, and AI tools that support internal teams can be embedded into customer-facing applications. This creates opportunities for new revenue streams while maintaining control over data access and quality.

Conclusion: Delivering Analytics to Enterprise Customers

Summary of Main Strategies

Enterprise analytics thrives when built on a foundation of strong data governance, scalable systems, user-friendly tools, and continuous monitoring. Start by evaluating data quality and existing systems, then connect to live data sources while implementing robust security measures and compliance frameworks, such as SOC 2 Type II certification.

Self-service tools, like AI copilots that convert natural language into precise queries, play a key role in streamlining decision-making and reducing the strain on data teams. However, self-service doesn’t mean a lack of oversight. Governance remains essential, with data teams using semantic layers, access controls, and routine audits to ensure accuracy and security. As the Datahub Analytics Team aptly puts it:

"BI success today is not about how much you spend, but how fast you learn."

These practices not only accelerate insights but also cut costs compared to traditional methods.

Scaling should be approached carefully, starting with pilot projects and expanding based on actual usage patterns. Tiered resource allocation ensures that critical tasks maintain top performance as adoption grows. This cohesive strategy transforms solid infrastructure and governance into a competitive edge for enterprise analytics.

Why Querio Works for Enterprises

Querio is built with these principles in mind, offering a platform tailored for enterprise needs. By combining live connectivity, governed AI, and embedded analytics, Querio eliminates the need for data movement or complex ETL workflows. It connects directly to platforms like Snowflake, BigQuery, and Postgres with read-only access, ensuring data remains secure and intact while delivering instant insights. Its AI-powered Python notebooks enable advanced analysis on the same governed data used for dashboards and copilots.

The results speak for themselves. In 2025, Jennifer Leidich from Mercury highlighted a shift from processes that once took a week to delivering results in minutes. Similarly, Guilia Acchioni Mena, Co-Founder at Zim, reported saving 7–10 hours per week immediately after adopting Querio. These time savings underscore Querio's emphasis on Time-to-Insight over Total Cost of Ownership - a critical advantage in today’s fast-paced business environment. With SOC 2 Type II certification, precise access controls, and privacy-focused AI, Querio meets the highest enterprise security standards. Its embedded analytics capabilities further extend a governed, seamless experience to both internal teams and external partners.

FAQs

How do AI tools improve the speed and accuracy of enterprise analytics?

AI tools simplify enterprise analytics by automating tasks like data preparation, identifying patterns, and using advanced models to deliver real-time insights businesses can act on. This not only speeds up the data analysis process but also enhances the accuracy of predictions, enabling companies to make quicker and more informed decisions.

With AI, businesses can address tough challenges such as merging vast data sets, maintaining data accuracy, and scaling analytics to keep up with changing demands. The outcome is a smoother decision-making process that leads to improved results across the organization.

What are the key steps to evaluate if an organization is ready for scalable analytics?

Evaluating an organization’s ability to scale analytics requires a close look at its data, technology, and people. Start by examining the data foundation. Is the data accurate, well-organized, and properly integrated? It’s also crucial to have strong security and compliance policies in place to protect sensitive information.

Next, assess the analytics infrastructure. A scalable system should be cloud-ready, modular, and able to handle increasing demands without breaking a sweat. This ensures the technology can grow alongside the organization’s needs.

Don’t overlook the people side of the equation. Teams - whether they’re data scientists or business users - need the right skills and resources to embrace AI-powered tools. Providing proper training and support is key to fostering adoption and maximizing value.

Lastly, tie analytics efforts to clear business objectives. Goals like speeding up decision-making or reducing time-to-insight help keep initiatives aligned with broader strategic priorities.

By auditing data quality, evaluating infrastructure, ensuring teams have the necessary skills, and setting measurable goals, organizations can pinpoint areas for improvement and make smarter investments to scale their analytics capabilities.

Why is a strong data governance framework essential for successful self-service BI?

A well-structured data governance framework is essential for making self-service BI both reliable and secure. By establishing clear roles, policies, and quality benchmarks, governance ensures that everyone - from analysts to business leaders - can confidently rely on the data they use. This approach minimizes errors, safeguards sensitive information, and frees up IT teams to focus on strategic priorities rather than constantly fixing data-related issues.

When governance is seamlessly woven into self-service BI, it empowers users to explore and analyze data independently while staying compliant with security protocols. The result? Quicker insights, better adoption rates, and fewer operational slowdowns, as repetitive data-cleaning tasks and mistakes are significantly reduced. In essence, strong governance strikes the perfect balance between flexibility and maintaining high data standards, fostering trust and efficiency across the business.