Building a Modern Data Layer for Embedded Analytics

Business Intelligence

Jan 1, 2026

Build a secure, governed data layer that connects live to cloud warehouses, enforces consistent metrics, and uses AI to automate transformations for real-time embedded analytics.

Embedded analytics demands fast, consistent, and real-time data. Traditional systems, relying on batch processing and complex pipelines, often fail to meet these needs. A modern data layer solves these issues by connecting directly to cloud data warehouses like Snowflake and BigQuery, so every query runs against live, secure data. Key components include:

Live Data Connections: Direct read-only access eliminates delays and ensures accuracy.

Semantic Governance Layer: Centralized metric definitions maintain consistency across tools.

AI-Powered Transformation: Generates SQL and Python from plain-language questions, making data accessible to non-technical users.

This approach simplifies workflows, reduces inefficiencies, and delivers real-time insights without compromising security or scalability.


Core Components of a Modern Data Layer

To build a modern data layer that addresses the common pitfalls of traditional analytics - like outdated data and inconsistent metrics - you need three essential components: live connectivity, a semantic governance layer, and AI-driven transformation tools. Each plays a key role in creating a system that's fast, reliable, and easy to manage.

Live Data Connections to Warehouses

Instead of exporting or copying data, Querio connects directly to data warehouses and databases like Snowflake, BigQuery, and Postgres, so data stays secure and up to date. These connections use encrypted, read-only access, so queries run against live data without any risk to data integrity.

This setup eliminates the delays and security concerns associated with data replication. Plus, with read-only credentials, AI tools can safely explore your data without the possibility of accidental edits or deletions.

Semantic Layer for Data Governance

The semantic layer acts as a bridge between your database's technical structure and the business concepts your team uses daily. With Querio, data teams define relationships, metrics, and business rules once, ensuring consistency across all dashboards and reports. Any updates to metric definitions automatically apply across every tool and application, solving the problem of departments calculating the same KPI in different ways.
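A minimal sketch of "define once, reuse everywhere" might look like the following. The `Metric` class, the `METRICS` registry, and the `events` table are hypothetical illustrations, not Querio's or dbt's actual format. The point is that every report compiles SQL from the same central definition.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Metric:
    name: str
    table: str
    expression: str                # aggregate SQL, e.g. COUNT(DISTINCT user_id)
    filters: Tuple[str, ...] = ()  # business rules that are part of the definition


# Hypothetical central registry: every report compiles its metrics from here,
# so "active_users" means the same thing everywhere.
METRICS = {
    "active_users": Metric(
        name="active_users",
        table="events",
        expression="COUNT(DISTINCT user_id)",
        filters=("event_type <> 'heartbeat'",),
    ),
}


def compile_metric(metric_name: str, group_by: List[str]) -> str:
    """Render one governed metric as SQL; unknown metric names raise KeyError."""
    m = METRICS[metric_name]
    select_cols = [*group_by, f"{m.expression} AS {m.name}"]
    sql = f"SELECT {', '.join(select_cols)} FROM {m.table}"
    if m.filters:
        sql += " WHERE " + " AND ".join(m.filters)
    if group_by:
        sql += " GROUP BY " + ", ".join(group_by)
    return sql


print(compile_metric("active_users", ["signup_month"]))
```

If the definition of an active user changes, only the registry entry is edited. Every dashboard that calls `compile_metric` picks up the new rule on its next query.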

"Moving metric definitions out of the BI layer and into the modeling layer allows data teams to feel confident that different business units are working from the same metric definitions, regardless of their tool of choice." - dbt Labs

This layer also streamlines security. Querio leverages Snowflake's "owner's rights" model, so users only need access to the semantic view - not the raw tables. Built-in masking policies and row-level access controls ensure sensitive data stays protected, all without the headache of managing complex permissions.
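In Snowflake, masking policies are written in SQL and enforced by the warehouse itself. The Python sketch below is not Snowflake syntax. It only mirrors the decision a masking policy makes, assuming a hypothetical `PII_ADMIN` role and an `email` column.

```python
from typing import Any, Dict, List

# Hypothetical rule: only this role may see raw email addresses.
UNMASKED_ROLES = {"PII_ADMIN"}


def mask_email(value: str, role: str) -> str:
    """Return the raw email for privileged roles, otherwise hide the local part."""
    if role in UNMASKED_ROLES:
        return value
    _, at, domain = value.partition("@")
    return f"***@{domain}" if at else "***"


def apply_masking(rows: List[Dict[str, Any]], role: str) -> List[Dict[str, Any]]:
    """Mask the email column before rows leave the governed layer."""
    return [{**row, "email": mask_email(row["email"], role)} for row in rows]


rows = [{"id": 1, "email": "ana@example.com"}]
print(apply_masking(rows, "ANALYST"))    # [{'id': 1, 'email': '***@example.com'}]
print(apply_masking(rows, "PII_ADMIN"))  # [{'id': 1, 'email': 'ana@example.com'}]
```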

AI-Powered Tools for Data Transformation

Querio’s AI-powered notebooks take the pain out of writing SQL and Python workflows. Instead of crafting queries manually, users can simply ask questions in plain language. The AI then generates the appropriate code, using the rules and definitions established in your semantic layer.

This not only cuts query turnaround times from days to minutes but also makes data exploration more accessible to non-technical users. By understanding your business rules, joins, and metrics, the AI ensures every query aligns with governance standards, reducing errors and boosting efficiency.
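The article does not describe how Querio's AI builds queries internally. One common pattern for keeping generated queries inside governance is to have the model emit a structured intent instead of free-form SQL, then compile that intent only from semantic-layer definitions. The sketch below assumes that pattern. The catalog and the intent format are hypothetical.

```python
import json
from typing import Dict, Set, Tuple

# Hypothetical semantic-layer catalog that generated queries must stay within.
GOVERNED_METRICS: Dict[str, Tuple[str, str]] = {"revenue": ("orders", "SUM(amount)")}
GOVERNED_DIMENSIONS: Dict[str, Set[str]] = {"orders": {"region", "order_month"}}


def build_query(intent_json: str) -> str:
    """Turn a model's structured intent into SQL using only governed definitions."""
    intent = json.loads(intent_json)
    metric = intent["metric"]
    if metric not in GOVERNED_METRICS:
        raise ValueError(f"metric {metric!r} is not defined in the semantic layer")
    table, expression = GOVERNED_METRICS[metric]
    dims = intent.get("group_by", [])
    unknown = [d for d in dims if d not in GOVERNED_DIMENSIONS[table]]
    if unknown:
        raise ValueError(f"ungoverned dimensions: {unknown}")
    cols = ", ".join([*dims, f"{expression} AS {metric}"])
    sql = f"SELECT {cols} FROM {table}"
    if dims:
        sql += " GROUP BY " + ", ".join(dims)
    return sql


# What a model might emit for "What was revenue by region?"
print(build_query('{"metric": "revenue", "group_by": ["region"]}'))
# SELECT region, SUM(amount) AS revenue FROM orders GROUP BY region
```

Because the SQL is assembled from the catalog rather than written by the model, a request for an undefined metric or column fails loudly instead of producing an ungoverned answer.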

Next, discover how these components come together to create a scalable, modern data layer with Querio.

Steps to Build a Scalable Data Layer with Querio

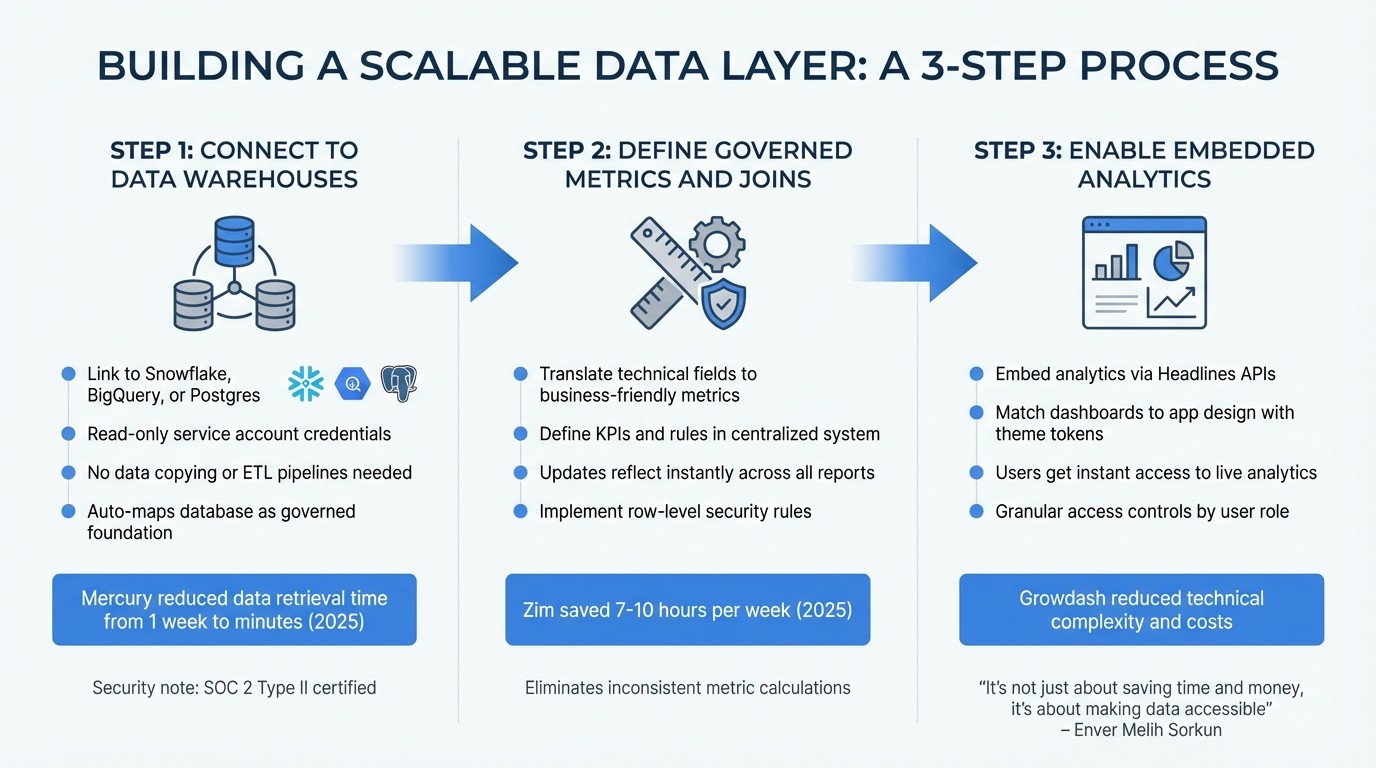

3 Steps to Build a Scalable Data Layer with Querio

Creating a scalable data layer doesn’t have to be a drawn-out process filled with complicated middleware. With Querio, you can set up a production-ready system in just three simple steps. This approach connects your data warehouses, standardizes metrics, and brings analytics straight to your end-users.

Step 1: Connect to Data Warehouses

Start by linking Querio to your data warehouse - whether you’re using Snowflake, BigQuery, or Postgres. Querio only needs read-only service account credentials, so your analytics can safely explore the data without risking accidental changes or deletions. This eliminates the need for data copying and ETL pipelines.
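Before handing credentials to any tool, it is worth confirming that the service role really is read-only. The sketch below assumes Snowflake-style privilege names and treats only `USAGE` and `SELECT` as read-only. The grant list is hard-coded for illustration. In practice you might collect it from Snowflake's `SHOW GRANTS TO ROLE` output or your warehouse's equivalent.

```python
from typing import List, Tuple

# Privileges treated as read-only in this sketch (Snowflake-style names; an assumption).
READ_ONLY_PRIVILEGES = {"USAGE", "SELECT"}


def non_read_only_grants(grants: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return the (privilege, object) pairs that allow more than reading."""
    return [(priv, obj) for priv, obj in grants if priv.upper() not in READ_ONLY_PRIVILEGES]


# Hypothetical grants on the analytics service role.
service_role_grants = [
    ("USAGE", "WAREHOUSE analytics_wh"),
    ("USAGE", "DATABASE prod"),
    ("SELECT", "ALL TABLES IN SCHEMA prod.public"),
    ("INSERT", "TABLE prod.public.orders"),
]
print(non_read_only_grants(service_role_grants))
# [('INSERT', 'TABLE prod.public.orders')]
```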

Once connected, Querio automatically maps your database, creating a governed data foundation that serves as your source of truth for both AI agents and business users. For example, in 2025, Mercury's Co-Founder & CEO Jennifer Leidich implemented this setup and slashed their data retrieval and visualization time from one week to just minutes. Before you get started, ensure your governance team reviews Querio’s SOC 2 Type II report to confirm it meets your enterprise security standards. This connection forms the backbone for defining your business metrics in the next step.

Step 2: Define Governed Metrics and Joins

With your data foundation in place, it’s time to build the business context. This step involves translating technical database fields into business-friendly metrics that your teams can easily understand and use.

Define KPIs and set rules in a centralized system. Any updates made here reflect instantly across all reports, solving the all-too-common issue of teams calculating metrics like "Active Users" differently. For instance, in 2025, Zim's Co-Founder Guilia Acchioni Mena reported saving 7–10 hours per week by establishing this unified context. This is also the stage to implement row-level security rules, ensuring sensitive data is filtered based on user roles before it even reaches a dashboard.
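Row-level rules are usually enforced inside the warehouse, for example with a row access policy. The Python sketch below only illustrates the logic: it uses a hypothetical policy table that maps roles to the regions they may see, and it denies by default, so an unknown role gets no rows at all.

```python
from typing import Any, Dict, List, Optional, Set

# Hypothetical policy table: regions each role may see (None means all rows).
ROW_POLICIES: Dict[str, Optional[Set[str]]] = {
    "global_admin": None,
    "emea_manager": {"EMEA"},
    "amer_analyst": {"AMER"},
}


def filter_rows(rows: List[Dict[str, Any]], role: str) -> List[Dict[str, Any]]:
    """Apply the role's row policy; unknown roles get nothing (deny by default)."""
    if role not in ROW_POLICIES:
        return []
    allowed = ROW_POLICIES[role]
    if allowed is None:
        return list(rows)
    return [row for row in rows if row["region"] in allowed]


rows = [{"region": "EMEA", "revenue": 100}, {"region": "AMER", "revenue": 200}]
print(filter_rows(rows, "emea_manager"))  # [{'region': 'EMEA', 'revenue': 100}]
print(filter_rows(rows, "intern"))        # []
```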

Step 3: Enable Embedded Analytics

Once your governed metrics are defined, you can embed analytics directly into your application. Querio’s headless APIs and theme tokens let you match dashboards to your app’s design, so the analytics feel like a native part of your platform. Users get instant access to live analytics, including charts, tables, and AI-generated insights.
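The article does not document Querio's theme-token format. A common way to apply design tokens to embedded components is to render them as CSS custom properties. The sketch below assumes that approach, and every token name in it is hypothetical.

```python
from typing import Dict

# Hypothetical theme tokens an app might pass to an embedded dashboard.
theme_tokens: Dict[str, str] = {
    "color.primary": "#4F46E5",
    "color.background": "#FFFFFF",
    "font.family": "Inter, sans-serif",
    "radius.card": "8px",
}


def tokens_to_css(tokens: Dict[str, str], selector: str = ":root") -> str:
    """Render tokens as CSS custom properties, e.g. color.primary -> --color-primary."""
    lines = [f"  --{name.replace('.', '-')}: {value};" for name, value in sorted(tokens.items())]
    return selector + " {\n" + "\n".join(lines) + "\n}"


print(tokens_to_css(theme_tokens))
```

Scoping the variables to a container selector instead of `:root` keeps the embedded styles from leaking into the host page.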

Granular controls ensure users only see data they’re authorized to access. In 2025, Growdash’s Co-founder & CTO Enver Melih Sorkun used this approach to give their team real-time data access while cutting down on the technical complexity and costs tied to traditional BI tools. As Sorkun explained:

"It's not just about saving time and money, it's about making data accessible."

Best Practices for Governance, Security, and Scalability

Setting up your data layer is just the first step. To keep it secure, well-governed, and efficient as your user base expands, you need to make deliberate design choices from the very beginning.

Ensuring Data Security

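Security should be baked in from the start. A SOC 2 Type II attestation, in which an independent auditor confirms that your security controls operated effectively over a review period, demonstrates enterprise-grade protection for your production data.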

One effective approach is implementing row-level security (RLS) directly within your SQL queries. By filtering data on user-specific attributes, such as WHERE org_id = 123, you ensure users can only access the rows they’re permitted to see. Always enforce this filtering on the server side, and take the filter value from the verified user identity rather than from request parameters. Use signed JWTs with a short, defined time-to-live (TTL) to convey user identity and roles. Because the server verifies the signature, a client cannot alter its own claims without invalidating the token.

For sensitive data like PII or financial records, field-level encryption safeguards information during transit and while stored. In multi-tenant setups, keeping detailed access logs is critical. These logs should capture every data access event, including the user role and applied filters, which is essential for compliance with frameworks like SOC 2 or HIPAA. As Casey Ciniello, Senior Product Manager at Infragistics, puts it:

"In regulated industries, analytics only succeeds when governance is embedded."

This solid security framework lays the groundwork for scaling your data environment effectively.
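For the access logs described above, one simple approach is to write each data-access event as a single JSON line to append-only storage. The sketch below assumes that approach, and all field names in it are hypothetical. It records the user, role, tenant, query, and the filters that were actually applied.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict, Optional


def audit_record(
    user_id: str,
    role: str,
    tenant_id: str,
    query_id: str,
    applied_filters: Dict[str, Any],
    now: Optional[datetime] = None,
) -> str:
    """Serialize one data-access event as a single JSON line for an append-only log."""
    ts = (now or datetime.now(timezone.utc)).isoformat()
    return json.dumps(
        {
            "ts": ts,
            "user_id": user_id,
            "role": role,
            "tenant_id": tenant_id,
            "query_id": query_id,
            "applied_filters": applied_filters,
        },
        sort_keys=True,
    )


line = audit_record(
    "user-1", "analyst", "org-123", "q-42", {"org_id": 123},
    now=datetime(2026, 1, 1, tzinfo=timezone.utc),
)
print(line)
```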

Optimizing Performance at Scale

As your data grows, maintaining performance becomes just as important as security. A decoupled storage and compute architecture is key - it lets you scale processing power independently from storage, adapting to demand. Pair columnar storage formats like Apache Parquet with partitioning to minimize the data scanned during queries.
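Partitioning pays off because a query engine can skip whole partitions that cannot match a filter. Engines such as Spark or Trino do this from partition metadata. The plain-Python sketch below only shows the idea. It assumes a hypothetical Hive-style layout with one Parquet directory per `event_date`.

```python
from datetime import date
from typing import List

# Hypothetical Hive-style layout: one directory of Parquet files per day.
partitions = [
    f"s3://example-bucket/events/event_date={d}/"
    for d in ("2025-12-30", "2025-12-31", "2026-01-01", "2026-01-02")
]


def partition_date(path: str) -> date:
    """Read the partition value from a path segment like event_date=2026-01-01."""
    value = path.split("event_date=")[1].split("/")[0]
    return date.fromisoformat(value)


def prune(paths: List[str], start: date, end: date) -> List[str]:
    """Keep only partitions inside [start, end]; the rest are never opened."""
    return [p for p in paths if start <= partition_date(p) <= end]


selected = prune(partitions, date(2025, 12, 31), date(2026, 1, 1))
print(f"{len(selected)} of {len(partitions)} partitions scanned")  # 2 of 4 partitions scanned
```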

For distributed workloads, spread processing across multiple nodes to enable parallel read and write operations. In streaming scenarios, align shards or partitions with your throughput needs, and use Spot Instances with automatic scaling to keep costs manageable during high-traffic periods. Tools like Amazon CloudWatch can help you monitor shard-level metrics and identify performance bottlenecks before they affect users.
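As a worked example of aligning shards with throughput, assume Amazon Kinesis Data Streams in provisioned mode. There, each shard accepts writes of up to 1 MB/s or 1,000 records/s, whichever limit is reached first. The 20% headroom and the 1 MB = 1,024 KB conversion are assumptions for this sketch.

```python
import math

# Per-shard write limits for Kinesis Data Streams in provisioned mode.
SHARD_WRITE_MB_PER_SEC = 1.0
SHARD_WRITE_RECORDS_PER_SEC = 1000


def shards_needed(records_per_sec: float, avg_record_kb: float, headroom: float = 1.2) -> int:
    """Size a stream by whichever per-shard write limit is hit first, plus headroom."""
    mb_per_sec = records_per_sec * avg_record_kb / 1024
    by_bytes = mb_per_sec / SHARD_WRITE_MB_PER_SEC
    by_records = records_per_sec / SHARD_WRITE_RECORDS_PER_SEC
    return math.ceil(max(by_bytes, by_records) * headroom)


# 5,000 records/s at 2 KB each is about 9.8 MB/s, so bytes (not record count) drive sizing.
print(shards_needed(5000, 2))                # 12
print(shards_needed(5000, 2, headroom=1.0))  # 10
```

With very small records the record-count limit dominates instead. For example, 5,000 records/s at 0.1 KB is only about 0.5 MB/s but still needs 5 shards. That is why aggregating records before sending them can reduce shard count.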

To maximize throughput for streaming data, aggregate records before transmission, even if it introduces slight latency. Standardize schema evolution controls to flag incompatible changes early, preventing disruptions in downstream pipelines. Aim for ≥ 90% automated test coverage and a ≥ 99.5% data-quality SLA on critical data attributes to maintain reliability as you scale.
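Schema registries such as Confluent Schema Registry enforce configurable compatibility modes for you. The sketch below is a simplified, hypothetical rule set that shows what "flag incompatible changes early" can mean in a CI check. It flags three kinds of change: removed fields, changed types, and new required fields that existing records do not have.

```python
from typing import Any, Dict, List

# Field name -> {"type": ..., "required": ...}; a simplified schema representation.
Schema = Dict[str, Dict[str, Any]]


def breaking_changes(old: Schema, new: Schema) -> List[str]:
    """List changes that would break downstream consumers of the old schema."""
    problems = []
    for name, spec in old.items():
        if name not in new:
            problems.append(f"removed field: {name}")
        elif new[name]["type"] != spec["type"]:
            problems.append(f"type change on {name}: {spec['type']} -> {new[name]['type']}")
    for name, spec in new.items():
        # Existing records lack a brand-new field, so making it required breaks reads.
        if name not in old and spec.get("required", False):
            problems.append(f"new required field: {name}")
    return problems


old = {"id": {"type": "int", "required": True}, "email": {"type": "string", "required": False}}
new = {"id": {"type": "string", "required": True}, "country": {"type": "string", "required": True}}
print(breaking_changes(old, new))
```

Adding an optional field passes the check, so teams can extend schemas freely while the pipeline still blocks changes that would break existing consumers.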

Conclusion

A modern data layer lays the groundwork for delivering consistent, actionable insights through embedded analytics. By connecting directly to cloud data warehouses, implementing a governed semantic layer, and using AI-powered automation, you can overcome the inconsistencies and delays often found in traditional BI systems.

The move from batch processing to real-time access is a game-changer. Real-time updates let users respond quickly to fraud, adjust pricing strategies, and make critical decisions with confidence. At the same time, row-level security ensures this speed doesn’t come at the cost of safety, while decoupled storage and compute keep performance scalable as data volumes grow.

Querio simplifies this entire process by combining these capabilities into a single platform. It offers AI-driven natural language querying, live connections to data warehouses, and enterprise-level governance. Because joins and metrics are defined just once, all users, from financial analysts to dashboard viewers, can rely on a single, trusted source of truth. And because data is never duplicated, there is only one copy to govern, with no hidden query fees.

What sets successful organizations apart isn’t just having the most advanced tools. It’s about building an automated, governed data foundation that ensures the right information reaches the right person at the right time. Start with impactful use cases, treat your semantic layer as a core product, and prioritize security from the outset.

When your data layer is designed for real-time decisions and strong governance, embedded analytics transforms from a technical hurdle into a powerful competitive advantage.

FAQs

How does a semantic governance layer help maintain consistent data across analytics tools?

A semantic governance layer plays a crucial role in maintaining data consistency by serving as a centralized hub for defining and standardizing business concepts, metrics, and hierarchies. For example, when terms like revenue or customer churn are defined in one place, every analytics tool, dashboard, or model pulls from the same source. This eliminates the risk of conflicting interpretations or mismatched calculations.

Beyond definitions, this layer connects logical terms to the underlying data tables. This means that any updates to the source data are automatically reflected without breaking reports or introducing errors. By enforcing rules like naming conventions, version control, and access permissions, it prevents issues commonly caused by ad-hoc SQL queries or manual calculations. The end result? Analytics you can trust - consistent, repeatable, and reliable - empowering faster and more accurate decision-making.

How does AI-powered data transformation enhance embedded analytics?

AI-driven data transformation takes the hassle out of preparing data for analytics by automating tasks such as cleaning, enriching, and unifying raw datasets. This automation not only speeds up the process but also ensures that embedded analytics can provide real-time insights directly within applications. The result? Fewer delays and a significant reduction in errors that often come with manual data handling. Plus, it easily scales to accommodate new data sources and schema changes, eliminating the need for expensive updates.

On top of that, AI brings predictive power to analytics by identifying patterns and trends that might go unnoticed with traditional methods. This opens the door to features like churn prediction, sales forecasting, and risk detection, all seamlessly built into analytics tools. With these capabilities, users can make proactive, informed decisions based on data. By simplifying workflows and delivering insights that are ready to act on, AI-powered transformation not only cuts costs but also boosts the overall effectiveness of embedded analytics solutions.

How does Querio ensure data security while enabling real-time analytics?

Querio places a strong emphasis on data security, integrating it seamlessly into every aspect of its modern data stack. This approach ensures users can access real-time analytics without putting sensitive information at risk. Queries operate within governed contexts, enforcing role-based permissions and automatically masking or redacting sensitive data before sharing results. For added protection, data in transit is safeguarded with TLS encryption, while data at rest is stored in encrypted, tenant-isolated cloud warehouses to eliminate the risk of cross-tenant access.

To connect applications to its analytics engine, Querio uses a secure, token-based API. This setup provides instant access to up-to-date insights without exposing credentials or raw data. A semantic layer further enhances security and usability by transforming raw tables into curated business objects. This limits query surfaces and ensures only pre-approved calculations are accessible. By blending these measures with strict governance policies, Querio delivers real-time insights while adhering to top-tier security standards.