AI Analytics Trends Shaping the Future of Business

Business Intelligence

Jan 30, 2026

AI analytics transforms business with real-time insights, NLP access, autonomous agents, predictive models, and strong data governance for reliable decisions.

AI analytics is transforming how businesses work by making data accessible, actionable, and faster to process. Companies are replacing outdated tools with modern business intelligence tools that offer real-time insights, natural language queries, and predictive capabilities. This shift saves employees time, improves decision-making, and creates a competitive edge.

Key Takeaways:

Real-Time Insights: AI analytics delivers instant answers, moving away from slow, manual reporting.

Simplified Access: Non-technical users can now interact with data using natural language, eliminating the need for complex skills like SQL.

Predictive and Prescriptive Analytics: AI forecasts outcomes and recommends actions to optimize operations and meet goals.

Automation: Tasks like anomaly detection, data cleaning, and root cause analysis are automated, freeing up teams for strategic work.

Governance: Strong data governance ensures accuracy, consistency, and trust in AI-driven insights.

AI analytics tools are becoming central to business strategies, enabling faster decisions and scalable solutions while reshaping how data is managed and used.


Top AI Analytics Trends Transforming Business Intelligence

Three key trends are reshaping how businesses unlock insights from their data. First, agentic analytics is taking AI from a supportive role to an autonomous one. For example, these systems can detect revenue drops, analyze their causes, and even initiate corrective actions - all without human intervention [3][2]. Instead of relying on manual analysis, AI agents now coordinate multi-step processes, cutting down on time and effort.

Automated insight discovery is another game-changer. Machine learning algorithms sift through massive datasets to identify anomalies, correlations, and trends [3]. In some enterprises, AI agents even handle root cause analysis across log files, reducing resolution times by half [3]. Multimodal AI takes it further, analyzing text, images, video, and audio to reveal hidden patterns and context [4]. When paired with repository intelligence - which understands the history and relationships within data and code - these systems provide smarter suggestions and more efficient automated solutions [4].
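
To make the idea of automated anomaly detection concrete, here is a minimal sketch that flags unusual days in a revenue series using a z-score. It is only an illustration: the data, the `find_anomalies` name, and the threshold of 2.0 are assumptions, and production systems generally use more sophisticated models.

```python
from statistics import mean, stdev

def find_anomalies(values, threshold=2.0):
    """Return (index, value, z_score) for points far from the sample mean."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (i, v, round((v - mu) / sigma, 2))
        for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]

# Made-up daily revenue figures with one obvious drop on day index 7.
daily_revenue = [1020, 980, 1005, 995, 1010, 990, 1000, 400, 1015, 985]
anomalies = find_anomalies(daily_revenue)
for index, value, z in anomalies:
    print(f"Day {index}: revenue {value} (z-score {z})")
```

A single extreme value inflates the standard deviation, so a median-based score may be a more robust choice when outliers are large or frequent.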

However, these advancements rely heavily on AI-ready data governance. Proper data labeling, synthetic data, and feature engineering are critical for ensuring accuracy and trust in AI models [1][5]. Without these foundational practices, even advanced tools can deliver inconsistent results.

Natural Language Processing for Data Access

Building on the power of autonomous analytics, natural language processing (NLP) is changing how people interact with data. With NLP, employees can ask questions in everyday language and get instant insights. The global NLP market was projected to reach $43 billion by 2025 [9]. This leap forward depends on a robust semantic layer - a centralized system of business logic and metadata - that translates conversational queries into precise database commands [7][8]. Without this layer, similar questions could yield inconsistent answers, a problem known as "metric drift."
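
The role of the semantic layer can be sketched in a few lines. In this hedged example, a simple keyword match stands in for a real language model, and the `METRICS` dictionary, the `orders` table, and the `question_to_sql` and `ask` helpers are all hypothetical. The point is only that two differently worded questions resolve to the same governed SQL, which is what prevents metric drift.

```python
import sqlite3

# Hypothetical governed definitions: one SQL expression per business term.
METRICS = {
    "revenue": "SUM(amount)",
    "order count": "COUNT(*)",
    "average order value": "SUM(amount) * 1.0 / COUNT(*)",
}

def question_to_sql(question):
    """Map a plain-English question to SQL using the shared definitions."""
    text = question.lower()
    # Try longer terms first so the most specific metric wins.
    for term in sorted(METRICS, key=len, reverse=True):
        if term in text:
            return f"SELECT {METRICS[term]} FROM orders WHERE status = 'completed'"
    raise ValueError(f"No governed metric matches: {question!r}")

# Small in-memory dataset for demonstration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (amount REAL, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(100.0, "completed"), (250.0, "completed"), (75.0, "refunded")],
)

def ask(question):
    """Return the generated SQL alongside the answer so it can be reviewed."""
    sql = question_to_sql(question)
    return sql, conn.execute(sql).fetchone()[0]

sql_a, answer_a = ask("What was our revenue?")
sql_b, answer_b = ask("Show me total REVENUE please")
print(sql_a, answer_a)
print(sql_b, answer_b)
```

Because the refund rule lives in one place, both phrasings exclude the refunded order and return the same figure.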

A great example comes from TELUS, where Senior Design Specialist Adam Walker standardized key performance indicators across multiple hardware vendors using a semantic layer. This allowed consistent analysis through natural language queries [8]. Similarly, The Home Depot empowered thousands of non-technical users to explore data independently, while ensuring results remained accurate and governed [8].

"The real challenge is bridging the gap between technical teams who organize the data and business leaders who just need answers."

Dael Williamson, EMEA Field CTO at Databricks [8]

Emerging standards like the Model Context Protocol (MCP) are also making waves. MCP can connect large language models to governed, semantic-rich metadata, helping AI tools return consistent answers. With worker access to AI projected to rise by 50% in 2025 [6], strong governance frameworks are essential for scaling NLP capabilities while maintaining accuracy.

AI-Powered Augmented Analytics

Augmented analytics automates repetitive data tasks like cleaning, anomaly detection, and generating initial insights. This frees up data teams to focus on strategic analysis. In healthcare, for instance, Microsoft AI's Diagnostic Orchestrator achieved 85.5% accuracy in solving complex medical cases, compared to just 20% for experienced physicians [4]. AI’s ability to process large datasets allows it to spot patterns that human analysts might miss.

These systems also enforce rules, flag exceptions, and prepare data for analysis - all critical as data volumes grow and manual methods become impractical.

"The future isn't about replacing humans. It's about amplifying them."

Aparna Chennapragada, Chief Product Officer for AI Experiences at Microsoft [4]

The business intelligence market is projected to expand from $38.62 billion in 2025 to $116.25 billion by 2033, highlighting the transformative impact of this technology [7].

Predictive and Prescriptive Analytics

AI has moved beyond just revealing insights - it now forecasts outcomes and recommends actions to achieve specific goals. Predictive analytics uses historical and real-time data to anticipate events like demand fluctuations, credit risks, or equipment failures [12]. In 2026, prescriptive analytics is expected to combine forecasting with autonomous decision-making, enabling businesses to act on insights without manual intervention [10][11]. This approach aims to improve forecast accuracy, reduce inefficiencies, and scale decision-making without requiring additional staff.
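
The difference between predicting and prescribing can be shown with a deliberately simple sketch. The moving-average forecast, the safety stock of 20 units, and the sales figures below are illustrative assumptions rather than a method described by the cited sources. Real systems would use richer models, but the two-step shape is the same: forecast first, then turn the forecast into an action.

```python
def forecast_next(history, window=3):
    """Predictive step: estimate the next value as the mean of recent observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)

def recommend_reorder(forecast, on_hand, safety_stock=20):
    """Prescriptive step: units to order so stock covers the forecast plus a buffer."""
    return max(0, round(forecast + safety_stock - on_hand))

# Made-up weekly unit sales for a single product.
weekly_units_sold = [120, 135, 128, 140, 150, 145]

predicted = forecast_next(weekly_units_sold)
order_qty = recommend_reorder(predicted, on_hand=90)
print(f"Forecast: {predicted} units, recommended order: {order_qty} units")
```

Here the forecast is the average of the last three weeks (145.0 units), and the recommendation covers that demand plus the buffer, minus the 90 units already in stock.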

For instance, companies are identifying customers willing to pay more for sustainable products and optimizing energy usage with carbon scheduling [2]. Predictive systems are also becoming integral to daily operations - tools like Copilot and Bing now answer over 50 million health-related questions each day [4]. These capabilities are especially valuable as industries face workforce shortages, such as the World Health Organization's estimate of an 11 million shortfall in health workers by 2030 [4].

Successful implementation often follows the 80/20 rule: while technology provides about 20% of the value, the remaining 80% comes from rethinking workflows to fully utilize AI’s potential [2].

How Governance Supports Scalable AI Analytics

AI analytics can deliver incredible insights, but without proper governance, those insights can easily be misinterpreted or misused. As these tools become more widespread across organizations, governance acts as the safeguard, ensuring data remains secure, accurate, and ready for informed decision-making. Without it, companies risk inconsistent metrics and poor access controls. Alarmingly, 84% of digital transformation projects fail due to issues with data quality and governance, leading to losses estimated at 12% of annual revenue [15].

The urgency for governance grows as AI adoption accelerates. While only 20% of organizations have mature systems for managing autonomous AI agents [6], 84% of those with advanced AI maturity recognize governance as critical for their success [16]. A staggering 60% to 73% of enterprise data remains untapped for strategic purposes because fragmented controls make it inaccessible [15]. Effective governance not only unlocks this data but also builds trust in its use.

"You can't achieve AI velocity without governance maturity. The technical features of your platform get you a big, fast engine; governance is the sophisticated control system that ensures the data fueling that engine is safe and accurate enough for real-world decisions."

Semantic Context Layers for Consistent Metrics

Governance plays a key role in creating consistent, organization-wide analytics. A semantic layer translates raw database schemas into user-friendly terms like "Customer", "Order", or "Churn Rate." This prevents the problem of "dashboard logic", where formulas are duplicated - and often miscalculated - across different tools and departments. When metrics like Revenue or Gross Margin are defined in a semantic layer, they remain consistent, whether accessed through Tableau, Power BI, or an AI assistant.

Lockheed Martin offers a powerful example of semantic governance in action. The aerospace giant once managed 46 separate systems for data and analytics. By partnering with IBM in 2025, they consolidated these into a single, integrated environment. The result? A 50% reduction in data and AI tools, the replacement of all 46 legacy systems, and the automation of 216 data catalog definitions. This streamlined foundation now supports their "AI Factory", enabling 10,000 engineers to build and deploy solutions [16].

Modern semantic layers, defined in code (YAML, JSON, LookML), bring governance into the software development process. They can be peer-reviewed, version-controlled, and tested, ensuring consistent enforcement of access policies like Row-Level Security (RLS) and Role-Based Access Control (RBAC). This approach eliminates the inconsistencies that arise when business logic is scattered across individual dashboards.
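
As a rough sketch of what "governance as code" can look like, the example below uses Python dataclasses as a stand-in for YAML or LookML definitions. Every name here (`Metric`, `ROLE_METRICS`, `validate`, `compile_query`, the `orders` table) is hypothetical. It shows a validation check that could run in a review pipeline, a role-based access check, and a row-level filter applied to every generated query.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Metric:
    name: str
    expression: str
    table: str
    rls_column: str  # column used for the row-level security filter

# Hypothetical metric definitions that would live in version control.
METRICS = [
    Metric("revenue", "SUM(amount)", "orders", "region"),
    Metric("gross_margin", "SUM(amount - cost) * 1.0 / SUM(amount)", "orders", "region"),
]

# Hypothetical RBAC policy: which roles may query which metrics.
ROLE_METRICS = {
    "analyst": {"revenue"},
    "finance": {"revenue", "gross_margin"},
}

def validate(metrics):
    """A check that could run on every change: unique names, non-empty expressions."""
    names = [m.name for m in metrics]
    if len(names) != len(set(names)):
        raise ValueError("duplicate metric name")
    if any(not m.expression.strip() for m in metrics):
        raise ValueError("empty metric expression")

def compile_query(metric_name, role, user_region):
    """Return parameterized SQL with RBAC checked and the RLS filter applied."""
    if metric_name not in ROLE_METRICS.get(role, set()):
        raise PermissionError(f"role {role!r} may not query {metric_name!r}")
    metric = next(m for m in METRICS if m.name == metric_name)
    sql = f"SELECT {metric.expression} FROM {metric.table} WHERE {metric.rls_column} = ?"
    return sql, (user_region,)

validate(METRICS)
sql, params = compile_query("revenue", "analyst", "EMEA")
print(sql, params)
```

Because the access rules are applied where the query is compiled, a dashboard, a notebook, and an AI assistant would all inherit the same restrictions without reimplementing them.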

"A semantic layer is therefore not a nice-to-have; it's the backbone that makes multi-BI, AI, and data mesh architectures trustworthy."

Coalesce [13]

With organizations increasingly adopting multiple BI tools - such as Tableau for sales and Power BI for finance - there’s a shift toward "Universal" or "Headless" semantic layers. These layers maintain a single source of truth across platforms, avoiding vendor lock-in and supporting diverse users, from BI tools to AI agents.

Governance in Self-Service Analytics

Governance also plays a crucial role in enabling confident self-service analytics. By embedding rules and controls directly into workflows, governance allows users to access data quickly and securely without worrying about compliance. Instead of creating bottlenecks, clear guardrails encourage faster insights.

Fragmented data access is a common challenge, but companies like NTT DATA have shown how to overcome it. In 2025, they used Azure AI Foundry and Microsoft Fabric Data Agent to empower non-technical employees in HR and operations to explore enterprise data through conversational queries. This reduced time-to-market for data initiatives by 50% and gave employees intuitive, self-service access to insights [17]. The key? "Secure-by-default" tools that respect user permissions through managed identities and "on-behalf-of" authentication.

Governance can also drive meaningful outcomes in specific contexts. For example, Hillsborough Community College in Florida used SAS analytics to identify students at risk of dropping out and provided targeted resources. By governing their data for this purpose, they achieved a 109% increase in student completion rates [14]. Their governance framework protected sensitive student data while empowering staff to act effectively on insights.

"Agents are only as capable as the tools you give them - and only as trustworthy as the governance behind those tools."

Microsoft Azure [17]

The best implementations treat every self-service tool or API as a product, complete with clear input/output schemas and error handling. Additionally, they document AI models with model cards, detailing accuracy, fairness, and biases. With 77% of consumers stating they would stop doing business with a company that misuses or loses their data [15], transparent governance is not just a best practice - it’s essential for maintaining trust.
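
Treating a self-service tool as a product might look something like the following sketch. The `ChurnRequest` and `ChurnResponse` types, the `churn_risk_tool` function, and the three-month rule are invented for illustration. What matters is the shape: typed inputs, typed outputs, and errors returned as structured data that a person or an AI agent can act on.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ChurnRequest:
    """Input schema: what a caller must provide."""
    customer_id: str
    months_inactive: int

@dataclass(frozen=True)
class ChurnResponse:
    """Output schema: either a result or a descriptive error."""
    ok: bool
    risk: str = ""
    error: str = ""

def churn_risk_tool(request: ChurnRequest) -> ChurnResponse:
    """Hypothetical tool with explicit validation and error handling."""
    if not request.customer_id:
        return ChurnResponse(ok=False, error="customer_id is required")
    if request.months_inactive < 0:
        return ChurnResponse(ok=False, error="months_inactive must be >= 0")
    # Placeholder rule standing in for a real model.
    risk = "high" if request.months_inactive >= 3 else "low"
    return ChurnResponse(ok=True, risk=risk)

print(churn_risk_tool(ChurnRequest("C-1001", 4)))
print(churn_risk_tool(ChurnRequest("", 1)))
```

Returning errors in the response, instead of raising them, gives an agent something concrete to reason about when a call fails.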

How to Implement AI Analytics Trends with Querio

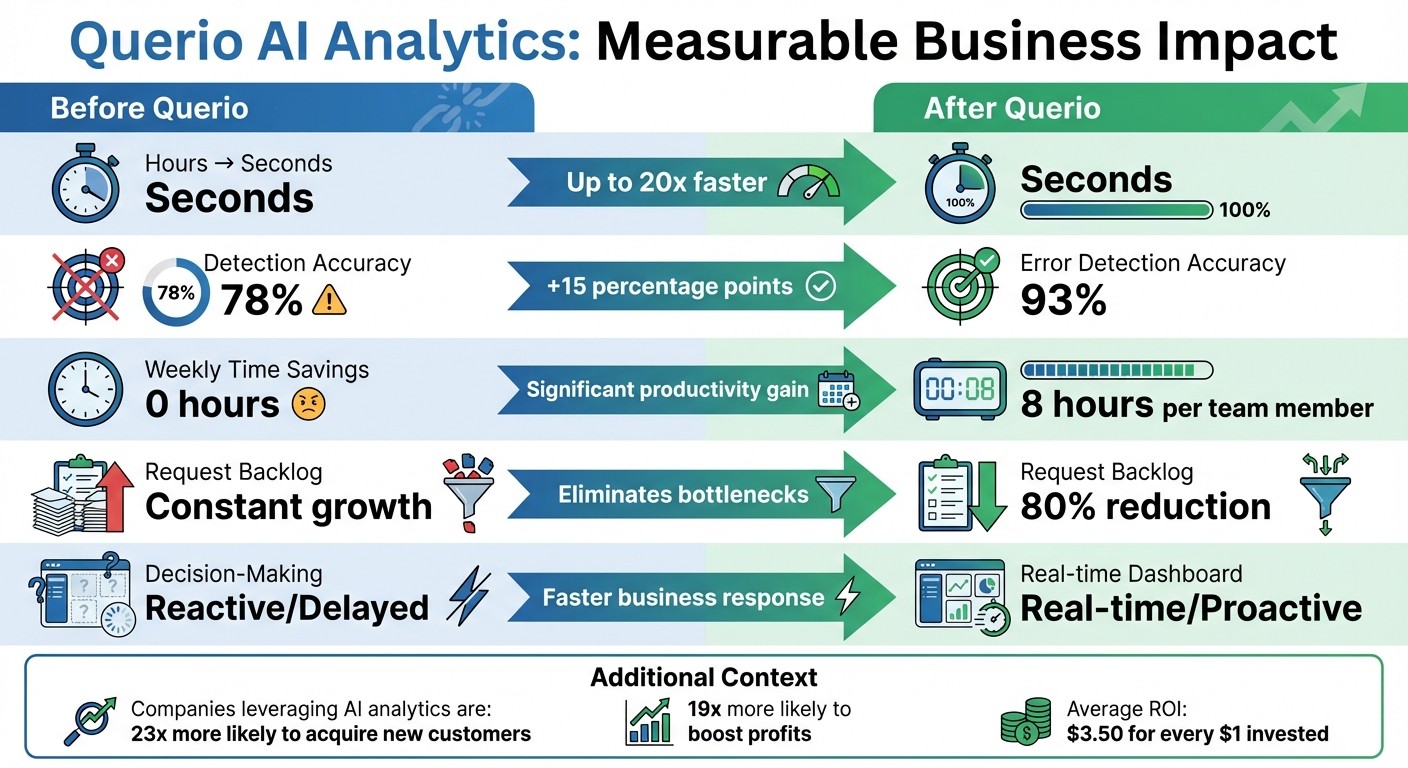

Querio AI Analytics Performance Metrics: Before and After Comparison

Implementing AI analytics effectively with Querio starts with a strong foundation in data governance. Instead of rushing to deploy advanced models on shaky infrastructure, focus on building a reliable data framework. Organizations that prioritize data quality, governance, and semantic consistency from the beginning tend to see quicker results and avoid expensive rework later on [18]. This approach lays the groundwork for a scalable, phased rollout, as outlined below.

Step-by-Step Adoption Strategy

The best way to implement Querio is through a phased approach that gradually builds capabilities. Start by defining your business metrics, table relationships, and terminology within Querio's semantic layer. This ensures consistency across teams, reducing discrepancies and speeding up the process of generating insights.

Once your semantic layer is set, introduce natural language querying tools and AI notebooks for self-service analytics. Querio's NL2SQL technology allows non-technical users to ask questions in plain English while connecting directly to live data warehouses like Snowflake, BigQuery, or Postgres. This expands access without sacrificing accuracy, as every response is backed by real SQL that data teams can review and validate.

The final phase involves scaling to dashboards and embedded analytics. By this stage, your governance framework is well-established, ensuring that insights delivered to executives or embedded in customer-facing applications remain accurate and reliable. This phased strategy aligns with expert recommendations, focusing on human-centered design to enhance decision-making while maintaining oversight of AI-driven processes [18].

Measuring Success with Querio

Each phase builds on the last, making it easier to track progress and measure success. Clear benchmarks help validate the effectiveness of your transition to AI-powered analytics. Companies using Querio have reported impressive results:

Data reporting speeds up to 20 times faster than traditional methods.

Teams save up to 8 hours per week per member by accessing insights independently.

Error detection accuracy improves from 78% to 93% with Querio's AI-powered validation.

Data teams experience an 80% reduction in request backlogs, allowing them to focus on strategic tasks instead of routine queries.

| Metric | Before Querio | After Querio Adoption | Improvement |
|---|---|---|---|
| Query Speed | Hours → Seconds | Seconds | Up to 20x faster |
| Error Detection | 78% accuracy | 93% accuracy | +15 percentage points |
| Weekly Time Savings | 0 hours | 8 hours per member | Significant productivity gain |
| Request Backlog | Constant growth | 80% reduction | Eliminates bottlenecks |
| Decision-Making | Reactive/Delayed | Real-time/Proactive | Faster business response |

These gains align with broader trends in AI analytics. Research shows that companies leveraging AI-powered analytics are 23 times more likely to acquire new customers and 19 times more likely to boost profits. On average, they see a return of $3.50 for every $1 invested in AI.
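
When reading before-and-after figures like those above, it helps to separate percentage points from relative change. The short sketch below works through the error-detection numbers and scales the weekly time savings to a team. The team size of 10 and the 48 working weeks are assumptions chosen only to make the arithmetic concrete.

```python
def percentage_point_gain(before_pct, after_pct):
    """Absolute difference between two percentages."""
    return after_pct - before_pct

def relative_gain_pct(before_pct, after_pct):
    """Improvement expressed relative to the starting value."""
    return (after_pct - before_pct) / before_pct * 100

# Error detection accuracy reported above: 78% before, 93% after.
points = percentage_point_gain(78, 93)
relative = round(relative_gain_pct(78, 93), 1)

# Time savings: 8 hours per member per week (reported above),
# scaled to an assumed team of 10 over an assumed 48 working weeks.
hours_per_member_per_week = 8
team_size = 10        # assumption
working_weeks = 48    # assumption
team_hours_per_year = hours_per_member_per_week * team_size * working_weeks

print(f"Accuracy gain: {points} percentage points ({relative}% relative)")
print(f"Team hours saved per year: {team_hours_per_year}")
```

The same 15-point gain corresponds to roughly a 19% relative improvement, which is why it matters which of the two a report is quoting.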

Conclusion: Preparing for AI-Driven Business Intelligence

Key Takeaways

AI-driven analytics isn't about replacing human decision-making - it’s about enhancing it. While 88% of organizations report regular AI use, only 39% see a meaningful impact on their bottom line [19]. The companies that succeed are those that rethink workflows, implement scalable governance structures, and focus on innovation rather than just cutting costs.

AI agents are already delivering impressive results, speeding up processes by 30%–50% and reducing low-value tasks by 25%–40% [21]. High-performing organizations are three times more likely to have leadership directly involved in AI initiatives [19]. These companies treat governance as a strategic priority, redesigning their operating models to integrate AI capabilities rather than tacking AI onto outdated systems.

Technologies like natural language interfaces, predictive analytics, and semantic governance layers are already reshaping business intelligence. The real question isn’t whether AI will transform analytics - it’s whether your organization will lead that transformation or lag behind.

What's Next for AI in Business

As organizations continue to leverage AI, the next evolution in business intelligence is already on the horizon.

Agentic AI is poised to be the next big leap. By 2027, 67% of executives expect AI agents to perform independent actions within their organizations [20]. These aren’t just basic chatbots - they’re advanced systems capable of planning, executing multi-step workflows, and making autonomous decisions within established guidelines. Already, 62% of companies are testing or scaling these capabilities [19].
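
One way to picture "autonomous decisions within established guidelines" is a policy table that caps how far an agent may go for each type of action. The sketch below is a hypothetical illustration: the `Autonomy` levels, the `POLICY` entries, and the `decide` function are assumptions, not a framework described by the cited sources.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    SUGGEST_ONLY = 1        # agent may only recommend
    ACT_WITH_APPROVAL = 2   # agent acts after a human signs off
    ACT_INDEPENDENTLY = 3   # agent may act on its own

# Hypothetical policy: maximum autonomy granted per action type.
POLICY = {
    "send_report": Autonomy.ACT_INDEPENDENTLY,
    "adjust_ad_budget": Autonomy.ACT_WITH_APPROVAL,
    "change_pricing": Autonomy.SUGGEST_ONLY,
}

def decide(action, approved_by_human=False):
    """Return what the agent is allowed to do for a given action."""
    # Unknown actions fall back to the most restrictive level.
    level = POLICY.get(action, Autonomy.SUGGEST_ONLY)
    if level == Autonomy.ACT_INDEPENDENTLY:
        return "execute"
    if level == Autonomy.ACT_WITH_APPROVAL:
        return "execute" if approved_by_human else "await_approval"
    return "suggest"

for action in ("send_report", "adjust_ad_budget", "change_pricing"):
    print(action, "->", decide(action))
```

Defaulting unknown actions to the most restrictive level means a new capability has to be granted explicitly before an agent can use it on its own.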

The companies that will thrive in this new era are those that invest now in building the right foundations. This means creating graduated autonomy frameworks, adopting shared AI platforms with centralized governance, and training teams to work alongside AI as orchestrators rather than competitors. As BCG highlights:

"The greatest barrier to scaling agentic AI isn't technology, it's trust" [11].

Establishing trust starts with tools like Querio - platforms designed to provide AI-powered insights while ensuring transparency, consistency, and control over your analytics processes.

FAQs

How can AI analytics help businesses make better decisions?

AI analytics empowers businesses to make quicker and smarter decisions by providing real-time, precise insights in a straightforward format. With natural language queries and predictive models, AI breaks down complex data analysis, making it easier for everyone to understand - even those without technical expertise. This not only speeds up decision-making but also streamlines overall operations.

AI-driven tools bring consistency by standardizing metrics, spotting anomalies, and predicting trends. These features allow businesses to move from reacting to problems to anticipating them, improving operations and staying ahead of the competition.

Why is data governance essential for effective AI analytics?

Data governance plays a crucial role in ensuring that AI analytics produce accurate, reliable, and actionable insights. When organizations maintain clean, consistent, and secure data, they can confidently rely on AI-generated results to make informed, data-driven decisions.

A well-structured governance framework helps standardize metrics and definitions across the organization, minimizing errors and inconsistencies. For example, establishing a single, unified definition for terms like "Total Revenue" ensures everyone is working with the same data and interpretations. This alignment not only speeds up analysis but also improves its precision. On top of that, practices like implementing security protocols and version control safeguard data integrity, prevent unauthorized access, and support compliance efforts - ultimately fostering trust in AI-powered processes.

Without strong data governance, the risk of misleading AI analytics grows, leading to flawed decisions and diminishing the value of data-driven insights.

How can non-technical users easily access insights using natural language in AI analytics?

Non-technical users can tap into the power of natural language processing (NLP) in AI analytics to get insights quickly and easily - no technical know-how required. Instead of wrestling with complicated query languages, they can simply type or ask questions in plain English, like "What were our sales last quarter?" or "Which products are performing best?" The result? Clear, actionable answers delivered instantly.

NLP tools also help reduce dependence on IT teams by automating tasks such as generating reports or analyzing unstructured data like customer reviews or social media posts. These tools understand human language, transform it into queries, and provide real-time insights. This means faster decisions and better outcomes. By using NLP, businesses can encourage teamwork, give employees at all levels the tools they need, and adapt more effectively to market shifts.