Why AI Is the Future of Data Analysis

Business Intelligence

Feb 3, 2026

How AI speeds up data analysis, improves accuracy, enables natural-language queries, and scales analytics across industries with transparent warehouse-backed tools.

AI is transforming data analysis by automating tedious tasks, enabling real-time insights, and improving accuracy. Here's why it matters:

Speed: AI processes data in seconds, replacing weeks of manual work.

Accuracy: Minimizes human error and ensures consistent metrics.

Accessibility: Natural language analytics interfaces let anyone ask questions and get answers without coding.

Scalability: Handles massive datasets as global data volumes grow toward a projected 181 zettabytes by 2025.

Forecasting: Predictive analytics improves outcomes with 24-28% better accuracy.

AI tools are already revolutionizing industries like finance, e-commerce, and healthcare by streamlining audits, optimizing inventory, and enhancing diagnostics. Platforms like Querio ensure transparency by showing the SQL or Python behind every query, empowering businesses to trust and verify AI-driven insights. The shift to AI in data analysis is not just a trend - it’s the new standard for smarter, faster decision-making.

Main Benefits of AI in Data Analysis

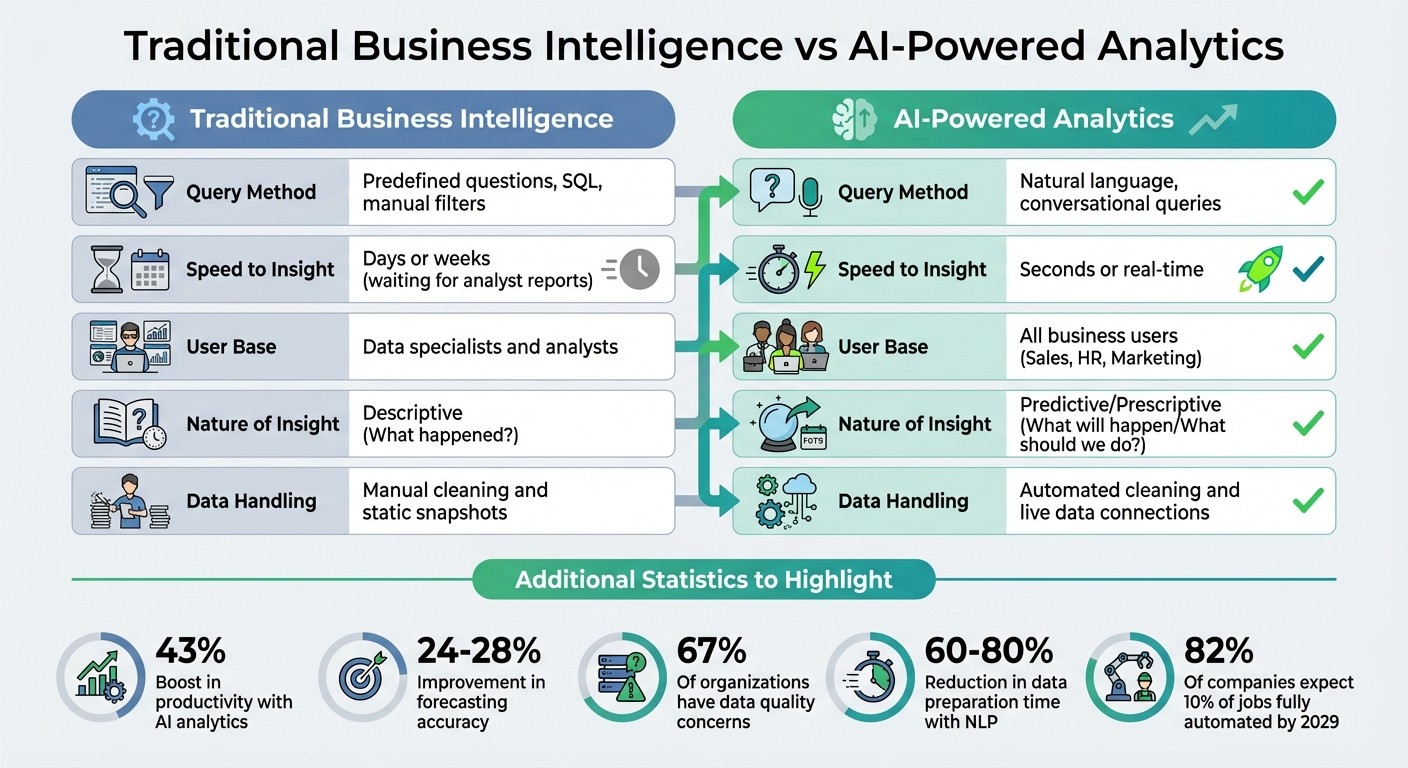

Traditional BI vs AI-Powered Analytics: Key Differences

AI is reshaping how businesses derive insights from their data. By replacing slow, manual processes with automated systems, companies are gaining an edge in three critical areas: speed, accuracy, and scalability. In fact, real-world users have reported a 43% boost in productivity and a 24–28% improvement in forecasting accuracy [2].

Faster Processing and Results

AI takes over repetitive tasks like ETL (Extract, Transform, Load), enabling businesses to get insights almost instantly. Instead of analyzing historical data in batches, AI allows near real-time monitoring, so companies can respond to live data as it streams in [8]. Tools like Natural Language Processing (NLP) make it easy for users to extract insights without needing coding skills, adding agility to the process [8][1].

Automated Machine Learning (AutoML) speeds up predictive modeling by testing and refining algorithms automatically, cutting down the time needed for iterations [2]. AI also works around the clock, identifying anomalies like fraud or inventory issues as they happen [1]. As the Intuit Blog Team puts it:

"AI handles the repetitive or time-consuming steps so analysts and data scientists can put their efforts more into framing the right questions or applying judgment to the results" [2].

For a quick start, leverage AI tools for Exploratory Data Analysis (EDA) to summarize datasets and identify trends worth exploring [2]. Automate recurring reports by having AI generate updated metrics and draft simple summaries for regular updates, saving time and effort [2]. These efficiencies not only speed up processes but also enhance the precision of data operations.
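As a rough illustration of the first-pass summary an EDA assistant might produce, the sketch below profiles a small table using only Python's standard library. The column names and sample rows are invented for illustration, and `profile` is a hypothetical helper rather than part of any product mentioned here.

```python
import statistics

# Hypothetical sample rows; a real dataset would come from a file or warehouse.
rows = [
    {"region": "East", "units": 120, "revenue": 2400.0},
    {"region": "West", "units": 95, "revenue": 1900.0},
    {"region": "East", "units": 130, "revenue": None},  # missing value
    {"region": "South", "units": 40, "revenue": 800.0},
]

def profile(rows):
    """Return missing and distinct counts per column, plus basic stats for numeric columns."""
    summary = {}
    for col in rows[0]:
        values = [r[col] for r in rows]
        present = [v for v in values if v is not None]
        info = {"missing": len(values) - len(present), "distinct": len(set(present))}
        if present and all(isinstance(v, (int, float)) for v in present):
            info["min"] = min(present)
            info["max"] = max(present)
            info["mean"] = statistics.mean(present)
        summary[col] = info
    return summary

summary = profile(rows)
for col, info in summary.items():
    print(col, info)
```

A summary like this is only a starting point: it shows where values are missing and which columns vary enough to be worth a closer look.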

Better Accuracy and Consistency

Human errors in data handling can be costly, and 67% of organizations admit they have concerns about their data quality [4]. AI-powered platforms can reduce these issues by using a shared semantic layer - a system that ensures terms like "revenue" or "fiscal year" are defined consistently across the organization. This cuts down on confusion and helps finance and sales teams work from the same metrics [6][3].

AI plays a complementary role to human judgment. Acting as a "co-analyst", it handles initial data cleaning and retrieval, while experts validate and refine the results to keep insights aligned with business goals [6][2]. Mark Porter, CTO at dbt Labs, highlights the importance of this partnership:

"AI doesn't know its data better than any expert or random social media user does. The quality of data - particularly, the quality of its source and whether we can trust that source - will be key" [3].

To ensure accuracy, adopt a hybrid review process: let AI handle the first round of data cleaning and quality checks, and then have humans review the more complex or critical results [9][10]. A centralized catalog for business logic and metadata further ensures consistent and reliable outcomes tailored to your organization’s needs [6].
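The hybrid review described above can be sketched as a simple triage step: automated checks pass clean records and route anything questionable to a person. The field names, the rules, and the `REVIEW_THRESHOLD` value below are assumptions chosen for illustration, and plain rules stand in for whatever checks an AI tool would actually run.

```python
# Hypothetical order records; field names are invented for illustration.
records = [
    {"id": 1, "amount": 250.0, "currency": "USD"},
    {"id": 2, "amount": -40.0, "currency": "USD"},     # negative amount
    {"id": 3, "amount": 980000.0, "currency": "USD"},  # unusually large
    {"id": 4, "amount": 75.5, "currency": None},       # missing currency
]

REVIEW_THRESHOLD = 100_000  # assumed cutoff above which a person should look

def triage(record):
    """Return a list of issues; an empty list means the record passes automatically."""
    issues = []
    if record["currency"] is None:
        issues.append("missing currency")
    if record["amount"] < 0:
        issues.append("negative amount")
    if record["amount"] > REVIEW_THRESHOLD:
        issues.append("amount above review threshold")
    return issues

auto_passed = []
needs_review = {}
for rec in records:
    issues = triage(rec)
    if issues:
        needs_review[rec["id"]] = issues
    else:
        auto_passed.append(rec["id"])

print("auto-passed:", auto_passed)
print("needs human review:", needs_review)
```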

With improved data quality, teams can make better decisions and trust the insights at every level of the business.

Scalable Analytics for All Users

AI is breaking down barriers that once limited data analysis to technical experts. By replacing traditional methods - like SQL queries and manual filters - with natural language interfaces, AI makes data accessible to everyone. Now, sales reps, HR managers, and marketers can get insights in seconds without needing any coding knowledge.

| Feature | Traditional Business Intelligence | AI-Powered Analytics |
|---|---|---|
| Query Method | Predefined questions, SQL, manual filters | Natural language, conversational queries |
| Speed to Insight | Days or weeks (waiting for analyst reports) | Seconds or real-time |
| User Base | Data specialists and analysts | All business users (Sales, HR, Marketing) |
| Nature of Insight | Descriptive (What happened?) | Predictive/Prescriptive (What will happen/What should we do?) |
| Data Handling | Manual cleaning and static snapshots | Automated cleaning and live data connections |

Olga Kupriyanova, Director of AI and Data Engineering at ISG, explains how AI solves the scaling challenges of big data:

"AI is hugely beneficial for fixing some of the core challenges that have existed since the onset of big data analytics... Manually, this is an impossible task" [7].

To get started, focus on pilot projects in areas like sales or marketing, where the impact of AI analytics can be quickly demonstrated [6][2]. Use a unified governance framework to ensure that key metrics like "revenue" are defined consistently across the organization [6]. With 82% of companies expecting 10% of jobs to be fully automated by 2029 [4], the urgency to adopt scalable AI analytics is clear.

AI Methods That Are Changing Data Analysis

Three advanced AI techniques are reshaping how businesses work with data: Natural Language Processing (NLP) transforms plain English into actionable queries, predictive analytics highlights future trends before they unfold, and automated pattern discovery uncovers hidden relationships in massive datasets. Together, these methods deliver faster and smarter insights, building on AI's foundational capabilities and pushing data analysis into new territory.

Natural Language Processing for Data Queries

Natural Language Processing (NLP) bridges the gap between human language and complex data systems. Instead of writing SQL or Python, you can ask questions like, “What were our top-selling products last quarter?” The AI instantly translates that into a functional query [12][13]. What’s more, NLP retains context, so follow-up questions like, “Now break that down by region,” are seamlessly processed and executed in real time [12][14].
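To make the idea of retained context concrete, here is a deliberately simplified sketch. Keyword matching stands in for the language model a real system would use, and the `sales` table, its `units` and `period` columns, and the helper names are all hypothetical. The point is only that a follow-up question modifies the previous query specification instead of starting from scratch.

```python
import re

KNOWN_DIMENSIONS = {"product", "region"}  # assumed columns of a hypothetical sales table

def interpret(question, context=None):
    """Toy interpreter: keyword matching stands in for a language model.
    Passing the previous spec as context is what lets follow-ups build on it."""
    spec = {
        "group_by": list(context["group_by"]) if context else [],
        "period": context["period"] if context else None,
    }
    text = question.lower()
    if "last quarter" in text:
        spec["period"] = "last_quarter"
    for word in re.findall(r"[a-z]+", text):
        dim = word.rstrip("s")  # crude singular form: "products" -> "product"
        if dim in KNOWN_DIMENSIONS and dim not in spec["group_by"]:
            spec["group_by"].append(dim)
    return spec

def to_sql(spec):
    """Render the spec as parameterized SQL against the hypothetical sales table."""
    dims = ", ".join(spec["group_by"])
    sql = "SELECT " + (dims + ", " if dims else "") + "SUM(units) AS units_sold FROM sales"
    if spec["period"]:
        sql += " WHERE period = :period"
    if dims:
        sql += " GROUP BY " + dims
    return sql + " ORDER BY units_sold DESC"

first = interpret("What were our top-selling products last quarter?")
follow_up = interpret("Now break that down by region", context=first)
first_sql = to_sql(first)
follow_up_sql = to_sql(follow_up)
print(first_sql)
print(follow_up_sql)
```

The second query keeps the period filter and the product grouping from the first question and adds the region grouping, which is the behavior the follow-up example describes.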

Natural Language Generation (NLG) takes it a step further by converting raw data into clear summaries, making trends easier to understand [14][2]. Platforms that integrate NLP reduce data preparation time by 60% to 80% [14], and teams using AI-powered analytics report a 43% boost in productivity [2].

A practical way to start is by using NLP for Exploratory Data Analysis (EDA). This allows you to quickly summarize new datasets before diving deeper [12][2]. To ensure accuracy, consider implementing a semantic layer, which acts as a centralized catalog defining key business terms like “revenue” or “active user.” This avoids confusion over terms such as “fiscal year” versus “calendar year” [6][3].

Predictive Analytics and Outlier Detection

AI doesn’t just process existing data - it predicts what’s coming next. Predictive analytics shifts the focus from “What happened?” to “What’s likely to happen?” With machine learning models, businesses can forecast outcomes with 24% to 28% greater accuracy than traditional methods [2]. This helps organizations anticipate everything from demand spikes and equipment failures to market shifts.

Kishan Chetan, Executive Vice President at Salesforce Service Cloud, explains the power of AI in this space:

"AI agents are powered by LLMs. That means that the whole interaction is far more conversational, and its reasoning goes well beyond a rule-based system" [1].

Real-time applications are already making waves. Logistics companies, for example, use streaming data to adjust for weather delays, road closures, and traffic patterns instantly [15]. Finance teams can detect unusual spending patterns before they escalate into audit problems. Unlike traditional analytics, AI doesn’t wait for queries - it proactively identifies opportunities and risks.
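As one simple way to flag unusual spending, the sketch below uses a modified z-score based on the median absolute deviation. This is a basic statistical rule swapped in for illustration, not the machine learning models the cited sources describe, and the daily spend figures and the 3.5 threshold are assumptions.

```python
import statistics

def flag_outliers(values, threshold=3.5):
    """Return indexes whose modified z-score (based on the median absolute
    deviation) exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread to compare against
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - median) / mad) > threshold
    ]

# Hypothetical daily spend in dollars; one day is far outside the usual range.
daily_spend = [1020, 980, 1010, 995, 1005, 4800, 990, 1000]
outliers = flag_outliers(daily_spend)
print("unusual days (by index):", outliers)
```

The median-based score is used here because a single extreme value inflates the ordinary standard deviation, which can hide the very point you are trying to catch in a short series.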

For businesses looking to adopt predictive analytics, starting with pilot projects in areas like sales forecasting or supply chain monitoring can quickly showcase its value [6][2]. However, keep humans in the loop - AI should act as a “co-analyst,” handling repetitive tasks while leaving strategic decisions to human judgment [6][2].

Automated Pattern Discovery and Insights

Automated pattern discovery is another game-changer, helping businesses uncover hidden relationships in their data. Using techniques like k-means clustering and association rule mining, AI identifies patterns without requiring manual input [16][17]. Advanced models like deep learning and neural networks can even detect complex dependencies across diverse data types - text, audio, video, and numerical data - that might take analysts months to uncover [16][17][18].
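For readers who want to see what k-means clustering does mechanically, here is a minimal sketch in plain Python. The customer data is invented, centroids are initialized from the first k points to keep the run deterministic (production libraries use smarter initialization), and features are left unscaled for brevity.

```python
import math

def kmeans(points, k, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then move each
    centroid to the mean of its points. Centroids start at the first k points."""
    centroids = [points[i] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iterations):
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids

# Hypothetical customers: (orders per year, average order value in dollars)
customers = [(2, 30), (25, 210), (3, 25), (4, 35), (22, 190), (27, 200)]
labels, centroids = kmeans(customers, k=2)
print(labels)
print(centroids)
```

With this sample the algorithm separates occasional low-spend customers from frequent high-spend ones without being told that those two groups exist.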

This capability is especially useful for exploring data without a specific hypothesis. By scanning vast datasets, AI surfaces unexpected correlations that traditional analysis might miss. It also excels at integrating fragmented data systems, automating the tedious task of hunting for insights [16].
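Association rule mining, mentioned above, rests on two simple measures: support (how often items appear together) and confidence (how often the second item appears when the first does). The sketch below computes both for item pairs in a handful of invented shopping baskets. It is an illustration of the measures, not a full mining algorithm such as Apriori.

```python
from collections import Counter
from itertools import combinations

# Hypothetical shopping baskets.
baskets = [
    {"laptop", "mouse", "bag"},
    {"laptop", "mouse"},
    {"mouse", "keyboard"},
    {"laptop", "bag"},
    {"laptop", "mouse", "keyboard"},
]

item_counts = Counter(item for b in baskets for item in b)
pair_counts = Counter(
    pair for b in baskets for pair in combinations(sorted(b), 2)
)

def rule_stats(antecedent, consequent):
    """Support and confidence for the rule antecedent -> consequent."""
    pair = tuple(sorted((antecedent, consequent)))
    support = pair_counts[pair] / len(baskets)
    confidence = pair_counts[pair] / item_counts[antecedent]
    return support, confidence

support, confidence = rule_stats("laptop", "mouse")
print(f"laptop -> mouse: support={support:.2f}, confidence={confidence:.2f}")
```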

Olga Kupriyanova, Director of AI and Data Engineering at ISG, highlights how AI accelerates this process:

"The ability to leverage code generation to help with data manipulation tasks can rapidly improve the time spent 'munging' data so it can be analytically useful" [7].

Whether you’re exploring new datasets or searching for overlooked insights, automated pattern discovery can reveal opportunities that might otherwise stay hidden.

How Industries Use AI for Data Analysis

The AI data analytics revolution is making waves across industries like finance, e-commerce, and healthcare. By automating repetitive tasks, identifying issues before they escalate, and uncovering insights that would take humans weeks to find, AI is reshaping how businesses operate. The results? Faster audits, smarter inventory management, and better patient care, to name a few.

Finance: Streamlined Audits and Smarter Reporting

Gone are the days when finance teams relied solely on spreadsheets and manual checks. AI is now tackling complex tasks like automating the accounting close process and drafting internal risk reports without constant human oversight [19]. It’s also being used for invoice-to-contract compliance, where AI systems analyze contracts and invoices to ensure terms like tiered pricing and rebates are correctly applied - catching errors that often slip through manual reviews [19].
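The core of an invoice-to-contract check can be sketched in a few lines once the contract terms are in structured form. The tier schedule, invoice fields, and helper names below are hypothetical, and the sketch assumes the discounted price applies to the whole order once a tier is reached. In practice, extracting the terms from contract text is the harder step and is not shown here.

```python
# Hypothetical contract: unit price drops as order volume grows.
# Each tier is (minimum quantity, unit price); a tier applies to the whole order.
CONTRACT_TIERS = [(0, 10.00), (100, 9.00), (500, 8.00)]

def contract_unit_price(quantity):
    """Return the unit price of the highest tier the quantity qualifies for."""
    price = CONTRACT_TIERS[0][1]
    for min_qty, tier_price in CONTRACT_TIERS:
        if quantity >= min_qty:
            price = tier_price
    return price

def overbilled_amount(invoice):
    """Return billed total minus contract total (positive means overcharged)."""
    expected = contract_unit_price(invoice["quantity"]) * invoice["quantity"]
    return round(invoice["billed_total"] - expected, 2)

invoices = [
    {"id": "INV-1", "quantity": 50, "billed_total": 500.00},
    {"id": "INV-2", "quantity": 120, "billed_total": 1200.00},  # billed at the base price
    {"id": "INV-3", "quantity": 600, "billed_total": 4800.00},
]

discrepancies = {}
for inv in invoices:
    diff = overbilled_amount(inv)
    if diff != 0:
        discrepancies[inv["id"]] = diff

print(discrepancies)
```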

AI tools for data analysis save finance professionals about 30% of their time by automating tasks like budget variance analysis [19]. For example, one institution used AI for cost categorization and anomaly detection, cutting costs by 10% across a multibillion-dollar budget [19]. In industries where contract compliance is critical, AI has identified value leakage equaling up to 4% of total spending [19].
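Budget variance analysis itself is straightforward arithmetic, which is part of why it automates well. A minimal sketch, with invented budget lines and an assumed 5% tolerance for flagging:

```python
# Hypothetical budget vs. actual figures in dollars.
budget = {"Marketing": 50_000, "Travel": 12_000, "Software": 30_000}
actual = {"Marketing": 58_500, "Travel": 11_700, "Software": 30_300}

TOLERANCE = 0.05  # assumed: flag lines more than 5% off budget

def variance_report(budget, actual, tolerance=TOLERANCE):
    """Compute absolute and relative variance per budget line and flag large ones."""
    report = {}
    for line, planned in budget.items():
        diff = actual[line] - planned
        pct = diff / planned
        report[line] = {
            "variance": diff,
            "pct": round(pct, 4),
            "flag": abs(pct) > tolerance,
        }
    return report

report = variance_report(budget, actual)
flagged = [line for line, row in report.items() if row["flag"]]
print(report)
print("needs explanation:", flagged)
```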

Adoption is picking up speed. By 2025, 44% of CFOs are expected to use generative AI for at least five use cases, a significant jump from just 7% in 2024 [19]. Additionally, 65% of organizations plan to increase their investment in generative AI during the same period [19]. According to McKinsey & Company:

"The opportunity is real, but capturing it requires moving beyond experimentation to disciplined execution anchored in business priorities" [19].

For finance teams, the key is to start small. Instead of waiting for perfect data, they should focus on AI use cases that work with their current data while gradually improving their data foundations. Standardizing workflows is also essential - applying AI to fragmented processes can create more problems than it solves [19].

E-commerce is another sector where AI is driving big changes, particularly in inventory and customer engagement.

E-Commerce: Smarter Inventory and Customer Engagement

In e-commerce, AI is shifting businesses from reactive problem-solving to proactive planning. AI systems continuously fine-tune assortments, adjust prices, and refine promotions, eliminating the need for static weekly decision cycles [21]. These tools can also detect out-of-stock risks at specific locations and coordinate restocking or inventory rebalancing [21].
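One simple way to express out-of-stock risk is days of cover: stock on hand divided by average daily sales, compared against the restocking lead time. The sketch below applies that rule to invented per-location data. A production system would forecast demand rather than rely on a flat average, so treat this as an illustration of the logic only.

```python
# Hypothetical per-location stock data for a single SKU.
stock = [
    {"location": "Austin", "on_hand": 40, "avg_daily_sales": 10, "lead_time_days": 5},
    {"location": "Denver", "on_hand": 300, "avg_daily_sales": 12, "lead_time_days": 5},
    {"location": "Boston", "on_hand": 18, "avg_daily_sales": 3, "lead_time_days": 7},
]

def days_of_cover(item):
    """How many days current stock lasts at the average sales rate."""
    if item["avg_daily_sales"] == 0:
        return float("inf")  # nothing is selling, so stock never runs out
    return item["on_hand"] / item["avg_daily_sales"]

def at_risk(item):
    """Stock runs out before a replenishment order could arrive."""
    return days_of_cover(item) < item["lead_time_days"]

risky_locations = [item["location"] for item in stock if at_risk(item)]
print("restock or rebalance:", risky_locations)
```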

Merchants often spend 40% of their time on repetitive tasks like data consolidation and spreadsheet work. AI frees up this time, allowing them to focus on strategy and customer insights [21].

Localization is another area where AI is making a difference. AI-powered tools can generate high-quality translations while adapting content for specific dialects, cultural preferences, and local payment methods, boosting customer engagement [20]. By translating into just ten key languages, brands can reach up to 90% of their global market [11]. And the cost savings are substantial - while human translators charge around $0.20 per word, AI-assisted localization offers similar quality at about half the price [20].

McKinsey & Company highlights the advantages of AI in e-commerce:

"Decision-making no longer needs to follow static weekly cycles, since AI agents can continuously tune assortments, adjust prices, refine promotions, and deliver rich, real-time insights" [21].

However, adoption isn’t widespread yet. Seventy-one percent of merchants report limited or no impact from AI merchandising tools, often due to fragmented systems and poor data quality [21]. A phased approach works best: start by piloting AI tools in a small business unit to test their effectiveness before scaling up [21].

Meanwhile, healthcare is leveraging AI to revolutionize diagnostics and treatment.

Healthcare: Smarter Diagnostics and Personalized Care

Healthcare providers are using AI to predict equipment failures and develop personalized treatment plans that improve patient outcomes. Remote Patient Monitoring (RPM) systems, powered by AI and IoT devices, track vital signs like ECG, heart rate, blood pressure, and glucose in real time. These systems provide predictive alerts, enabling early intervention for chronic conditions [23]. The market for AI in remote patient monitoring is expected to hit $4.3 billion by 2027 [23].

AI is also transforming diagnostics. Between 2019 and 2024, Mayo Clinic researchers used AI and convolutional neural networks to analyze ECG data from over 180,000 patients. Their AI system achieved an accuracy of 79.4% in detecting atrial fibrillation, even when patients were in normal sinus rhythm [24]. Deep neural networks analyzing ECG abnormalities achieved an average diagnostic performance score of 0.97 (AUC), outperforming cardiologists, who averaged 0.78 [24].

In surgery, AI-assisted robotic systems are reducing operation times by 25% and cutting complications by 30%. For instance, AI-guided pedicle screw fixation has halved complication rates from 12.2% to 6.1% [22]. AI-driven radiotherapy schedules for brain tumor patients have improved tumor control rates by 25% [27].

Dr. Maha Farhat from Harvard Medical School underscores AI’s potential in healthcare:

"AI can help us learn new approaches to treatment and diagnostic testing for some cases that can reduce uncertainty in medicine" [25].

AI is also easing administrative burdens. Taiwan’s A+Nurse System, introduced in 2024, automates routine nursing tasks and integrates real-time medical data into workflows, cutting documentation time by 21–30% [26]. Similarly, Johnson & Johnson MedTech, in partnership with Nvidia, uses AI to analyze surgical videos in real time and automate post-operative documentation, improving surgical training and quality metrics [26].

For healthcare providers, ensuring AI tools integrate with existing Electronic Health Records (EHR) systems is crucial. This makes data actionable and helps clinicians interpret AI-generated insights to enhance decision-making [24].

Querio: AI-Powered Analytics You Can Trust

AI is reshaping how industries approach data analysis, but not every platform delivers the level of accuracy and transparency businesses need. Querio bridges the gap by connecting directly to your data warehouse and converting plain English queries into executable SQL or Python code. Each query is grounded in data, fully transparent, and built on unified business logic. This is further supported by a shared context layer, ensuring consistent metrics across your organization.

AI Agents That Generate Accurate, Transparent Queries

Querio's AI agents take natural language questions and translate them into executable code, displaying the exact SQL or Python being used. This transparency makes it easy to verify the accuracy of queries and understand the logic behind the results - critical when these insights influence key business decisions.

By combining retrieval-augmented generation (RAG) with a semantic layer, Querio ensures queries are not only accurate but also traceable back to their source data. Unlike opaque "black-box" AI systems, Querio puts users in control, giving them the final say on what gets executed in their data warehouse. This approach addresses a growing industry realization: while generative AI is powerful, it often requires rigorous quality checks to ensure reliability [29].

Shared Context Layer for Consistent Metrics

Querio's semantic layer acts as a single source of truth for business definitions and metrics. Data teams define key elements - like table joins, metrics, and glossary terms - once, and these definitions automatically apply across all analytics workflows. Whether you're querying an AI agent, creating a dashboard, or using a Python notebook, the definitions remain consistent.

The platform simplifies technical database terms like amt_ttl_pre_dsc, translating them into user-friendly names like "Gross Revenue." This makes data more accessible to non-technical users. Plus, when a metric definition is updated, the changes instantly reflect across all dashboards, reports, and AI-generated insights, eliminating "logic drift." This centralized approach can reduce data request backlogs by as much as 80% [28], freeing up engineering teams to focus on more complex tasks.
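The "define once" idea can be illustrated with a small, generic sketch. This is not Querio's implementation or API: the `METRICS` and `DIMENSIONS` dictionaries, the `orders` table, the `amt_dsc` column, and the "Net Revenue" metric are assumptions made for the demo, and an in-memory SQLite database stands in for a warehouse. Only `amt_ttl_pre_dsc` and "Gross Revenue" come from the example above.

```python
import sqlite3

# Hypothetical semantic layer: business names mapped to SQL expressions, defined once.
METRICS = {
    "Gross Revenue": "SUM(amt_ttl_pre_dsc)",
    "Net Revenue": "SUM(amt_ttl_pre_dsc - amt_dsc)",
}
DIMENSIONS = {"Region": "region"}

def build_query(metric, dimension):
    """Compose SQL from the shared definitions so every tool gets the same logic."""
    col = DIMENSIONS[dimension]
    return (
        f'SELECT {col} AS "{dimension}", {METRICS[metric]} AS "{metric}" '
        f"FROM orders GROUP BY {col} ORDER BY {col}"
    )

# In-memory demo data standing in for a warehouse table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amt_ttl_pre_dsc REAL, amt_dsc REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("East", 100.0, 10.0), ("East", 50.0, 0.0), ("West", 200.0, 20.0)],
)

sql = build_query("Gross Revenue", "Region")
print(sql)  # the generated SQL stays visible for review
gross = conn.execute(sql).fetchall()
net = conn.execute(build_query("Net Revenue", "Region")).fetchall()
print(gross)
print(net)
```

Because every caller goes through `build_query`, changing the expression behind "Net Revenue" in one place changes it for every dashboard or agent that asks for that metric.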

| Feature | Traditional BI Logic | Querio Shared Context Layer |
|---|---|---|
| Logic Storage | Locked within specific BI tools | Universal and independent of tools |
| Consistency | High risk of "logic drift" | Single source of truth for all metrics |
| AI Accuracy | Prone to errors and hallucinations | Grounded in structured logic |
| Maintenance | Manual updates across tools | "Define once" with automatic updates |
| Security | Tool-specific permissions | Inherits warehouse-level security |

By centralizing metric definitions and maintaining real-time accuracy, Querio ensures every insight is built on reliable, up-to-date data.

Direct Warehouse Connections and Embedded Dashboards

Querio connects to live data warehouses like Snowflake, BigQuery, Amazon Redshift, and ClickHouse through secure connections. Queries run against current data rather than stale extracts, so insights reflect the latest state of the warehouse, which is essential for organizations moving toward real-time analytics.

The platform also supports embedded analytics, allowing dashboards to be integrated into customer-facing or internal applications. These embedded insights are powered by Querio's transparent AI agents and validated against the shared semantic layer, ensuring that all analytics remain accurate and aligned with organizational standards.

What's Next for AI in Data Analysis

AI analytics is advancing at a breakneck pace. By 2026, it's predicted that 40% of analytics queries will rely on natural language, and the AI agents market is expected to surge from $5.1 billion in 2021 to $47.1 billion by 2030 [31]. As AI becomes increasingly integrated into analytics, the focus is shifting toward its evolving capabilities. Let’s take a closer look at how emerging AI systems are transforming analytics through self-management, improved transparency, and the integration of diverse data formats.

Self-Managing AI Systems and Agents

The next wave of AI technology won’t just answer questions - it’ll proactively ask them. These self-refining analytics systems can autonomously tackle complex problems. For instance, when faced with a question like "Why did revenue drop?" these systems break it down into smaller, iterative sub-questions to pinpoint root causes [31]. They also adapt to data changes, investigate anomalies, and verify their findings through closed-loop pipelines [32][30].
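The decomposition step can be sketched as a series of breakdowns: compute the overall change, then ask how much each region and each channel contributed. The figures, dimension names, and `change_by` helper below are invented for illustration. An agent would choose and chain these sub-questions itself, while here they are written out by hand.

```python
# Hypothetical revenue by (region, channel) for two periods.
previous = {
    ("East", "Online"): 500, ("East", "Retail"): 300,
    ("West", "Online"): 400, ("West", "Retail"): 200,
}
current = {
    ("East", "Online"): 510, ("East", "Retail"): 150,
    ("West", "Online"): 390, ("West", "Retail"): 200,
}

def change_by(dimension_index):
    """Sub-question: how much did each value of one dimension contribute to the change?"""
    totals = {}
    for key in previous:
        delta = current[key] - previous[key]
        name = key[dimension_index]
        totals[name] = totals.get(name, 0) + delta
    return totals

total_change = sum(current.values()) - sum(previous.values())
by_region = change_by(0)
by_channel = change_by(1)
worst_region = min(by_region, key=by_region.get)
worst_channel = min(by_channel, key=by_channel.get)

print("total change:", total_change)
print("by region:", by_region)
print("by channel:", by_channel)
print("largest drop:", worst_region, worst_channel)
```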

A growing trend is the adoption of multi-agent collaboration. This involves specialized AI agents working together, each handling distinct tasks such as data quality checks, metric generation, visualization, or reporting [30][33]. This "AI team" approach is proving more efficient for managing long-term, complex analytical projects than single-purpose tools. However, the current landscape shows that over 90% of AI agents lack explicit trust and safety mechanisms [33]. To address this, businesses need platforms that prioritize transparency and auditability from the outset.

"AI data analysis is evolving from a tool you use to an analyst you collaborate with. The distinction between 'using AI for analytics' and 'AI performing analytics' is collapsing." - Ash Rai, Technical Product Manager, Anomaly AI [30]

These advancements are redefining autonomous analysis. While AI’s speed and accuracy are impressive, ensuring transparency is key to maintaining trust in these systems.

Smarter, More Transparent AI

AI is becoming more sophisticated, moving beyond basic keyword recognition to semantic reasoning. This means it can understand context better, connecting terms like "high temperature" and "febrile condition" even if the exact word "fever" isn’t mentioned [35]. Such capabilities allow AI to deliver insights that align more closely with how businesses think about their data.

Transparency is also becoming a cornerstone of AI development. Gartner predicts that by 2025, 95% of data-driven decisions will involve at least partial automation [31]. For critical decisions, it’s essential to have clear AI logic. Platforms that reveal the underlying SQL or Python code behind AI-generated insights enable human teams to audit and trust the results. Without this level of transparency, AI risks becoming a "black box", potentially undermining confidence rather than building it.

Another emerging trend is FinOps for Analytics, which reflects the growing need for cost accountability. As AI query costs rise, businesses are implementing automated cost tracking, query limits, and duration caps to manage cloud spending effectively [31]. The goal is to ensure that AI systems are not only accurate but also efficient and financially responsible.
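A query limit and duration cap can be as simple as a check that runs before any query is submitted. The `QueryBudget` class below is a hypothetical guardrail, and the dollar and time limits are assumptions. Real warehouses expose their own cost estimates and quota controls, which a production setup would rely on instead.

```python
from dataclasses import dataclass

@dataclass
class QueryBudget:
    """Hypothetical guardrail: a daily spend limit plus a per-query duration cap."""
    daily_limit_usd: float
    max_seconds: float
    spent_usd: float = 0.0

    def check(self, estimated_cost_usd, estimated_seconds):
        """Return (allowed, reason) without running anything."""
        if estimated_seconds > self.max_seconds:
            return False, "exceeds duration cap"
        if self.spent_usd + estimated_cost_usd > self.daily_limit_usd:
            return False, "exceeds daily budget"
        return True, "ok"

    def record(self, actual_cost_usd):
        """Track spend after a query has run."""
        self.spent_usd += actual_cost_usd

query_budget = QueryBudget(daily_limit_usd=5.00, max_seconds=60)
first = query_budget.check(estimated_cost_usd=3.00, estimated_seconds=20)
query_budget.record(3.00)
second = query_budget.check(estimated_cost_usd=2.50, estimated_seconds=20)
third = query_budget.check(estimated_cost_usd=0.10, estimated_seconds=300)
print(first, second, third)
```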

Multi-Format Analytics and Edge Computing

AI is extending its capabilities by integrating multiple data formats and leveraging edge computing. Modern AI agents can now process structured SQL data, semi-structured JSON files, and unstructured formats like images, videos, and 3D models - all within a single workflow [30]. This multi-format approach allows businesses to combine customer feedback text, sales figures, and product images to gain a more comprehensive understanding of performance. The predictive analytics market is expected to grow from $17.49 billion in 2025 to $100.20 billion by 2034, with a compound annual growth rate of 21.40% [30].

Edge computing is playing a critical role in speeding up decision-making by processing data locally - on IoT devices, sensors, or mobile equipment - rather than relying on centralized servers. This reduces latency from hours or days to just milliseconds [30]. Such speed is vital for real-time applications in industries like manufacturing (predictive maintenance), healthcare (patient monitoring), and finance (fraud detection at the point of transaction). As 5G networks expand and AI models become lightweight enough to run on edge devices, businesses of all sizes will find data analysis more accessible and actionable [30].

"Our vision is for customers not only to see what happened, but to have a conversation with their data and receive intelligent recommendations." - Gerardo Ortiz, Head of Product and Digital Transformation, Métrica Móvil [34]

Conclusion: Using AI for Better Data Analysis

AI has reshaped how businesses handle data analysis. Tasks that once dragged on for days now wrap up in seconds. As mentioned earlier, AI-powered analytics significantly boost productivity and improve forecasting accuracy [2]. This isn't just a step forward - it's a complete overhaul of how companies operate and compete.

But it's not just about speed. AI ensures data is handled consistently, transparently, and securely. Traditional business intelligence (BI) tools often force users to choose between simplicity and control. Platforms like Querio break that mold by pairing natural language queries with auditable SQL and Python, all while keeping data securely within your warehouse.

These advancements don't just improve performance - they create a strategic edge. Companies with high data maturity are twice as likely to have the quality data needed to fully leverage AI [5]. This creates a ripple effect: better data fuels better AI, which leads to smarter decisions, which then generates even better data. The divide between companies that embrace AI-driven analytics and those that don't will only grow wider.

Looking ahead, the future belongs to teams that treat AI as a co-analyst, complementing human expertise [6][1]. AI takes care of repetitive tasks like data cleaning, pattern recognition, and initial analysis, freeing humans to focus on strategic decisions and provide business context. As Databricks aptly puts it:

"Organizations that embrace this transformation today will better position themselves to lead their industries tomorrow" [6].

Key Takeaways

AI-powered analytics is redefining traditional data analysis by combining speed, precision, and control. The shift from manual SQL to natural language queries, from static data extracts to live connections, and from scattered definitions to shared metrics in a semantic layer marks one of the most significant transformations in business intelligence in decades.

Querio's approach exemplifies this change, blending natural language queries with audit-ready code to set a new benchmark in data analysis. Teams gain self-service analytics without sacrificing accuracy or control. Whether running quick queries, creating dashboards, or embedding analytics into customer-facing tools, a unified logic ensures consistency across all applications.

The real question isn't whether to adopt AI for data analysis - it’s how fast you can integrate it into your processes. Teams already leveraging AI analytics are enjoying measurable improvements in speed, accuracy, and decision-making. These benefits grow over time, making early adoption essential for staying competitive.

FAQs

How does AI make data analysis more accurate?

AI improves data analysis by handling massive datasets quickly and efficiently while reducing the chance of human error. It can uncover patterns and trends that might go unnoticed in manual reviews, offering sharper and more accurate insights.

Using advanced algorithms and machine learning, AI evolves over time, maintaining reliability even as data becomes more complex. This allows businesses to make smarter, data-driven decisions with greater confidence.

How does Natural Language Processing (NLP) enhance AI-driven data analytics?

Natural Language Processing (NLP) makes interacting with data as simple as having a conversation. Instead of needing technical skills like SQL, users can ask questions or provide commands in plain English. NLP translates these everyday phrases into complex queries, delivering actionable insights with ease. This shift makes data analysis more intuitive and accessible to a broader audience.

NLP also shines in handling unstructured data - think emails, social media posts, or customer reviews - by quickly transforming it into useful insights. It powers features like conversational analytics, automatic report generation, and instant visualizations. For example, you can ask, "What were last quarter's sales?" and receive an accurate, immediate response. By reducing the need for technical teams, NLP not only broadens data access but also speeds up decision-making in AI-driven analytics.

How can businesses effectively use AI to improve their data analysis?

Businesses can take their data analysis to the next level by using AI-powered platforms that directly link to live data sources. This allows for quicker and more precise insights. With features like natural language processing (NLP) and predictive analytics, these tools make data easier to understand and use - even for those without a technical background. Complex tasks become simpler, and data access becomes more inclusive.

To begin, companies should prioritize integrating AI into their workflows. This involves connecting to real-time databases, establishing strong data governance practices for trustworthy insights, and offering tailored training to ensure teams can make the most of these AI tools. Platforms like Querio make this process easier by providing smooth database connections and intuitive AI features that align with U.S. standards, such as date and currency formatting.

By treating AI as a collaborative tool, businesses can automate tedious tasks, identify actionable trends, and make quicker, smarter decisions. This shift turns traditional data analysis into a dynamic advantage in today's competitive landscape.