How AI Is Transforming Data Analytics

Business Intelligence

Jan 22, 2026

AI automates data prep, delivers real-time insights, enables natural-language querying, and boosts predictive accuracy—requiring clean data and governance.

AI has made data analytics faster, smarter, and more accessible. It automates tedious tasks like cleaning and organizing data, allowing businesses to focus on decision-making. Key advancements include:

Data Preparation: AI automates cleaning and organizing data, reducing manual effort by 40% and detecting 37% more inconsistencies.

Real-Time Insights: AI processes data instantly, cutting analysis time from weeks to seconds, enabling businesses to act faster.

Pattern Recognition: AI identifies hidden trends and relationships in large datasets, delivering insights that would take humans months to uncover.

Natural Language Processing (NLP): Users can query data in plain English, making analytics accessible to non-technical teams.

Predictive Analytics: AI refines forecasts in real-time, improving accuracy by 20–40% and boosting productivity across industries.

AI-powered analytics shifts businesses from reacting to past events to anticipating future opportunities. To succeed, companies need clean data, clear governance, and a step-by-step approach to implementation.


How AI Improves Core Data Analytics Processes

The analytics process has long been slowed down by the preparation phase - the often tedious work of cleaning, organizing, and validating data before any meaningful insights can be extracted. AI is reshaping this stage entirely, automating much of the manual effort and speeding up the entire journey from raw data to actionable insights. This shift enhances processes like data cleaning, real-time analysis, and pattern recognition, making analytics not only faster but smarter.

Automating Data Preparation and Cleaning

AI systems are designed to learn data patterns, catching inconsistencies that traditional methods often overlook. While rule-based tools can flag obvious issues like duplicates or missing values, machine learning takes it further, identifying more subtle mismatches. For example, it can recognize that different abbreviations or currency formats actually represent the same value.

The results speak for themselves. AI-powered tools can detect 37% more inconsistencies compared to traditional methods and cut manual effort by as much as 40% [7]. Instead of analysts spending hours writing scripts to fix missing data, AI uses predictive imputation - analyzing patterns and relationships across datasets to intelligently fill in gaps with contextually appropriate values.
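As a rough illustration of the idea behind imputation, the sketch below fills a missing value from other rows that share a related attribute. It is a deliberately simple stand-in: a production system would typically use a trained model, whereas this example assumes a group median is good enough. The `orders` data and the `impute_by_group` helper are hypothetical.

```python
from statistics import median

def impute_by_group(rows, group_key, value_key):
    """Fill missing values with the median of rows sharing the same group."""
    # Collect the known values for each group.
    known = {}
    for row in rows:
        if row[value_key] is not None:
            known.setdefault(row[group_key], []).append(row[value_key])
    # Fall back to the overall median when a group has no known values.
    all_values = [v for values in known.values() for v in values]
    overall = median(all_values) if all_values else None
    filled = []
    for row in rows:
        value = row[value_key]
        if value is None:
            group_values = known.get(row[group_key])
            value = median(group_values) if group_values else overall
        # Copy the row so the original data stays untouched.
        filled.append({**row, value_key: value})
    return filled

# Hypothetical sample data with two gaps.
orders = [
    {"region": "EU", "order_value": 100.0},
    {"region": "EU", "order_value": 120.0},
    {"region": "EU", "order_value": None},
    {"region": "US", "order_value": 80.0},
    {"region": "US", "order_value": None},
]
filled_orders = impute_by_group(orders, "region", "order_value")
```

Here the missing EU value is filled from the other EU orders rather than from a global average, which is the "contextually appropriate" part of the idea in miniature.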

"AI agents accelerate data preparation by automatically cleaning datasets, spotting duplicate records and flagging quality issues." - Databricks [1]

Another game-changer is how AI simplifies data transformations. With natural language interfaces, users can now describe tasks in plain English - like saying, "extract currency from the price column" - and the system generates the necessary code automatically, eliminating the need for technical expertise.
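For a sense of what such generated code might look like, here is a minimal sketch for the "extract currency from the price column" request. It is not the output of any particular tool; the symbol mapping, the regular expression, and the sample `prices` are assumptions chosen for illustration. It also shows how differently formatted values can be normalized to one representation.

```python
import re

# Hypothetical mapping from currency symbols to three-letter codes.
SYMBOL_TO_CODE = {"$": "USD", "€": "EUR", "£": "GBP"}
# Matches either a known code (any letter case) or a known symbol.
CURRENCY_PATTERN = re.compile(r"(USD|EUR|GBP|[$€£])", re.IGNORECASE)

def extract_currency(price_text):
    """Return a normalized currency code found in a price string, or None."""
    match = CURRENCY_PATTERN.search(price_text)
    if match is None:
        return None
    token = match.group(1)
    # Symbols are looked up; codes are simply upper-cased.
    return SYMBOL_TO_CODE.get(token, token.upper())

# Hypothetical price column with mixed formats.
prices = ["$19.99", "24,50 €", "GBP 12", "usd 5", "7.00"]
currencies = [extract_currency(p) for p in prices]
```

Values with no recognizable currency come back as `None`, so they can be flagged for review instead of being guessed.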

Real-Time Data Processing and Insights

Once the data is clean, speed becomes the next priority. In fast-moving environments, waiting hours or days for insights can be costly. AI bridges what Databricks describes as "the gap between data availability and decision velocity" [1], enabling processing and analysis as data flows in. Traditional methods relied on scheduled batch processing, which delays insights until the next run; AI-driven pipelines analyze data as it arrives, so much of that delay disappears.

The difference is dramatic. AI has reduced the time needed for data analysis from weeks or months to mere minutes or seconds [2]. Companies that have adopted AI see their time-to-value accelerate ten-fold, while implementation costs drop by up to 80% [8]. This transformation allows businesses to react to events as they happen, rather than playing catch-up.

AI systems can query massive datasets directly in data warehouses, avoiding the delays of caching. They also monitor live data streams continuously, flagging anomalies or opportunities in real-time. This shift moves organizations from reactive reporting to proactive decision-making, where they can act on trends before competitors even notice them.
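Continuous monitoring of a live stream can be sketched with a rolling statistical check. The example below is one simple approach (a rolling z-score), not a description of how any specific product works. The class name, window size, threshold, and sample `stream` are all illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev

class RollingAnomalyDetector:
    """Flags values that deviate strongly from a window of recent values."""

    def __init__(self, window=20, threshold=3.0):
        self.values = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, value):
        """Return True if the value looks anomalous against the recent window."""
        is_anomaly = False
        if len(self.values) >= 5:  # wait for a few points before judging
            mu = mean(self.values)
            sigma = stdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Only learn from normal points so a spike does not shift the baseline.
        if not is_anomaly:
            self.values.append(value)
        return is_anomaly

detector = RollingAnomalyDetector(window=10, threshold=3.0)
stream = [100, 102, 99, 101, 100, 98, 103, 100, 250, 101]  # hypothetical readings
flags = [detector.observe(v) for v in stream]
```

Each value is evaluated as it arrives, so the spike is flagged immediately rather than in a later batch report.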

AI-Powered Pattern Recognition

While human analysts are great at asking specific questions, they’re limited in their ability to process millions of data points at once. AI fills this gap by analyzing huge datasets to uncover unexpected patterns. Neural networks and deep learning models, loosely inspired by how the brain processes information, learn relationships in complex or unstructured data [2].

The scale advantage is clear. AI can identify correlations that would take manual analysis months to uncover. For instance, it might reveal that customer churn isn’t just linked to support tickets but also to specific combinations of product usage, billing cycles, and seasonal trends. Machine learning reduces the time to discover these insights from weeks or months to seconds [2].

| Feature | Traditional Analytics | AI-Powered Analytics |
|---|---|---|
| Processing Speed | Weeks/Months | Seconds [2] |
| Pattern Discovery | Limited to predefined queries | Automated discovery of hidden patterns [1] |
| User Access | Restricted to specialists | |

For AI to deliver meaningful insights, it needs a semantic layer - a unified framework that defines business terms and context. Without this, AI might find patterns that are statistically valid but irrelevant to strategy. When configured properly, AI becomes a powerful tool for uncovering opportunities and risks hidden in the daily flood of data.

Making Analytics Accessible with Natural Language Processing

For a long time, analytics felt like a domain reserved for experts - think SQL queries, complex dashboards, and specialized BI tools. But now, natural language processing (NLP) is breaking down those barriers. It allows anyone, regardless of technical expertise, to ask questions in plain English and get quick, accurate answers.

Simplifying Data Queries for Non-Technical Users

Imagine being able to type a question like, "What was our revenue in Q3?" or "What’s the churn rate by region?" and getting an immediate response. That’s exactly what NLP makes possible. Behind the scenes, AI translates these straightforward questions into SQL or Python code, queries live data, and returns results in seconds.
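The flow from question to SQL to result can be sketched end to end. In the toy example below, a hard-coded pattern stands in for the language model that a real system would use, and the `sales` table, its columns, and the `question_to_sql` helper are assumptions made for illustration.

```python
import re
import sqlite3

def question_to_sql(question):
    """Toy translator: handles only 'revenue in Q<n>' questions."""
    match = re.search(r"revenue in q([1-4])", question.lower())
    if match is None:
        raise ValueError("question not understood by this toy translator")
    quarter = int(match.group(1))
    # Parameterized SQL keeps user input out of the query text.
    sql = "SELECT SUM(amount) FROM sales WHERE quarter = ?"
    return sql, (quarter,)

# Hypothetical in-memory data standing in for a live warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (quarter INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [(2, 500.0), (3, 1200.0), (3, 800.0), (4, 950.0)],
)

sql, params = question_to_sql("What was our revenue in Q3?")
(q3_revenue,) = conn.execute(sql, params).fetchone()
```

Returning the generated SQL alongside the answer lets a user or analyst check exactly how the number was computed.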

Here’s where NLP-powered querying shines:

It works in plain English, no technical jargon required.

Results are delivered almost instantly, enabling dynamic, conversational exploration.

It keeps track of conversational context, something traditional dashboards simply can’t do.

To better understand how NLP compares with traditional BI tools, here’s a quick breakdown:

| Feature | Traditional BI Querying | NLP-Powered Querying |
|---|---|---|
| Technical Requirement | Knowledge of SQL or specific BI tools | Plain English (Natural Language) |
| Turnaround Time | Days or weeks for analyst reports | Seconds for instant answers |
| Flexibility | Static dashboards with preset filters | Dynamic, ad-hoc conversational exploration |
| Context | Limited to the specific report view | Preserves conversational context |
| Output | Manual visualization | Automated, optimal visualization generation |

One key component that makes NLP querying reliable is a well-structured semantic layer. This layer ensures all departments operate using consistent definitions of business terms - like “revenue” or “enterprise customer.” Without this shared foundation, the AI might misinterpret terms, leading to inaccurate results. When properly set up, NLP tools can even account for unique factors like company-specific metrics, fiscal calendars, or organizational roles [1][9].

This setup doesn’t just simplify querying; it lays the groundwork for unified analytics across teams.

Improving Collaboration Across Teams

NLP doesn’t just make querying easier - it also transforms how teams collaborate. By standardizing data access, it eliminates the friction caused when different departments rely on inconsistent or siloed data. With everyone - from marketing to finance to operations - using the same data, teamwork becomes seamless.

"AI enables collaboration between technical and business teams through shared semantic understanding." - Databricks [1]

Here’s a staggering statistic: 82% of enterprises face data silos that disrupt workflows, and about 68% of organizational data goes unanalyzed [10]. NLP helps bridge this gap. Instead of analytics teams drowning in repetitive data requests, business users can self-serve, freeing up data teams to focus on maintaining the semantic layer and ensuring strategic insights are accurate.

"The most powerful application of artificial intelligence in BI is collaboration. AI functions as a co-analyst that augments human analysis for faster, more contextual decision-making." - Databricks [1]

This collaborative approach works best when organizations adopt human-in-the-loop workflows. Here’s how it plays out: AI handles the heavy lifting - retrieving and cleaning data, generating initial insights, and even creating visualizations. Meanwhile, human analysts validate these insights, ensuring they align with the bigger picture. This balance allows AI to tackle repetitive tasks while human teams focus on strategic decisions and creative problem-solving. The outcome? Faster, smarter decisions and analytics that drive real action across the board.

AI in Predictive Analytics and Forecasting

AI has transformed predictive analytics by building on advancements in data preparation and real-time insights. By tapping into machine learning and deep learning, predictive analytics takes historical data and uses it to project future trends. What sets it apart is its ability to refine these predictions in real time as new data becomes available, making forecasts increasingly accurate over time [12][13][2].

In supply chain management, AI-driven forecasting has been a game changer. It reduces errors by 20–50%, lowers lost sales by up to 65%, cuts warehousing costs by 5–10%, and trims administration expenses by 25–40% [11]. These results come from AI’s ability to combine internal historical data with external sources like weather conditions, social media activity, and foot traffic. By pulling in this additional context through APIs, AI provides a more complete picture for precise forecasting [11][5]. The market for external data is also on the rise, with an expected compound annual growth rate of 58% [11].

Predicting Trends and Market Shifts

AI models bring greater precision to forecasting market trends and consumer behavior. They process vast, unstructured datasets that traditional tools like spreadsheets simply can’t handle [11][5]. Techniques such as time-series analysis, neural networks, and data smoothing help these models reduce the influence of outliers and noise, allowing them to simulate various scenarios. As a result, AI-powered forecasts are 20–40% more accurate, while planning cycles speed up by 30%, leading to a 20–30% boost in finance productivity [11][2][14]. This shift from reactive to proactive analytics enables businesses to anticipate future developments rather than just analyze past events [6].
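Data smoothing is the easiest of these techniques to show in a few lines. The sketch below uses simple exponential smoothing, one common smoothing method chosen here for illustration. Each smoothed value is `alpha` times the new observation plus `(1 - alpha)` times the previous smoothed value. The `monthly_demand` figures and the choice of `alpha` are assumptions.

```python
def exponential_smoothing(series, alpha=0.5):
    """Return the smoothed series; its last value serves as a one-step forecast."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = [series[0]]  # start from the first observation
    for value in series[1:]:
        # Blend the new observation with the previous smoothed value.
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed

monthly_demand = [100, 110, 90, 105, 95]  # hypothetical noisy history
smoothed = exponential_smoothing(monthly_demand, alpha=0.5)
next_month_forecast = smoothed[-1]
```

A smaller `alpha` damps noise more strongly but reacts more slowly to real shifts, which is the trade-off forecasting models have to tune.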

Industry Applications of Predictive Analytics

AI’s applications in predictive analytics span multiple industries:

Finance: Enhances risk assessments and stock market predictions.

Retail: Fine-tunes demand forecasting and inventory management.

Logistics: Optimizes routes and predicts demand surges.

Healthcare: Improves staffing efficiency with accurate admission forecasts.

Manufacturing: Reduces downtime through predictive maintenance.

AI also automates up to 50% of workforce management tasks, cutting costs by 10–15% [11][12][13]. However, selecting the right model is crucial. Simpler models work well for smaller datasets, while deep learning thrives on extensive historical data [11].

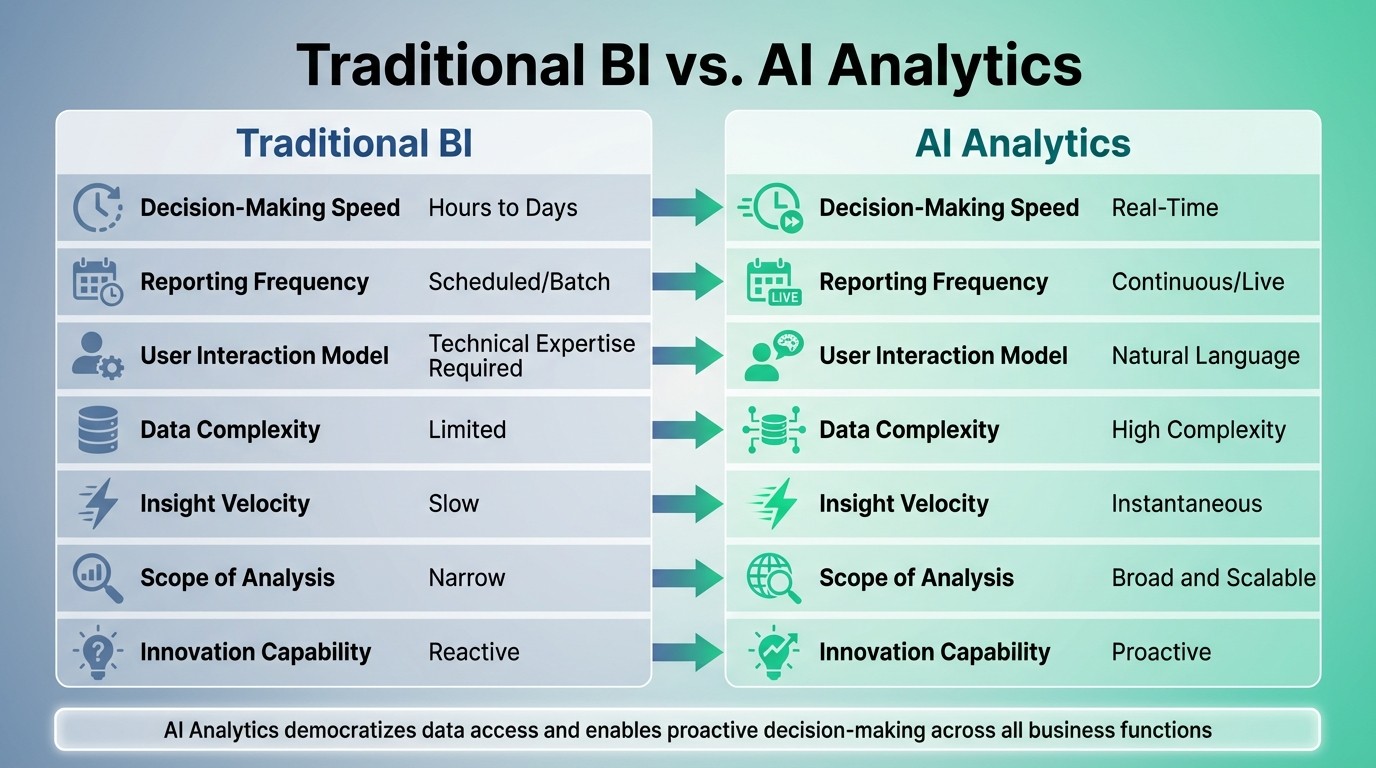

AI-Driven Decision-Making: From Reactive to Anticipatory

Traditional BI vs AI-Powered Analytics: Key Differences in Speed, Access, and Decision-Making

AI is transforming the way decisions are made, shifting the focus from reacting to past events to anticipating future ones. Traditional business intelligence (BI) has always been about analyzing what already happened, but AI analytics takes it a step further by predicting outcomes and suggesting actions. This shift allows companies to stop playing catch-up and instead stay ahead of market and operational challenges. The examples and data that follow highlight how this change delivers real-world benefits in decision-making.

The impact is measurable. Take Rio Tinto, for example: their AI-powered automated haul trucks and drilling machines boosted equipment utilization by 10%-20% [4]. Similarly, Google DeepMind’s cooling optimization slashed energy consumption by 40% [4]. In financial services, automation-driven straight-through processing has increased transaction throughput by 80% while cutting errors in half [4]. These advancements underscore how AI enhances operational efficiency. As AI-driven tools become indispensable for staying competitive, organizations can close capability gaps and respond to market demands more effectively.

AI-Generated Insights for Faster Decisions

Beyond operational improvements, AI-generated insights simplify and accelerate decision-making by pulling together data from multiple sources. AI goes beyond simple data aggregation - it adds a reasoning layer that synthesizes information, provides natural language explanations, and even offers alternative interpretations [15]. For instance, a business leader can ask, “Why did we miss our Q3 targets?” and immediately get a detailed root-cause analysis, eliminating the need to wait for a data analyst [6].

Modern AI systems often use “AI agents” that monitor data continuously, flagging anomalies and emerging trends in real time. These agents enable teams to act faster by providing interactive, conversational insights [6]. A great example is Coca-Cola Europacific Partners, which saved over $40 million in costs and avoided additional expenses by using AI-driven insights in its procurement processes [16].

"AI functions as a co-analyst that augments human analysis for faster, more contextual decision-making, combining the speed and scale of machine intelligence with the strategic judgment that only humans possess." - Databricks [1]

While AI handles repetitive tasks like data retrieval and initial analysis, keeping humans involved ensures strategic decisions are grounded in judgment and experience [1]. Organizations can start by applying AI to areas where incomplete or delayed data has been a bottleneck - like supply chain disruptions or customer churn - to speed up decision-making.

Comparison: Traditional BI vs. AI Analytics

The contrast between traditional BI and AI-powered analytics couldn’t be clearer:

| Feature | Traditional BI | AI Analytics |
|---|---|---|
| Decision-Making Speed | Hours to Days | Real-Time |
| Reporting Frequency | Scheduled/Batch | Continuous/Live |
| User Interaction Model | Technical Expertise | Natural Language |
| Data Complexity | Limited | High Complexity |
| Insight Velocity | Slow | Instantaneous |
| Scope of Analysis | Narrow | Broad and Scalable |
| Innovation Capability | Reactive | Proactive |

Traditional BI relies heavily on analysts to interpret static reports and navigate complex dashboards, making insights slow and technical. AI analytics, on the other hand, democratizes access to insights. With natural language interfaces, any business user can perform complex analysis without needing deep technical skills [1]. This shift enables organizations to predict trends and receive actionable recommendations in real time [6].

"The winners won't just be the companies with the most data, but those who can make the most of it." - Salesforce [6]

To make AI analytics work, organizations need to ensure their data is clean, connected, and well-governed. A unified semantic framework - where terms like “revenue” are consistently defined across all systems - plays a key role in delivering accurate and intuitive insights [6][3]. Starting with a single high-value project, such as optimizing a marketing campaign, can demonstrate the return on investment and build momentum for scaling AI analytics across the enterprise [1].

Maintaining Governance and Trust in AI Analytics

As AI continues to reshape analytics, establishing trust through solid governance has become a top priority. For AI analytics to be effective, they must deliver consistent and explainable results. This is especially critical as 65% of enterprises had deployed GenAI by late 2025 [18]. Without governance, the insights generated by AI can quickly turn into risks rather than assets.

The challenges here are both technical and organizational. AI often pulls data from multiple sources and automatically generates insights, which can lead to inconsistencies. For instance, one department might define an "active customer" as someone who purchased within the last 90 days, while another uses a 60-day window. AI can calculate both definitions, but the resulting discrepancies can undermine confidence in the data. According to Gartner, by 2027, 60% of organizations will fail to achieve the expected value of their AI initiatives due to weak ethical governance frameworks [3]. To avoid this, businesses must lay down clear, enforceable standards that guide AI systems in delivering reliable insights.

Governed Semantic Layers for Consistency

One effective solution is implementing a governed semantic layer, which serves as a unified source of truth for business metrics. By defining key metrics once and applying them consistently, this approach eliminates discrepancies across teams. The semantic layer links business terms directly to executable code, ensuring governance isn’t just a guideline but is actively enforced throughout the data stack [17].
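Linking a business term to executable code can be as simple as keeping one shared definition that every team calls. The sketch below uses the "active customer" example from earlier in this section. The `METRICS` registry, the `is_active` helper, and the 90-day window are illustrative assumptions, not a specific product's format.

```python
from datetime import date, timedelta

# Single governed definition; the 90-day window is an illustrative choice.
METRICS = {
    "active_customer": {
        "description": "Customer with a purchase in the last 90 days",
        "window_days": 90,
    }
}

def is_active(last_purchase, today, metric=METRICS["active_customer"]):
    """Apply the shared definition instead of a team-specific one."""
    return (today - last_purchase) <= timedelta(days=metric["window_days"])

today = date(2026, 1, 22)
# Both teams call the same function, so they cannot disagree.
marketing_view = is_active(date(2025, 11, 10), today)
finance_view = is_active(date(2025, 11, 10), today)
```

This customer last purchased 73 days before `today`, so a team using its own 60-day rule would have counted them as inactive; routing everyone through one definition removes that discrepancy.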

"AI may be the attractive element, but data governance drives true value."

Pierre Cliche, CEO, Infostrux [17]

A good starting point is to focus on a single high-impact metric, such as "Net Revenue." Govern it end-to-end using a semantic layer and track how it enhances the accuracy of AI-generated insights [17]. Once teams experience the benefits firsthand, expanding governance to other metrics becomes far more manageable.

With semantic consistency in place, the next step is ensuring transparency in AI processes to further build trust.

Building Trust Through Inspectable AI Logic

Transparency is key to trusting AI. Users need to understand how AI arrives at its conclusions instead of blindly accepting black-box results. Inspectable AI logic provides this transparency by including the underlying SQL or Python code behind every AI-generated insight, allowing teams to verify calculations or identify errors [1]. This is especially critical in compliance-heavy industries where decisions require a clear audit trail.

Modern AI systems also reduce errors by asking clarifying questions rather than making assumptions. For example, if someone queries "last year’s sales", AI can prompt for clarification: "Do you mean fiscal year or calendar year?" [1] [17]. This interactive feature ensures the AI aligns with the user’s intent, minimizing costly misunderstandings.
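The clarifying-question behavior can be approximated with a small lookup of known ambiguous terms. This is a minimal sketch under the assumption that ambiguity is detected by keyword; real systems would likely rely on the semantic layer and a language model. `AMBIGUOUS_TERMS` and `clarify_or_proceed` are hypothetical names.

```python
# Hypothetical list of terms that need disambiguation before querying.
AMBIGUOUS_TERMS = {
    "last year": ["fiscal year", "calendar year"],
}

def clarify_or_proceed(question, answers=None):
    """Return a clarifying question if one is needed, else None to proceed."""
    answers = answers or {}
    lowered = question.lower()
    for term, options in AMBIGUOUS_TERMS.items():
        # Ask only if the term appears and the user has not resolved it yet.
        if term in lowered and term not in answers:
            return f"Do you mean {' or '.join(options)}?"
    return None

first_reply = clarify_or_proceed("What were last year's sales?")
second_reply = clarify_or_proceed(
    "What were last year's sales?", {"last year": "fiscal year"}
)
```

The first call returns the prompt for clarification, and the second returns `None` because the user has already chosen an interpretation, so the query can run.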

"You can't trust your AI answers if you don't trust your data. Governance isn't optional anymore - it's the cost of accurate AI."

Armon Petrossian, CEO & Co-founder, Coalesce [17]

For even greater reliability, column-level lineage provides a detailed map of how data flows from its source through every transformation to the final AI output [17]. This makes it easier to identify and fix problems, whether it’s a bad data join, a miscalculation, or outdated information.
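Column-level lineage is essentially a graph, and tracing a suspicious number back to its sources is a graph traversal. The sketch below assumes lineage is stored as a mapping from each column to the columns it is derived from. The column names and the `upstream_columns` helper are made up for illustration.

```python
# Hypothetical lineage map: each column lists the columns it is derived from.
LINEAGE = {
    "report.net_revenue": ["model.gross_revenue", "model.refunds"],
    "model.gross_revenue": ["raw.orders.amount"],
    "model.refunds": ["raw.refunds.amount"],
}

def upstream_columns(column, lineage):
    """Return every column that feeds into `column`, directly or indirectly."""
    seen = set()
    stack = [column]
    while stack:
        current = stack.pop()
        for parent in lineage.get(current, []):
            if parent not in seen:  # guard against revisiting shared parents
                seen.add(parent)
                stack.append(parent)
    return seen

sources = upstream_columns("report.net_revenue", LINEAGE)
```

If `report.net_revenue` looks wrong, the returned set lists every intermediate and raw column worth checking.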

Lastly, organizations should adopt a human-in-the-loop model, where AI handles repetitive tasks like data retrieval and preliminary analysis, while humans oversee strategic insights and can override AI logic when necessary [1]. This approach strikes a balance, combining AI’s efficiency with human judgment to ensure analytics remain both fast and trustworthy. Together, these practices create a foundation for AI-powered intelligence that’s reliable, transparent, and secure.

Steps to Implement AI-Powered Analytics

To successfully implement AI-powered analytics, organizations need a structured, step-by-step approach that minimizes risk while building momentum. It's worth noting that nearly 95% of AI initiatives fail to move beyond the pilot stage due to issues like poor data accuracy and unclear responsibility assignments [20]. Companies that succeed view AI analytics as a natural evolution of their data strategy rather than as an isolated IT experiment.

The best way forward is to focus on gradual progress: identify a specific business challenge, establish a solid governance framework, and scale up from there. As highlighted by Harvard Business Review, "The benchmark shouldn't be perfection; it should be relative efficiency compared with your current ways of working" [21]. The aim is to achieve measurable improvements quickly, laying the groundwork for both immediate and long-term growth in AI analytics.

Starting with High-Impact Use Cases

The best starting point is the "No Regrets Zone" - areas where risk is low, processes are well-documented, and AI can deliver immediate results with minimal human intervention [21]. Tasks like resume screening, report summarization, or automating routine data preparation are perfect examples of this.

When selecting use cases, evaluate them based on factors like business value, urgency, cost, risk, and competitive advantage [3]. Focus on areas where inefficiencies or human biases are most apparent [4]. Here are a few real-world examples:

Stanley Black & Decker used AI-powered predictive analytics to monitor equipment performance, cutting annual maintenance costs by 10% and reducing operational downtime by nearly 25% [22].

The U.S. Treasury Department applied machine learning in 2024 to detect anomalies in financial transactions, recovering approximately $4 billion in fraud and improper payments [22].

Grupo Casas Bahia, a Brazilian retail giant, improved its search and recommendation engines with AI, driving a 28% increase in revenue per app user [22].
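The selection factors mentioned above (business value, urgency, cost, risk, and competitive advantage) can be combined into a simple weighted score to compare candidate use cases. The weights, the 1-to-5 ratings, and the candidate names below are purely illustrative assumptions; each organization would set its own.

```python
# Illustrative weights; cost and risk count against a use case.
WEIGHTS = {
    "business_value": 0.3,
    "urgency": 0.2,
    "cost": -0.2,
    "risk": -0.2,
    "competitive_advantage": 0.1,
}

def score(use_case):
    """Weighted sum of the ratings (each rated 1 to 5) for one use case."""
    return sum(WEIGHTS[name] * use_case[name] for name in WEIGHTS)

# Hypothetical candidates with hypothetical ratings.
candidates = {
    "report summarization": {
        "business_value": 3, "urgency": 4, "cost": 1,
        "risk": 1, "competitive_advantage": 2,
    },
    "pricing automation": {
        "business_value": 5, "urgency": 3, "cost": 4,
        "risk": 5, "competitive_advantage": 4,
    },
}
ranked = sorted(candidates, key=lambda name: score(candidates[name]), reverse=True)
```

With these ratings, the low-risk, low-cost candidate ranks first even though the other offers more business value, which matches the advice to start in the "No Regrets Zone".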

A great way to start is by mapping a single high-impact metric - like "Net Revenue" or "Customer Lifetime Value" - from start to finish. Governing this metric through a unified semantic layer helps establish trust and simplifies the process of expanding to other metrics. This approach also captures leadership attention, making it easier to secure funding for broader initiatives.

Scaling AI Analytics Across the Organization

Once initial projects demonstrate their value, the next step is to scale AI analytics while maintaining strong governance and reliability. This requires a shift from centralized experiments to distributed ownership across the organization.

Organizations that excel in scaling AI are nearly three times more likely to adopt a component-based development model (31% vs. 11%) [19]. This approach involves building modular AI components - such as LLM hosting, data chunking, and prompt libraries - that can be updated individually without disrupting the entire system. For instance, a European bank implemented 14 key generative AI components, enabling it to roll out 80% of its core AI use cases within just three months [19].

The governance model you choose is critical. Many companies start with a centralized Center of Excellence (CoE) to develop core capabilities and manage costs. Over time, they transition to a federated model, where individual business units handle their data processing while adhering to central IT standards [19]. For example, a North American utility company addressed fragmented data ownership by piloting data lineage projects. This effort closed key gaps for 20 critical use cases, leading to a 20%-25% efficiency gain in the first year and recovering $10 million from billing discrepancies [20].

Consistency is key when scaling. Using a unified semantic layer ensures that metrics are defined uniformly across teams, preventing inconsistencies that could erode trust in AI insights. According to Gartner, by 2027, 60% of organizations are expected to fall short of their AI goals due to weak governance frameworks [3].

Another proven strategy is the "lighthouse project" approach: develop a high-impact project within one business unit, prove its value, and use it as a model for scaling across the organization [19] [1]. For example, a North American investment bank used this method to develop AI tools within a specific unit. After these projects succeeded, the bank secured centralized funding to expand similar tools across other units, reducing duplication [19].

Lastly, broad access to AI tools is essential, but it must be paired with safeguards to prevent data leaks and security threats [21]. Removing IT bottlenecks allows frontline teams to experiment with AI tools in real time, speeding up innovation while maintaining control. Ultimately, successful organizations embed AI analytics as a strategic capability across the business, rather than treating it as a one-off IT initiative.

Conclusion

AI is revolutionizing data analytics, turning it from a reactive process into a forward-looking, predictive tool that enables real-time decision-making. By automating tedious tasks like data cleaning and identifying patterns, AI allows organizations to focus on achieving strategic goals. As Mike Sargo, Chief Data Officer & Co-Founder at Data Ideology, explains:

"The future of data analytics is not just about analyzing what has happened but about anticipating what will happen next, and AI is the key to unlocking that future" [23].

The numbers back this up. Today, 88% of organizations use AI in at least one function [24], and AI is expected to contribute up to $4.4 trillion annually in global productivity gains [25]. Companies that view AI as a strategic asset, rather than just a tool to boost efficiency, are reaping the rewards. The path to success involves focusing on impactful use cases, ensuring robust data governance, and embedding AI-driven insights into daily operations.

Incremental progress is the way forward. Organizations that start small with targeted, high-value pilots and prioritize clean, AI-ready data often see a 2.5x return on investment [25]. Gradual steps - such as proving value through initial pilots, building trust with transparent governance, and scaling AI thoughtfully - lay the foundation for long-term success. Bryce Hall, Associate Partner at McKinsey, emphasizes this point:

"AI is rarely a stand-alone solution. Instead, companies capture value when they effectively enable employees with real-world domain experience to interact with AI solutions at the right points" [24].

This evolution highlights a shift toward an AI-driven future in analytics.

The transition from traditional business intelligence to AI-powered analytics isn't just about speeding up dashboards. It's about expanding access to actionable insights and moving from looking backward to anticipating what’s ahead. Organizations that embrace AI today are equipping themselves to make faster, smarter decisions consistently.

FAQs

How does AI make predictive analytics more accurate?

AI takes predictive analytics to the next level by quickly processing massive, complex datasets and spotting patterns that traditional methods might overlook. Machine learning models play a key role here - they continuously learn and adjust, fine-tuning predictions over time to deliver increasingly reliable outcomes.

Beyond that, AI simplifies time-consuming tasks like data preparation, cleaning, and modeling. By automating these processes, it minimizes human error and ensures consistent, high-quality data inputs. This efficiency helps organizations identify trends and relationships much faster, enabling more precise forecasts and smarter, data-driven decisions. With AI in the mix, businesses gain insights that are not only actionable but also highly dependable.

How does natural language processing (NLP) make data analytics easier to use?

Natural language processing (NLP) makes working with data much simpler by letting people use everyday language to interact with it. Instead of needing to know technical skills like SQL, users can just ask questions in plain English and get straightforward, useful answers. This makes diving into and understanding complex datasets a lot easier.

NLP works by converting natural language into structured queries, breaking down barriers to data analysis. This means users across all levels - whether they’re business leaders or team members - can quickly uncover patterns and make well-informed decisions without needing help from technical experts. With NLP-powered AI tools, organizations can transform their data into actionable insights faster and with less hassle.

How can businesses ensure trust and proper governance in AI-powered analytics?

To earn trust and uphold strong governance in AI-driven analytics, businesses need to focus on maintaining top-notch data quality, defining clear ownership, and enforcing solid security protocols. Viewing data as a strategic asset is key - this involves creating reusable processes, adhering to regulations, and protecting the integrity of the data.

It's also crucial for organizations to emphasize transparency and ethical behavior by implementing policies that address data privacy, security, and the ethical use of AI. Consistently evaluating data quality and the performance of AI models can help tackle issues like bias and inaccuracies, building trust in AI-powered insights. By following these practices, companies can tap into AI's potential while ensuring decisions are both reliable and trustworthy.