The Role of Machine Learning in Modern Data Analytics


Jan 27, 2026

How machine learning automates anomaly detection, customer segmentation, and forecasting, and how platforms and MLOps scale explainable analytics.

Machine learning (ML) is changing data analytics by automating repetitive analysis, surfacing patterns in large datasets, and predicting outcomes from historical data. It lets businesses analyze datasets that are too large for manual review and turn the results into actionable insights. Here's how ML is reshaping analytics:

Anomaly Detection: Tools like Isolation Forest flag outliers in large datasets, helping industries detect fraud, system issues, or quality problems.

Customer Segmentation: ML clusters customers based on behavior, enabling targeted marketing and reducing reliance on predefined assumptions.

Forecasting: Advanced models improve prediction accuracy by processing nonlinear patterns and integrating diverse data sources.

Platforms like Querio simplify ML adoption by integrating natural language queries, automating workflows, and ensuring consistency through semantic layers. This makes ML accessible to teams without coding expertise, streamlining analytics and decision-making across organizations.


Machine Learning Basics for Data Analytics

Three Types of Machine Learning in Data Analytics: Supervised, Unsupervised, and Reinforcement Learning

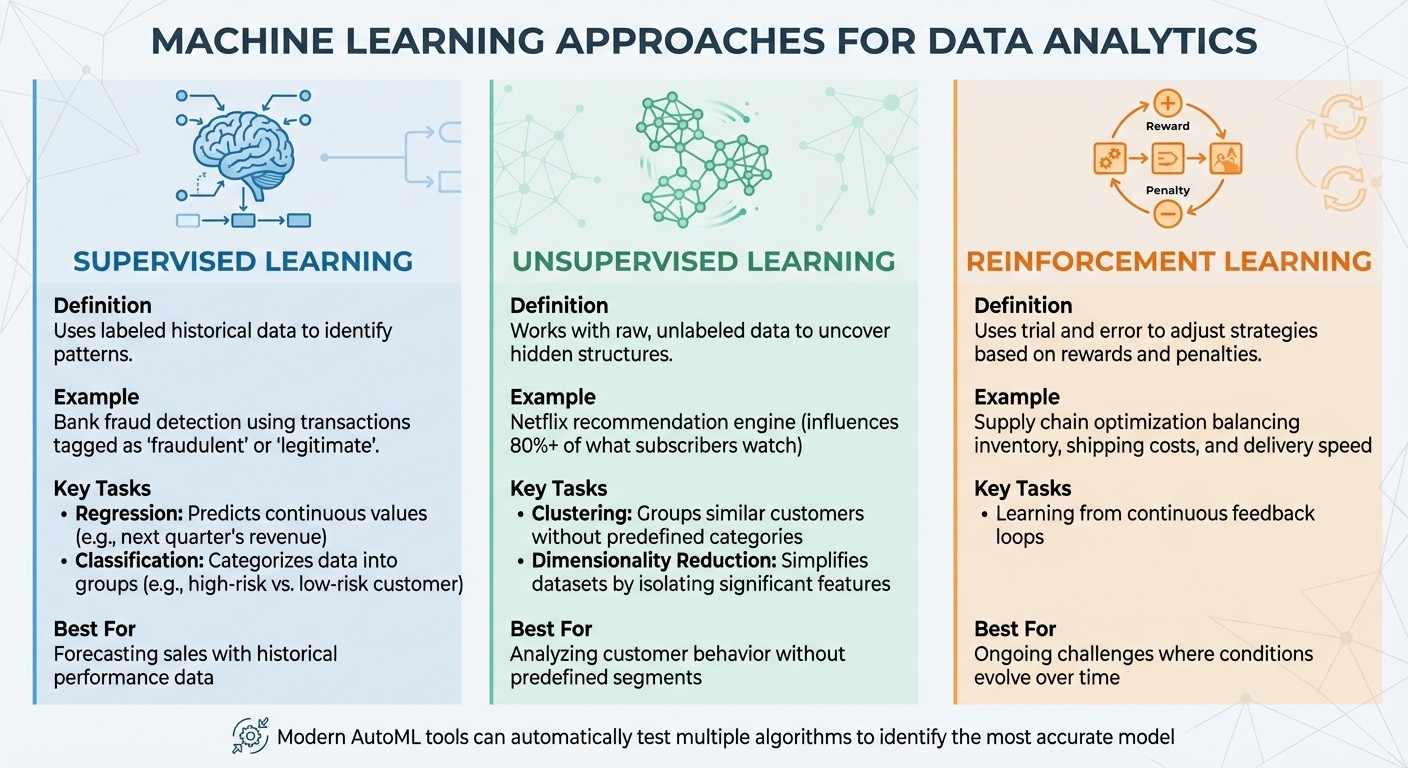

Machine learning revolves around three main approaches, each tailored to tackle specific analytics challenges. Supervised learning relies on labeled historical data to identify patterns. For example, a bank might train a fraud detection model using thousands of transactions tagged as either "fraudulent" or "legitimate." The model learns from these examples and applies that knowledge to flag suspicious new transactions. This method supports two key tasks: regression, which predicts continuous values like next quarter's revenue, and classification, which categorizes data into groups like "high-risk customer" or "low-risk customer" [4][6].
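The two supervised tasks can be illustrated with a minimal sketch using scikit-learn. Everything here is an assumption made for illustration: the data is synthetic, the two transaction features (amount and hour of day) are invented, and logistic and linear regression are simply convenient stand-ins for whatever model a real team would choose.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, LogisticRegression
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)

# Classification: synthetic "transactions" with two made-up features
# (amount in dollars, hour of day). Label 1 = fraudulent, 0 = legitimate.
legit = np.column_stack([rng.normal(50, 15, 500), rng.normal(14, 3, 500)])
fraud = np.column_stack([rng.normal(400, 80, 50), rng.normal(3, 1.5, 50)])
X = np.vstack([legit, fraud])
y = np.array([0] * 500 + [1] * 50)

# Hold out a test set so accuracy is measured on unseen transactions.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)
clf = LogisticRegression(max_iter=1000).fit(X_train, y_train)
accuracy = clf.score(X_test, y_test)

# Regression: predict a continuous value (revenue) from a quarter index.
quarters = np.arange(1, 13).reshape(-1, 1)
revenue = 100 + 5 * quarters.ravel() + rng.normal(0, 2, 12)
reg = LinearRegression().fit(quarters, revenue)
next_quarter_revenue = float(reg.predict(np.array([[13]]))[0])

print(f"fraud classifier accuracy: {accuracy:.2f}")
print(f"forecast for quarter 13: {next_quarter_revenue:.1f}")
```

The classifier outputs a category while the regressor outputs a number, which is the only structural difference between the two tasks; both learn from labeled history.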

Unsupervised learning, on the other hand, works with raw, unlabeled data, uncovering hidden structures without explicit guidance. Instead of being told what to find, the algorithm identifies natural groupings and patterns. Netflix, for instance, is reported to use such techniques in its recommendation engine, which influences more than 80% of what subscribers watch [6]. In business analytics, unsupervised methods such as clustering group similar customers together without predefined categories, while dimensionality reduction simplifies complex datasets by isolating the most significant features.
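A short sketch of clustering and dimensionality reduction, again with scikit-learn. The four customer features and the three synthetic groups are assumptions for illustration, and k-means and PCA are just common choices for these two tasks, not methods named by the sources cited here.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(1)

# Hypothetical features per customer:
# monthly spend, visits per month, support tickets, tenure in months.
centers = np.array([
    [20, 2, 0, 3],     # occasional buyers
    [80, 10, 1, 24],   # regulars
    [200, 25, 4, 60],  # heavy users
], dtype=float)
X = np.vstack([c + rng.normal(0, [5, 1, 0.5, 2], size=(100, 4)) for c in centers])

# Scale first so no single feature dominates the distance calculation.
X_scaled = StandardScaler().fit_transform(X)

# Clustering: no labels are supplied; k-means finds the groups itself.
labels = KMeans(n_clusters=3, n_init=10, random_state=0).fit_predict(X_scaled)

# Dimensionality reduction: compress four features to two for plotting.
X_2d = PCA(n_components=2).fit_transform(X_scaled)

print("customers per cluster:", np.bincount(labels))
print("reduced shape:", X_2d.shape)
```

In practice the number of clusters is not known in advance, so teams usually compare several values of `n_clusters` before settling on one.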

Reinforcement learning takes a different approach, using trial and error to adjust strategies based on rewards and penalties [5][6]. This method is ideal for optimizing decisions over time. For example, in supply chain management, reinforcement learning helps balance inventory levels, shipping costs, and delivery speed by learning from continuous feedback loops.
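The trial-and-error loop can be sketched with an epsilon-greedy bandit, one of the simplest forms of reinforcement learning (it has actions and rewards but no state). The demand range, prices, and costs below are invented for illustration, and `simulate_profit` is a hypothetical stand-in for real feedback from a supply chain.

```python
import random


def simulate_profit(order_qty, rng):
    """Hypothetical environment: profit for one period given an order size."""
    demand = rng.randint(25, 35)
    sold = min(order_qty, demand)
    leftover = max(order_qty - demand, 0)
    unmet = max(demand - order_qty, 0)
    # Assumed economics: $5 margin per sale, $4 holding cost, $3 stockout penalty.
    return 5 * sold - 4 * leftover - 3 * unmet


def epsilon_greedy(actions, steps=5000, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    value = {a: 0.0 for a in actions}  # running average reward per action
    pulls = {a: 0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(actions)  # explore: try a random order size
        else:
            action = max(actions, key=lambda a: value[a])  # exploit best so far
        reward = simulate_profit(action, rng)
        pulls[action] += 1
        # Incremental mean update from the observed reward.
        value[action] += (reward - value[action]) / pulls[action]
    return value, pulls


actions = [10, 20, 30, 40]
value, pulls = epsilon_greedy(actions)
best_order_qty = max(actions, key=lambda a: value[a])
print("learned best order quantity:", best_order_qty)
```

The agent is never told that demand averages 30 units; it discovers the most profitable order size purely from the rewards it receives.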

Selecting the right approach depends on your business needs and the data at hand. If you're forecasting sales with years of historical performance data, supervised learning is a natural fit. If you're analyzing customer behavior without predefined segments, unsupervised learning can uncover patterns you might not expect. For ongoing challenges where conditions evolve, reinforcement learning adapts and improves with every decision cycle.

Modern platforms now offer AutoML tools that automatically test multiple algorithms to identify the most accurate model [2][1]. When paired with platforms like Querio, these models can turn raw data into actionable insights. The key is to start with a well-defined problem - whether that's reducing customer churn, detecting anomalies, or optimizing marketing spend - and then choose the machine learning approach that aligns with your goals and available data.

These foundational techniques underpin the advanced analytics that inform strategic decisions. The next section looks at how they streamline analytics workflows.

How Machine Learning Improves Analytics Workflows

Machine learning (ML) is reshaping analytics workflows by continuously monitoring data, uncovering patterns, and spotting anomalies in real time. This allows teams to act quickly and base decisions on insights derived from current data. Here’s a closer look at how ML is transforming key analytics tasks.

Detecting Anomalies in Large Datasets

Anomaly detection is one area where ML truly shines. Unlike traditional systems that rely on manually set thresholds, ML models like Isolation Forest and Local Outlier Factor can process millions of data points to identify complex outliers [7].

"Analytics from machine learning helps identify outliers or trends in the data that otherwise might not be found - if you didn't think to look for something, you would never find it." - Barry Mostert, Senior Director of Product Marketing, Oracle Analytics [2]

For example, financial institutions use supervised models such as Random Forests and Deep Neural Networks to detect credit card fraud by analyzing historical labeled data [10]. SaaS companies, on the other hand, apply unsupervised techniques like Autoencoders to catch system performance issues before they affect users [8]. Similarly, e-commerce platforms monitor product return rates to detect quality problems early, using sudden spikes as a signal to investigate [8].

To ensure accurate anomaly detection, data preparation is critical. High-dimensional datasets often require specialized algorithms designed to isolate anomalies effectively [7]. With these capabilities, organizations can address potential issues before they escalate, minimizing risks and disruptions.
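As a minimal sketch of the two algorithms named above, the following uses scikit-learn's `IsolationForest` and `LocalOutlierFactor` on synthetic two-dimensional data. The data, the five injected outliers, and the `contamination=0.02` setting are assumptions chosen for illustration; real datasets need that rate estimated or tuned.

```python
import numpy as np
from sklearn.ensemble import IsolationForest
from sklearn.neighbors import LocalOutlierFactor

rng = np.random.default_rng(42)

# 500 "normal" points plus 5 injected outliers far from the main cluster.
normal = rng.normal(loc=0.0, scale=1.0, size=(500, 2))
outliers = np.array([
    [8.0, 8.0], [-9.0, 7.5], [10.0, -8.0], [-8.5, -9.5], [0.0, 12.0],
])
X = np.vstack([normal, outliers])

# contamination is the assumed share of anomalies; it sets the cut-off.
iso_labels = IsolationForest(
    n_estimators=200, contamination=0.02, random_state=0
).fit_predict(X)
lof_labels = LocalOutlierFactor(
    n_neighbors=20, contamination=0.02
).fit_predict(X)

# Both return -1 for anomalies and 1 for inliers.
print("Isolation Forest flagged:", int((iso_labels == -1).sum()))
print("Local Outlier Factor flagged:", int((lof_labels == -1).sum()))
```

Neither model needs a hand-set threshold per column, which is the contrast with rule-based monitoring drawn above.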

Automating Customer Segmentation

Traditional customer segmentation often relies on predefined categories, which can miss subtle behavioral patterns. ML changes this by clustering data from CRM systems, web logs, and transaction records to uncover hidden customer groups. Feature engineering combines related data points into stronger predictive inputs, for example by rolling individual page visits up into daily visit totals or by merging fields such as role and industry [9].
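The two feature-engineering steps mentioned above might look like this in pandas. The table layouts, column names, and sample rows are all hypothetical.

```python
import pandas as pd

# Hypothetical raw web log: one row per page visit.
events = pd.DataFrame({
    "customer_id": [1, 1, 1, 2, 2, 3],
    "timestamp": pd.to_datetime([
        "2026-01-05 09:00", "2026-01-05 15:30", "2026-01-06 10:00",
        "2026-01-05 11:00", "2026-01-05 11:05", "2026-01-07 08:00",
    ]),
    "page": ["/pricing", "/docs", "/pricing", "/home", "/docs", "/home"],
})

# Roll individual page visits up into daily visit totals per customer.
daily_visits = (
    events.assign(date=events["timestamp"].dt.date)
    .groupby(["customer_id", "date"])
    .size()
    .reset_index(name="daily_visits")
)

# Hypothetical CRM profile table.
profiles = pd.DataFrame({
    "customer_id": [1, 2, 3],
    "role": ["analyst", "engineer", "analyst"],
    "industry": ["retail", "finance", "finance"],
})

# Merge two categorical fields into a single combined feature.
profiles["role_industry"] = profiles["role"] + "_" + profiles["industry"]

print(daily_visits)
print(profiles)
```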

Once segments are identified, supervised learning can build models to predict customer behavior. For instance, these models can forecast which customers are likely to churn or upgrade, enabling marketing teams to craft personalized campaigns for specific groups [10]. Unlike manual methods, ML-driven segmentation can reduce analyst bias by deriving groups from observed behavior rather than assumptions, although the results can still reflect biases present in the underlying data. Using a feature store as a central repository keeps features consistent between training and production, while monitoring for concept drift keeps segmentations accurate over time [11]. This approach lets businesses act proactively in marketing and customer service.
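One common way to monitor for drift is the Population Stability Index (PSI), which compares how a feature is distributed at training time and in production. It is shown here as one possible check, not as the method used by the cited sources. PSI measures shifts in input distributions, which is often used as a practical early signal for concept drift; the spend figures are synthetic.

```python
import math
import random


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


rng = random.Random(0)
baseline = [rng.gauss(100, 15) for _ in range(5000)]  # spend at training time
stable = [rng.gauss(100, 15) for _ in range(5000)]    # same behavior later
shifted = [rng.gauss(130, 15) for _ in range(5000)]   # behavior has changed

psi_stable = psi(baseline, stable)
psi_shifted = psi(baseline, shifted)
print(f"PSI stable:  {psi_stable:.3f}")
print(f"PSI shifted: {psi_shifted:.3f}")
```

A widely used rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift worth investigating, though teams should calibrate these cut-offs on their own data.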

Improving Forecasting Accuracy

Traditional forecasting methods often struggle with nonlinear patterns or hidden correlations in large datasets. ML models, however, can integrate diverse data sources to deliver more precise predictions [1][12].

"Machine learning will transform finance, making finance operations more effective and driving transformation that will allow employees to focus on value-adding activities such as enhancing their capabilities in customer experience and delivering better results to their internal and external customers." - Shawn Seasongood, Managing Director, Protiviti [12]

Modern platforms simplify forecasting with AI tools like AutoML that test multiple algorithms to identify the most accurate model for a dataset, making advanced forecasting accessible with just a few clicks [1]. For businesses looking to scale, MLOps pipelines automate the entire process - from data extraction to model retraining. Continuous validation through techniques like A/B testing or canary deployments ensures forecasts remain reliable as conditions evolve. Additionally, automated monitoring can trigger retraining when model performance declines or data patterns shift significantly [11].
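The core idea behind testing multiple algorithms can be sketched by hand with scikit-learn. This is not an AutoML product, only the comparison loop such tools automate. The seasonal demand data is synthetic, the three candidate models are arbitrary choices, and `needs_retraining` with its 20% tolerance is a hypothetical monitoring rule.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor, RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import TimeSeriesSplit, cross_val_score

rng = np.random.default_rng(7)

# Ten years of synthetic monthly demand with seasonality and a promo effect.
n_months = 120
month = np.arange(n_months) % 12 + 1
promo = rng.integers(0, 2, n_months)
demand = (
    100 + 20 * np.sin(2 * np.pi * month / 12) + 15 * promo
    + rng.normal(0, 3, n_months)
)
X = np.column_stack([month, promo])

candidates = {
    "linear": LinearRegression(),
    "random_forest": RandomForestRegressor(n_estimators=100, random_state=0),
    "gradient_boosting": GradientBoostingRegressor(random_state=0),
}

# TimeSeriesSplit always trains on the past and validates on the future.
cv = TimeSeriesSplit(n_splits=4)
scores = {
    name: -cross_val_score(
        model, X, demand, cv=cv, scoring="neg_mean_absolute_error"
    ).mean()
    for name, model in candidates.items()
}
best_name = min(scores, key=scores.get)


def needs_retraining(baseline_mae, recent_mae, tolerance=0.2):
    """Flag retraining when recent error exceeds baseline by the tolerance."""
    return recent_mae > baseline_mae * (1 + tolerance)


for name, mae in scores.items():
    print(f"{name}: MAE {mae:.2f}")
print("selected model:", best_name)
```

Because the seasonal pattern is nonlinear in the month number, the tree-based models should beat the linear baseline here, which is the kind of gap the paragraph above describes.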

Using Machine Learning with Querio

Querio brings together SQL clients, Python notebooks, and BI tools into a single platform, allowing machine learning workflows to operate directly on live warehouse data [16]. This setup eliminates the hassle of switching between multiple tools or exporting data for analysis. By reducing manual processes and keeping models in sync with current business definitions, Querio simplifies the entire workflow - from connecting to data to generating insights.

Connecting to Your Data Warehouse

Querio establishes direct connections to major cloud data warehouses like Snowflake, BigQuery, and Redshift using encrypted, read-only credentials [16]. This live connection ensures that machine learning models work with up-to-date, governed data - no need for extractions or duplicates. For Snowflake users, the integration offers advanced capabilities, including the Feature Store for point-in-time accuracy and the Model Registry for tracking versions and lineage [13][14]. Analysts can also use built-in automated ML functions to handle tasks like forecasting, anomaly detection, and classification - all within standard SQL [15].

Generating SQL and Python with AI Agents

Querio leverages AI agents to convert natural language prompts into SQL and Python code [16]. These agents follow a multi-step process that includes classification and feature extraction. An error-correction loop then checks the generated code against the warehouse's compiler so that invalid queries are caught before they are returned [17][18]. According to the benchmark cited in [18], this method is about 14% more accurate than traditional text-to-SQL tools and nearly twice as accurate as single-shot SQL generation using state-of-the-art models like GPT-4o. This capability allows analysts to build machine learning pipelines more efficiently without writing every query from scratch.
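The general shape of such an error-correction loop can be sketched as follows. This is not Querio's implementation: SQLite stands in for the warehouse compiler, `fake_generator` is a hypothetical stub in place of a real language model, and the `orders` table is invented.

```python
import sqlite3


def validate_sql(conn, sql):
    """Compile the query without running it; return an error message or None."""
    try:
        conn.execute(f"EXPLAIN {sql}")
        return None
    except sqlite3.Error as exc:
        return str(exc)


def generate_with_correction(question, generate_sql, conn, max_attempts=3):
    """Ask the generator for SQL, feeding compiler errors back until it is valid."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        sql = generate_sql(question, feedback)
        error = validate_sql(conn, sql)
        if error is None:
            return sql, attempt
        feedback = f"Previous query failed: {error}"
    raise RuntimeError(f"No valid SQL after {max_attempts} attempts: {feedback}")


def fake_generator(question, feedback):
    """Hypothetical stand-in for an LLM: wrong first, fixed after feedback."""
    if feedback is None:
        return "SELECT SUM(revenu) FROM orders"  # misspelled column
    return "SELECT SUM(revenue) FROM orders"


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, revenue REAL)")

sql, attempts = generate_with_correction(
    "What is total revenue?", fake_generator, conn
)
print(f"accepted after {attempts} attempt(s): {sql}")
```

Compiling with `EXPLAIN` catches syntax errors and unknown columns without touching any data; it cannot tell whether a valid query actually answers the question, which is why verified queries and a semantic layer still matter.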

Maintaining Consistency with the Semantic Layer

Querio’s shared semantic layer ensures machine learning models adhere to the same business logic used in standard reporting. It bridges the gap between technical schemas and business users by incorporating descriptive names, synonyms, and predefined metrics [18]. Verified queries - SQL examples approved by humans - can also be added to serve as a "ground truth" for AI agents, improving the reliability of generated responses [18].
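To make the idea concrete, a semantic layer can be thought of as a lookup from business vocabulary to governed SQL. The structure below is a simplified, hypothetical sketch and not Querio's actual format; the metric names, synonyms, and table name are invented.

```python
# Hypothetical semantic layer: business terms mapped to governed SQL.
SEMANTIC_LAYER = {
    "table": "analytics.orders AS o",
    "metrics": {
        "total_revenue": {
            "sql": "SUM(o.amount_usd)",
            "synonyms": ["revenue", "sales"],
        },
        "active_customers": {
            "sql": "COUNT(DISTINCT o.customer_id)",
            "synonyms": ["customers", "buyers"],
        },
    },
}


def resolve_metric(term):
    """Map a business term or synonym to its governed metric name."""
    key = term.strip().lower()
    for name, spec in SEMANTIC_LAYER["metrics"].items():
        if key == name or key in spec["synonyms"]:
            return name
    raise KeyError(f"No governed metric matches {term!r}")


def build_query(term):
    """Build SQL from the single shared definition of the metric."""
    name = resolve_metric(term)
    expression = SEMANTIC_LAYER["metrics"][name]["sql"]
    return f"SELECT {expression} AS {name} FROM {SEMANTIC_LAYER['table']}"


print(build_query("Sales"))
print(build_query("buyers"))
```

Because "sales" and "revenue" resolve to the same expression, a dashboard, an ad-hoc question, and a model feature all compute the metric the same way.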

"An LLM alone is like a super-smart analyst who is new to your data organization - they lack the semantic context about the nuances of your data." - Snowflake Cortex Analyst Team [18]

Scaling Machine Learning in Business Intelligence

Integrating machine learning (ML) into tools like Querio is just the beginning; scaling these models for real-world use demands a solid foundation of automation, transparency, and efficient distribution. Moving from isolated ML experiments to production-ready business intelligence (BI) workflows involves more than just building accurate models. To handle the complexity of real-world deployments, businesses need automated workflows, clear and understandable outputs, and centralized oversight. It’s worth noting that ML code makes up only a small part of the overall system. To succeed, organizations must invest in infrastructure that supports data validation, feature management, and ongoing monitoring [19].

Automating Data Preparation

Manual processes like cleaning data and engineering features often slow down ML projects, especially when scaling across multiple use cases. Automated ML pipelines take care of the entire process - from collecting and validating data to feature extraction and training - streamlining efforts and eliminating repetitive tasks [20][21]. A centralized Feature Store plays a crucial role here by allowing teams to reuse features and avoid training-serving mismatches, a key factor in scaling ML pipelines effectively [19][13]. Additionally, automated data validation tools can identify schema errors or shifts in data patterns early, preventing issues that might lead to model degradation in production [19].
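A very small version of automated schema validation, using only the Python standard library. The expected schema and the sample batch are hypothetical; production pipelines typically rely on dedicated validation tooling rather than hand-written checks like this.

```python
# Hypothetical contract for an incoming batch of customer records.
EXPECTED_SCHEMA = {"customer_id": int, "monthly_spend": float, "plan": str}


def validate_rows(rows, schema):
    """Return a list of human-readable schema violations."""
    errors = []
    for i, row in enumerate(rows):
        missing = schema.keys() - row.keys()
        if missing:
            errors.append(f"row {i}: missing {sorted(missing)}")
        for column, expected_type in schema.items():
            if column in row and not isinstance(row[column], expected_type):
                errors.append(
                    f"row {i}: {column} expected {expected_type.__name__}, "
                    f"got {type(row[column]).__name__}"
                )
    return errors


batch = [
    {"customer_id": 1, "monthly_spend": 49.0, "plan": "pro"},
    {"customer_id": 2, "monthly_spend": "49", "plan": "pro"},  # wrong type
    {"customer_id": 3, "monthly_spend": 20.0},                 # missing column
]

errors = validate_rows(batch, EXPECTED_SCHEMA)
for message in errors:
    print(message)
```

Running a check like this before training or scoring stops a malformed batch at the pipeline boundary instead of letting it degrade a model in production.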

MLOps maturity is typically described in stages: manual workflows (Level 0), automated pipelines (Level 1), and eventually fully automated CI/CD systems that enable frequent and reliable model updates [19]. Tools like BigQuery ML further streamline the process by running models directly within the data warehouse, reducing the need for data transfers and simplifying operations [3].

Once automation is in place, the next step is to establish trust in the models, which is where explainable AI becomes essential.

Building Trust with Explainable AI

Machine learning models can lose credibility if stakeholders don’t understand how predictions are made. Explainability - knowing what a model is saying and the data behind its conclusions - is critical for building trust [1]. For example, if an AI model can explain which specific sales reports influenced a forecast, users are more likely to trust its output [1].

"When the output of an AI model is explainable, we understand what it's telling us and, at a high level, where the answer came from." - Michael Chen, Senior Writer, Oracle [1]

Explainability also helps organizations address model drift and bias as data environments evolve. Techniques like Shapley values quantify how much each input feature contributed to an individual prediction, while golden datasets - curated reference samples - serve as benchmarks for identifying inconsistencies between training and production data [13][22]. Tracking ML lineage, which maps the journey from raw data to final predictions, supports compliance, reproducibility, and easier debugging [13].
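Shapley values can be computed exactly for a model with only a few features, which makes the idea easy to see. In this sketch the `forecast` function, its three inputs, and the baseline values are all invented for illustration; real projects usually use an approximation library because exact computation grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values: features outside a coalition take baseline values."""
    n = len(instance)

    def value(coalition):
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Weight of coalitions of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def forecast(x):
    """Hypothetical sales forecast with an interaction between two inputs."""
    pipeline, seasonality, discount = x
    return 0.5 * pipeline + 20 * seasonality - 2 * discount * seasonality


instance = [200, 1.2, 10]  # the prediction being explained
baseline = [100, 1.0, 0]   # a "typical" reference input

phi = shapley_values(forecast, instance, baseline)
for name, contribution in zip(["pipeline", "seasonality", "discount"], phi):
    print(f"{name}: {contribution:+.2f}")
```

The contributions always add up to the difference between the explained forecast and the baseline forecast, so stakeholders can see exactly how much each input moved the number.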

With trust established, the focus shifts to sharing these insights effectively across teams and applications.

Distributing Insights with Querio Dashboards

Once ML models are validated, the next challenge is distributing their insights across departments and customer-facing platforms. Querio Dashboards simplify this by turning analyses into live, recurring reports that pull directly from warehouse data. This eliminates the need for exporting data or duplicating tools. Because these dashboards rely on the same semantic layer that governs AI-generated queries, they ensure consistency between machine learning insights and standard reporting formats.

For external applications, Querio’s embedded analytics features allow businesses to integrate governed analytics logic into their customer-facing tools via APIs or iframes. This approach ensures that sensitive data is only accessible to authorized users, thanks to role-based access controls and SOC 2 Type II compliance. By leveraging these capabilities, organizations can scale machine learning insights without creating data silos or duplicating governance frameworks across different platforms.

Conclusion

Machine learning is transforming the way we approach data analytics by automating tasks like anomaly detection, customer segmentation, and forecasting. These capabilities uncover patterns and relationships that traditional analytics might miss. Barry Mostert, Senior Director of Product Marketing at Oracle Analytics, highlights this advantage:

"ML augments traditional analytics by identifying patterns, trends, and correlations that would otherwise have gone unnoticed by traditional analytics alone" [2].

However, the real challenge isn’t just building machine learning models - it’s integrating them into dependable, scalable systems [11]. Without automated data preparation, clear and explainable outputs, and consistent insight sharing, these projects often fail to reach their full potential.

This is where Querio’s approach shines. Its AI agents can generate SQL and Python code from plain English questions, making it easier for data analysts to interact with complex systems. The semantic layer ensures that insights - whether from dashboards, ad-hoc queries, or embedded applications - align with consistent business definitions. This setup empowers analysts to build and run models using tools they already know, eliminating the need for deep programming expertise [3][2]. By combining these features, Querio provides a framework for delivering reliable, scalable analytics that can benefit the entire organization.

FAQs

How does machine learning make anomaly detection in large datasets more effective?

Machine learning has transformed anomaly detection by making it possible to automatically spot unusual data points that stray from expected patterns. By examining historical data, these systems develop an understanding of what "normal" looks like and keep a constant watch on new data to pinpoint outliers. For example, machine learning models can identify anomalies in real time, such as technical malfunctions or signs of fraud, all without needing pre-labeled data.

What sets machine learning apart from traditional rule-based approaches is its ability to adapt to changing data and handle large, complex datasets. This capability enables organizations to uncover subtle patterns, react swiftly to critical situations like cybersecurity breaches, and significantly cut down on manual monitoring. Because models can be retrained as new data arrives, detection stays accurate as conditions change.

How does reinforcement learning improve supply chain management?

Reinforcement learning (RL) brings a major shift to supply chain management by empowering systems to learn and adapt through experience. Instead of relying on fixed rules, RL leverages trial-and-error to fine-tune strategies, making it possible for supply chains to adjust dynamically to real-time changes and uncertainties.

Take inventory management as an example - RL can help maintain optimal stock levels, avoid overstocking, and prevent shortages. It can also streamline procurement and identify more cost-efficient logistics routes. The result can be fewer delays, lower costs, and improved operational efficiency. By adopting systems that continuously learn and improve, businesses can create supply chains that are more agile and better equipped to handle today’s fast-moving, complex markets.

How does Querio make it easier to integrate machine learning into business intelligence workflows?

Querio simplifies the process of embedding machine learning into business intelligence workflows. By connecting directly to live data sources, it removes the hassle of duplicating data or dealing with complicated configurations. Its user-friendly interface makes it easy for anyone - whether they're tech-savvy or not - to ask questions using natural language and get meaningful insights.

Packed with AI-driven tools like predictive analytics, anomaly detection, and automated reporting, Querio empowers businesses to spot trends, detect outliers, and make faster, more informed decisions. Plus, with secure data connections and governance features, it ensures data security and compliance, helping organizations adopt advanced analytics without adding extra strain on IT teams.