AI Insight Generation: How It Works

Business Intelligence

Jan 14, 2026

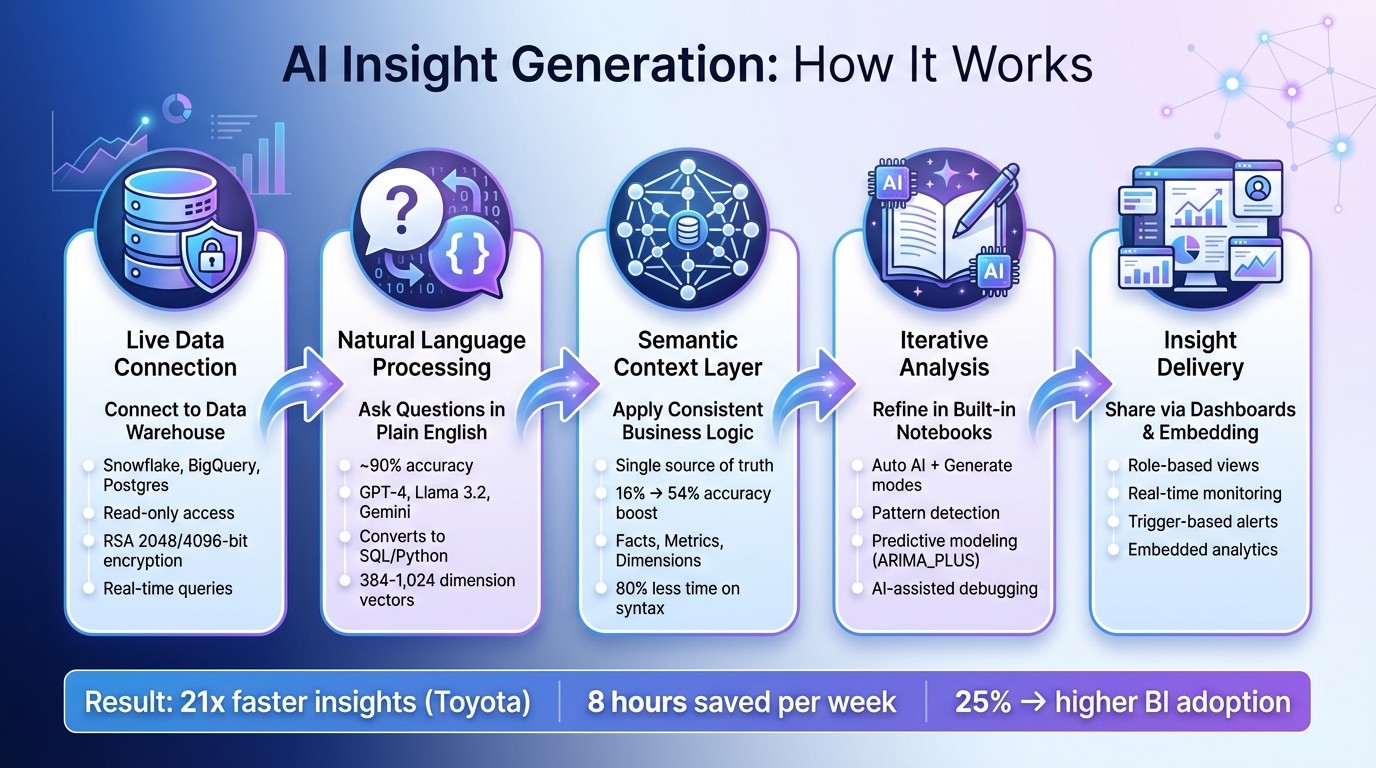

AI turns plain language into accurate SQL/Python queries against live warehouses, standardizes metrics with a semantic layer, and delivers real-time insights.

AI-powered tools like Querio are transforming how businesses analyze data. By connecting directly to live data warehouses (e.g., Snowflake, BigQuery), these platforms let you ask questions in plain English and get accurate SQL or Python-based answers instantly. Here's what makes this approach stand out:

Natural Language Queries (NLQ): Converts everyday language into SQL or Python, with a reported text-to-SQL accuracy of about 90%.

Live Data Access: Uses read-only credentials to query real-time data securely, avoiding outdated snapshots.

Semantic Context Layer: Ensures consistent metrics and terminology across teams, eliminating miscommunication.

Built-in Notebooks: Enables iterative analysis, debugging, and predictive modeling without exporting data.

Dynamic Dashboards: Delivers real-time, role-specific insights directly to stakeholders or embedded in apps.

This shift simplifies data analysis, making it faster and accessible to non-technical users while maintaining precision, security, and governance.

How AI-Powered Insight Generation Works: From Query to Dashboard


Connecting to Live Data Warehouses

Querio connects directly to your existing data warehouse - whether it's Snowflake, BigQuery, or Postgres - using encrypted, read-only credentials. Instead of duplicating tables or exporting CSV files, it queries the warehouse in real time, so your data stays within your infrastructure at all times. Connections use RSA key pairs (2048 or 4096-bit), and TLS 1.3 protects all data in transit. This setup keeps every query secure and auditable.

Working with live data means you get up-to-the-minute accuracy. Unlike batch processes that can delay reporting by hours or even days, Querio allows you to analyze data as soon as it lands in your warehouse. There’s no risk of outdated information, no sync errors, and no scattered copies in spreadsheets or BI tools. By keeping the source of truth centralized, governance remains intact and streamlined.

Security is built into every layer of Querio's architecture. IP whitelisting and SSH tunneling allow the platform to navigate firewalls and private networks without exposing your database to the public internet. Credentials and metadata are stored securely with AES-256 encryption. Since the connection is strictly read-only, Querio cannot modify, delete, or corrupt your data. This gives data teams peace of mind, knowing that analytics won’t interfere with production systems.
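To make the read-only guarantee concrete, here is a minimal sketch that uses Python's built-in sqlite3 module as a stand-in for a warehouse. This is an assumption made for portability: Querio's actual connectors are not shown in this article, and the file name, table, and helper names below are hypothetical.

```python
import os
import sqlite3
import tempfile


def open_read_only(db_path: str) -> sqlite3.Connection:
    """Open an existing SQLite file so that any write attempt fails."""
    # mode=ro is SQLite's URI flag for a read-only connection.
    return sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)


def demo() -> tuple[int, bool]:
    """Create a tiny database, reopen it read-only, and try to write."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "warehouse.db")  # hypothetical stand-in file

        # Set-up step with a normal (writable) connection.
        setup = sqlite3.connect(path)
        setup.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
        setup.execute("INSERT INTO orders VALUES (1, 19.99)")
        setup.commit()
        setup.close()

        conn = open_read_only(path)
        try:
            # Reads work as usual.
            row_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
            try:
                conn.execute("DELETE FROM orders")
                write_blocked = False
            except sqlite3.OperationalError:
                # The database engine itself rejects the write.
                write_blocked = True
        finally:
            conn.close()
        return row_count, write_blocked
```

The point of the sketch is that the restriction lives in the connection itself, not in the application code, so a buggy or malicious query still cannot change data.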

By eliminating the need for data copies, maintenance becomes simpler, freeing up teams to focus on meaningful analysis. Direct queries to the warehouse remove the need for time-consuming data preparation and syncing. This means more time spent on generating insights and less on managing workflows.

This live connection approach not only ensures speed but also builds trust in your analytics. When your platform accesses the same data as your warehouse, in real time, you get reliable answers you can act on immediately. Plus, this foundation paves the way for deeper analysis using Querio's built-in notebooks.

Natural Language to Code Translation

Querio's AI takes plain English instructions and turns them into actual SQL or Python code, all while making the process completely transparent. You can review the generated code to see exactly how it works.

The process begins with metadata contextualization. The AI gathers details about your database - like schemas, tables, columns, primary keys, and foreign keys. This step ensures the system understands how your natural language terms relate to the actual structure of your data. For instance, if you ask about "customer lifetime value", the AI uses table and column descriptions to pinpoint the right fields and calculations.
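One way to picture metadata contextualization is as a step that flattens schema details into text a language model can read. The sketch below is illustrative only: the `Column`, `Table`, and `build_schema_context` names are hypothetical and do not describe Querio's internal format.

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    name: str
    dtype: str
    description: str = ""


@dataclass
class Table:
    name: str
    columns: list[Column]
    primary_key: str = ""
    # Maps a local column to the "table.column" it references.
    foreign_keys: dict[str, str] = field(default_factory=dict)


def build_schema_context(tables: list[Table]) -> str:
    """Render tables, columns, keys, and descriptions as plain text."""
    lines = []
    for table in tables:
        lines.append(f"TABLE {table.name} (primary key: {table.primary_key or 'n/a'})")
        for col in table.columns:
            desc = f" -- {col.description}" if col.description else ""
            lines.append(f"  {col.name} {col.dtype}{desc}")
        for col_name, target in table.foreign_keys.items():
            lines.append(f"  FOREIGN KEY {col_name} -> {target}")
    return "\n".join(lines)
```

Column descriptions matter here: a line such as `ltv_usd NUMERIC -- customer lifetime value in USD` is what lets a model connect the phrase "customer lifetime value" to the right field.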

To handle the translation, Querio relies on a mix of advanced language models, including GPT-4, Llama 3.2, and Gemini. These models tailor the code to match your database's specific dialect and structure. Techniques like few-shot learning - where the system learns from examples of questions and their corresponding queries - help refine accuracy, especially for handling complex or niche logic.
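Few-shot learning, in practice, usually means placing a handful of question/query pairs in the prompt ahead of the new question. The sketch below only assembles such a prompt. The function name and prompt wording are assumptions, and the call to an actual language model is deliberately left out.

```python
def build_few_shot_prompt(
    question: str,
    examples: list[tuple[str, str]],
    dialect: str,
    schema_context: str,
) -> str:
    """Assemble a text-to-SQL prompt with worked examples before the new question."""
    parts = [
        f"Translate the question into {dialect} SQL.",
        "Schema:",
        schema_context,
        "",
    ]
    # Each example shows the model one question and the query that answers it.
    for example_question, example_sql in examples:
        parts += [f"Question: {example_question}", f"SQL: {example_sql}", ""]
    # The new question goes last, with the SQL left for the model to complete.
    parts += [f"Question: {question}", "SQL:"]
    return "\n".join(parts)
```

Naming the dialect in the first line is a simple way to steer the output toward, say, BigQuery or Postgres syntax.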

How Natural Language Processing (NLP) Works

Querio uses cutting-edge NLP techniques to connect everyday language with the technical requirements of database queries.

The system interprets your questions by passing them through embedding models, which convert each phrase into a numerical vector - usually with 384 or 1,024 dimensions - that captures its underlying meaning. Comparing these vectors lets the AI perform semantic searches across your database and locate relevant tables and columns, even if you don’t use their exact technical names.

A key component is the Schema Manager, which uses vector search to determine which tables are relevant to your request. This is especially useful in large databases with hundreds of tables, ensuring the AI focuses on the correct data sources. Additionally, the platform tracks conversational history, so follow-up queries retain context from earlier interactions, saving you from repeating details.
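The table-selection step can be sketched as a similarity ranking. To stay self-contained, the example below swaps the dense embedding model for a toy word-count vector, so it only matches words that literally appear in a table description; a real embedding model would also match synonyms. All names here are hypothetical and not Querio's Schema Manager API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_tables(question: str, table_descriptions: dict[str, str], top_k: int = 3) -> list[str]:
    """Return up to top_k table names, most similar first, skipping zero-score tables."""
    query_vec = embed(question)
    scored = [
        (cosine(query_vec, embed(description)), name)
        for name, description in table_descriptions.items()
    ]
    # Highest score first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in scored[:top_k] if score > 0]
```

In a database with hundreds of tables, only the top-ranked few would be passed on to the language model, which keeps the prompt small and focused.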

Ensuring Accuracy in Query Results

Querio prioritizes accuracy by emphasizing inspectability and validation. Every query it generates undergoes syntax checks before execution. If an error arises, the system identifies the issue and suggests potential fixes. You also have the option to review, edit, and refine the code before running it.
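A pre-execution syntax check can be approximated by compiling the query against an empty copy of the schema. The sketch below uses SQLite's `EXPLAIN` through Python's sqlite3 module as a stand-in; warehouse dialects differ, so treat this as an illustration of the idea and not as Querio's validator. The `validate_sql` name is hypothetical.

```python
import sqlite3


def validate_sql(sql: str, schema_ddl: str) -> tuple[bool, str]:
    """Compile a query against an empty in-memory schema without running it.

    Returns (True, "") if the query compiles, otherwise (False, error message).
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(schema_ddl)  # create empty tables only
        # EXPLAIN makes SQLite prepare the statement and report its plan,
        # so syntax errors and unknown tables or columns surface here.
        conn.execute(f"EXPLAIN {sql}")
        return True, ""
    except sqlite3.Error as exc:
        return False, str(exc)
    finally:
        conn.close()
```

The returned error text (for example, a message naming an unknown column) is the kind of signal that could be fed back to the model when asking it to suggest a fix.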

For critical business decisions, Querio supports human-in-the-loop verification. This means you can approve queries before they execute, ensuring they meet your expectations and organizational standards. You can even set custom rules - like requiring a GROUP BY clause whenever aggregate functions are mixed with plain columns - to align the outputs with your coding practices and business logic.
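A custom rule of this kind can be pictured as a small lint check that runs before a query reaches the approval step. The sketch below is deliberately naive: it uses regular expressions, only looks at the first SELECT list, and does not handle subqueries. The function names are hypothetical.

```python
import re

AGGREGATE = re.compile(r"\b(SUM|COUNT|AVG|MIN|MAX)\s*\(", re.IGNORECASE)


def select_items(sql: str) -> list[str]:
    """Split the first SELECT list on commas that sit outside parentheses."""
    match = re.search(r"\bSELECT\b(.*?)\bFROM\b", sql, re.IGNORECASE | re.DOTALL)
    if not match:
        return []
    items, depth, current = [], 0, ""
    for ch in match.group(1):
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
        if ch == "," and depth == 0:
            items.append(current.strip())
            current = ""
        else:
            current += ch
    items.append(current.strip())
    return [item for item in items if item]


def violates_group_by_rule(sql: str) -> bool:
    """True if aggregates are mixed with plain columns but GROUP BY is missing."""
    items = select_items(sql)
    has_aggregate = any(AGGREGATE.search(item) for item in items)
    has_plain_column = any(not AGGREGATE.search(item) for item in items)
    has_group_by = re.search(r"\bGROUP\s+BY\b", sql, re.IGNORECASE) is not None
    return has_aggregate and has_plain_column and not has_group_by
```

A query that fails the check would be held back and flagged for the reviewer instead of being executed.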

To further enhance precision, it’s helpful to include detailed column descriptions in your database schema. These descriptions help the AI resolve ambiguities and produce more accurate queries. For complex metrics like "gross margin" or "churn rate", providing the exact calculation logic in your prompt can guide the system toward the correct interpretation. Since Querio operates with read-only access, there’s no risk of accidental data changes - it can only retrieve information, not modify it.

Semantic Context Layer for Consistent Metrics

Querio's semantic context layer acts as a bridge between raw data and business users, translating complex technical terms (like amt_ttl_pre_dsc) into clear, understandable business language such as "Gross Revenue" [6]. By defining metrics, business rules, and table relationships once, this layer ensures every query adheres to the same logic [5][6]. It forms a foundation that supports standardized and accessible insights throughout the organization.

This layer is built on three core components: Facts (row-level data), Metrics (aggregated measures), and Dimensions (categorical attributes) [6]. Whenever you ask Querio a question, the AI uses these predefined definitions to keep calculations consistent. For instance, if "Net Revenue" is defined as SUM(gross_revenue * (1 - discount)), that exact formula is applied across all reports, dashboards, and analytics views [6].
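The "define once, reuse everywhere" idea can be sketched as a metric object that every query is compiled from. The `Metric` class, the `compile_metric` helper, and the `orders` table name are hypothetical; only the Net Revenue formula comes from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    expression: str  # the single agreed-upon aggregation formula
    table: str


# Defined once; every report compiles its SQL from this object.
NET_REVENUE = Metric(
    name="net_revenue",
    expression="SUM(gross_revenue * (1 - discount))",
    table="orders",  # hypothetical fact table
)


def compile_metric(metric: Metric, dimensions: list[str]) -> str:
    """Build a query for the metric, grouped by the requested dimensions."""
    select_list = ", ".join(dimensions + [f"{metric.expression} AS {metric.name}"])
    sql = f"SELECT {select_list} FROM {metric.table}"
    if dimensions:
        sql += " GROUP BY " + ", ".join(dimensions)
    return sql
```

Because the formula lives in one place, changing it in `NET_REVENUE` changes every query compiled afterwards, which is the behavior the semantic layer is meant to guarantee.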

The impact of semantic layers is significant. Research indicates they can boost AI accuracy from 16% to 54% [7]. In 2024, Toyota North America adopted a semantic layer, enabling its 35+ companies to uncover insights 21 times faster while cutting infrastructure costs [7]. Similarly, Tyson Foods leveraged this approach to empower its 144,000 employees to access data across platforms like Google BigQuery, Hadoop, and AWS Redshift, improving their responsiveness to supply chain fluctuations [7].

Defining Business Metrics Once

The semantic layer allows data teams to create a single source of truth for every key metric. Instead of multiple versions of metrics like "churn rate" or "customer lifetime value", each metric is defined once with precise logic, filters, and aggregation rules - ensuring consistency across the board [6].

This "Don't Repeat Yourself" principle eliminates errors caused by departments calculating the same metric differently. Updates to a metric's definition are automatically applied across dashboards, reports, and analytics tools, simplifying governance and reducing technical debt [6]. By centralizing business logic, the semantic layer streamlines complex data relationships and ensures everyone works from the same playbook [6].

Standardized metrics not only improve accuracy but also lay the groundwork for better collaboration.

Improving Cross-Team Collaboration

A shared semantic layer fosters a "shared reality of metrics", enabling analysts and non-technical users to derive consistent insights [5]. Business users can ask questions in plain English without needing to know SQL or database structures, while analysts benefit from standardized definitions that save time and reduce confusion.

"With dbt's Semantic Layer, you can resolve the tension between accuracy and flexibility that has hampered analytics tools for years, empowering everybody in your organization to explore a shared reality of metrics." - dbt [5]

Access policies, such as row-level security and column-level masking, are defined once in the semantic layer and applied consistently across all users and tools [8][4]. This ensures teams - from finance to marketing to leadership - rely on the same trusted definitions, improving cross-functional decision-making. With a semantic layer in place, analysts spend 80% less time deciphering raw data syntax and meaning, freeing them to focus on deeper analysis [7].
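Row-level security and column-level masking can be illustrated with a small filter applied to query results. This is a simplified sketch: in a real deployment such policies are typically enforced inside the warehouse or semantic layer, not in application code, and the `apply_policies` helper and its field names are hypothetical.

```python
def apply_policies(
    rows: list[dict],
    user_region: str,
    masked_columns: set[str],
) -> list[dict]:
    """Keep only the user's region and mask sensitive columns."""
    visible = []
    for row in rows:
        # Row-level security: drop rows outside the user's region.
        if row["region"] != user_region:
            continue
        # Column-level masking: replace sensitive values with a placeholder.
        visible.append(
            {key: ("***" if key in masked_columns else value) for key, value in row.items()}
        )
    return visible
```

Defining the policy once and routing every tool through it is what keeps finance, marketing, and leadership views consistent with each other.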

Iterative Analysis in Built-in Notebooks

Building on Querio's straightforward query generation, the built-in notebook environment takes analysis to the next level by enabling a deeper, step-by-step approach. Once the AI generates initial SQL queries based on your natural language inputs, you can refine and expand on them seamlessly. This workflow blends SQL data retrieval with Python-based modeling and visualization, eliminating the need to export data or switch tools mid-process [9]. Plus, with live data warehouse connectivity, the system ensures secure and traceable operations throughout.

The notebook provides two AI-powered modes to suit different user preferences. In "Auto AI" mode, the system acts as an autonomous assistant, capable of writing, executing, and even self-correcting code [9]. For those who prefer more oversight, "Generate mode" allows users to review and approve suggested code blocks before execution [9]. You can further fine-tune your analysis with iterative commands like "limit to 1,000 results" or "show me customer retention rates by region", and the AI will adapt the queries accordingly [9].

Encounter a coding issue? No problem. The notebook includes AI-assisted debugging, where you can highlight problematic code and use the "Fix with AI" feature to resolve syntax or logic errors in SQL or Python automatically [10]. Additionally, the "Explain" commands break down complex queries into plain language, making it easier to understand their functionality before making edits [10]. This combination of automation and user control ensures your analysis remains both efficient and accurate. These iterative capabilities lay the groundwork for automated pattern detection, which is explored further in the next section.

The environment also supports advanced workflows, such as querying Python DataFrames with SQL syntax and integrating with tools like Vertex AI for MLOps processes [10]. To improve the AI's precision, you can add detailed column descriptions to your schema. This technique, known as "grounding", helps the system identify relevant patterns and generate more accurate insights [3].

Automating Pattern Detection and Anomaly Identification

Querio's AI simplifies the often tedious task of identifying trends and anomalies in your data. By leveraging table metadata, the platform automatically generates natural language questions and corresponding SQL queries, making it easier to analyze unfamiliar datasets [3]. This auto-detection capability also selects relevant data columns when creating visualizations, speeding up the journey from raw data to actionable insights.

For time-series analysis, the notebook comes equipped with machine learning functions like ARIMA_PLUS, which can handle seasonality, holidays, and outliers without requiring external tools [11]. These models learn your data’s normal behavior and flag deviations that might require further attention. Additionally, users can monitor organizational query activity to uncover broader trends and ensure the reliability of generated insights.
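The idea of learning normal behavior and flagging deviations can be shown with a much simpler technique than ARIMA_PLUS. The sketch below uses a plain z-score over the whole series, which ignores seasonality and holidays entirely; it is a swapped-in illustration, not the model described above. The function name and default threshold are assumptions.

```python
import statistics


def flag_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return indexes of points more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)  # population standard deviation
    if stdev == 0:
        return []  # a flat series has no outliers
    return [
        index
        for index, value in enumerate(values)
        if abs(value - mean) / stdev > threshold
    ]
```

For example, in a daily series that hovers around 10 with a single spike to 50, only the spike is flagged. A time-series model adds value when "normal" itself varies by weekday or season, which this sketch cannot capture.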

To enhance accuracy in pattern detection, it's essential to document metadata and data models within the platform [3]. This creates a single source of truth, providing the AI with the context it needs to minimize errors and improve anomaly detection. Teams that centralize their column definitions, key metrics, and joins are reported to produce reports up to 20 times faster and to save as much as 8 hours per week with real-time dashboards that track anomalies dynamically.

Applying Predictive Modeling for Business Decisions

Querio's notebook environment doesn’t stop at identifying trends - it also empowers users to predict future outcomes with built-in modeling tools. Functions like linear regression, K-means clustering, and matrix factorization run directly within your data warehouse, allowing you to develop and test models without moving data or learning new software [11].
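As a reminder of what the simplest of these models does, here is ordinary least-squares linear regression with one feature, written in plain Python. In the workflow described above the model would be trained inside the warehouse; this local sketch, with its hypothetical `fit_line` and `predict` helpers, only illustrates the underlying calculation.

```python
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], x: float) -> float:
    """Apply a fitted (slope, intercept) pair to a new x value."""
    slope, intercept = model
    return slope * x + intercept
```

A typical use would be fitting monthly revenue against month number and calling `predict` for the next month, then checking the fit against held-out data before trusting it.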

This capability is transforming how businesses operate. Instead of reacting to problems, companies are adopting proactive strategies. For example, sentiment analysis applied to customer feedback can highlight service issues or product frustrations in real time, enabling teams to address them before they escalate [12]. Similarly, forecasting churn rates or customer expectations allows businesses to intervene early, protecting both customer satisfaction and revenue.

The iterative nature of the notebook environment makes it easy to refine predictive models. Start with a basic model, assess its performance, and use conversational prompts to guide the AI in improving it. To ensure data integrity, the platform includes guardrails that prevent AI agents from executing "write" queries during autonomous exploration [9]. This safety measure allows teams to experiment with predictive techniques confidently, knowing their source data remains untouched.
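A statement-level guardrail of this kind can be sketched as a check that runs before any AI-generated query is executed. The version below is intentionally conservative and keyword-based, so it will also reject a harmless query whose string literal happens to contain a word like DELETE. It is an assumption about how such a guard might look, not Querio's implementation.

```python
import re

WRITE_KEYWORDS = re.compile(
    r"\b(INSERT|UPDATE|DELETE|MERGE|DROP|ALTER|CREATE|TRUNCATE|GRANT|REVOKE)\b",
    re.IGNORECASE,
)


def is_read_only(sql: str) -> bool:
    """Allow a single SELECT or WITH statement that contains no write keywords."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        return False  # reject multi-statement input outright
    if not re.match(r"(SELECT|WITH)\b", statement, re.IGNORECASE):
        return False
    return WRITE_KEYWORDS.search(statement) is None
```

A guard like this complements read-only credentials: the credentials stop writes at the database, while the check stops them earlier and gives the user a clearer error.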

Delivering Insights via Dashboards and Embedding

After polishing your analysis in the notebook environment, the next step is sharing those insights with stakeholders. Thanks to Querio's dynamic dashboards and embedded analytics, powered by a live data connection, stakeholders always have access to the most up-to-date information. No need for manual exports, outdated snapshots, or wrestling with version control issues - every view reflects the latest data straight from your live data warehouse.

Querio’s platform also supports role-based dashboards tailored to each audience’s needs [12]. For example, an executive might view high-level KPIs and trend summaries, while a regional manager dives into granular metrics for their territory. Meanwhile, frontline teams can monitor operational impacts in real time. This customization ensures every stakeholder gets exactly what they need without sifting through irrelevant data. Additionally, trigger-based scheduling automates report delivery, sending insights when specific business events occur - ensuring that insights arrive precisely when they’re actionable [14].

Creating Dynamic Dashboards for Stakeholders

Building a dashboard in Querio is seamless, starting with the AI-generated queries already tested in the notebook. The system automatically selects relevant data columns for visualizations, and you can fine-tune charts or tables using simple conversational prompts. Since these dashboards are directly connected to your data warehouse, updates happen instantly and automatically.

Real-time monitoring and alerts transform dashboards into proactive decision-making tools [12]. For instance, if customer sentiment suddenly shifts or sales dip in a critical region, stakeholders are notified immediately. One example: a Development team manager used a dynamic turnover analysis template that triggered after employee exits. The AI identified other employees at high risk of leaving based on patterns like tenure and job role, enabling the manager to take action and improve retention [14].

To prevent "report fatigue", you can apply trigger filters that only send updates when specific conditions are met [14]. This way, stakeholders aren’t overwhelmed by routine updates and can focus on insights requiring immediate attention. Dynamic templates further enhance relevance by automatically adjusting data for each recipient - for instance, a manager sees metrics for their team, not the entire organization [14]. Beyond dashboards, Querio makes it easy to integrate insights directly into customer-facing applications.
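Trigger filters and dynamic templates can be combined in a few lines: evaluate a condition per team, and send each manager only their own team's numbers when the condition holds. The `Trigger` class, the `reports_to_send` helper, and the metric names are hypothetical illustrations.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Trigger:
    name: str
    condition: Callable[[dict], bool]  # receives one team's metrics


def reports_to_send(
    trigger: Trigger,
    metrics_by_team: dict[str, dict],
    recipients: dict[str, str],  # manager -> team
) -> dict[str, dict]:
    """Return manager -> metrics, only for teams where the trigger fires."""
    outgoing = {}
    for manager, team in recipients.items():
        team_metrics = metrics_by_team.get(team)
        # Dynamic template: each manager sees only their own team's data.
        # Trigger filter: nothing is sent unless the condition is met.
        if team_metrics is not None and trigger.condition(team_metrics):
            outgoing[manager] = team_metrics
    return outgoing
```

With a turnover trigger such as "two or more exits in the last 30 days", a manager whose team had no exits receives nothing, which is how report fatigue is avoided.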

Embedding Analytics for End Users

Querio’s embedding capabilities allow you to deliver governed insights directly within your customer-facing applications. This eliminates the need for users to toggle between tools or request tailored reports from your analytics team. Embedded analytics maintain the same semantic context layer and data governance as internal dashboards, ensuring consistency and accuracy across all platforms - essential for delivering reliable insights.

You can choose an embedding method that fits your audience’s needs. Non-technical users, for instance, can simply ask questions in plain English, and the system generates precise SQL queries [4][3][2]. This conversational approach addresses a common challenge in traditional business intelligence tools: low adoption rates, with only about 25% of users reporting regular use [2].

To ensure accuracy in embedded insights, it’s essential to ground AI responses in thorough data profiling and clear table/column descriptions [3]. As Microsoft highlights:

"Model owners need to invest in prepping their data for AI to ensure Copilot understands the unique business context... Without this prep, Copilot can struggle to interpret data correctly" [13].

This preparation is critical for delivering reliable analytics, especially to external users who expect accurate, immediate answers. When data is properly prepared, it minimizes the risk of AI misinterpretations or "hallucinations", ensuring trust and reliability in every insight shared.

Key Takeaways

AI-powered insight generation has made accessing and analyzing data more straightforward by eliminating technical hurdles while ensuring accuracy and compliance. Querio connects directly to live data warehouses like Snowflake, BigQuery, and Postgres, meaning your queries always reflect up-to-the-minute business data - no need for outdated data copies or snapshots.

This setup is paired with a natural language interface that converts plain English into SQL or Python queries, addressing the low adoption of traditional BI tools, where only about 25% of users report regular use [2]. With a text-to-SQL accuracy of about 90% [1], users can generate the insights they need for critical decisions without coding skills.

A semantic context layer ensures that metrics, joins, and business terms are consistent across the board, so everyone from executives to managers sees the same reliable insights. Plus, the built-in notebook allows users to refine queries, identify patterns, and even apply predictive modeling - all within a single, continuous workflow.

Querio also offers dynamic dashboards, scheduled reports, and embedded analytics to deliver real-time insights where they’re needed most. By combining live connections, automated query generation, and a shared context layer, Querio creates a seamless analytics workflow that empowers teams to make fast, informed decisions without compromising on precision.

FAQs

How does Querio protect data when connecting to live data warehouses?

Querio connects with encrypted, read-only credentials, so it can retrieve data but cannot modify, delete, or corrupt it, and your data stays inside your own infrastructure. The safeguards described earlier in this article include:

TLS 1.3 for data in transit, with RSA key pairs (2048 or 4096-bit) securing the connection.

AES-256 encryption for stored credentials and metadata.

IP whitelisting and SSH tunneling, so the database is never exposed to the public internet.

For details beyond these safeguards, consult Querio's official documentation or contact the Querio support team directly.

How does the semantic context layer ensure consistent metrics in AI insights?

The semantic context layer acts as the backbone for defining business logic, transforming raw data into clear, consistent metrics like revenue, costs, or conversion rates. By standardizing how these metrics are defined and calculated, it eliminates discrepancies across reports and ensures that AI-generated insights are both accurate and aligned with your business goals.

This layer holds critical metadata, including dimensions, hierarchies, and formulas, which AI systems rely on to analyze data and create visualizations. Since it’s shared across the entire analytics platform, any changes - like updating a fiscal year - are instantly reflected in all dashboards and reports. This guarantees a seamless flow of consistent and reliable insights throughout the system.

How does Querio's AI process complex natural language queries?

Querio’s AI takes complex natural language inputs - whether spoken or typed - and converts them into a structured format it can process. Powered by advanced large language models (LLMs), it breaks down user queries to identify intent, key details, and context. Then, it aligns this information with its underlying data structure. This approach enables the system to tackle multi-step questions, apply conditional logic, and perform comparisons, all without requiring users to understand technical query language.

Once the query is translated, the AI executes the generated SQL or Python against your live data warehouse. Built-in machine learning functions can then be used to detect patterns, trends, and anomalies. The results are presented as clear, actionable insights, often paired with visual elements to enhance understanding. By blending natural language processing with real-time analytics, Querio helps users make fast, informed decisions with confidence.