5 Metrics to Test Text-to-SQL Accuracy

Business Intelligence

Oct 5, 2025

Learn essential metrics for evaluating text-to-SQL accuracy to enhance business intelligence workflows and ensure reliable insights.

Text-to-SQL tools are only as good as their accuracy. When businesses rely on these tools to turn natural language into SQL queries, even small errors can lead to costly consequences. Measuring performance is essential to ensure accurate results and maintain trust in business intelligence workflows.

Here are five key metrics to evaluate text-to-SQL systems:

Exact Match Accuracy: Compares generated SQL with a reference query, character by character. It's simple but doesn't account for valid variations in syntax.

Execution Accuracy: Focuses on whether the generated SQL produces the correct results when run, regardless of syntax differences.

Semantic Testing: Evaluates the system's understanding of query intent, ensuring it handles complex logic and varied phrasing accurately.

Functional Correctness: Uses graph-based testing to verify that the query performs the intended operations, even if structured differently.

Speed Metrics: Measures how quickly queries execute, ensuring they work efficiently with large datasets.

Each metric serves a distinct purpose, from ensuring basic functionality to handling complex business questions. By combining these methods, businesses can confidently assess and improve the reliability of text-to-SQL tools. This is particularly critical when deploying a conversational AI data analyst to ensure non-technical users receive valid data.

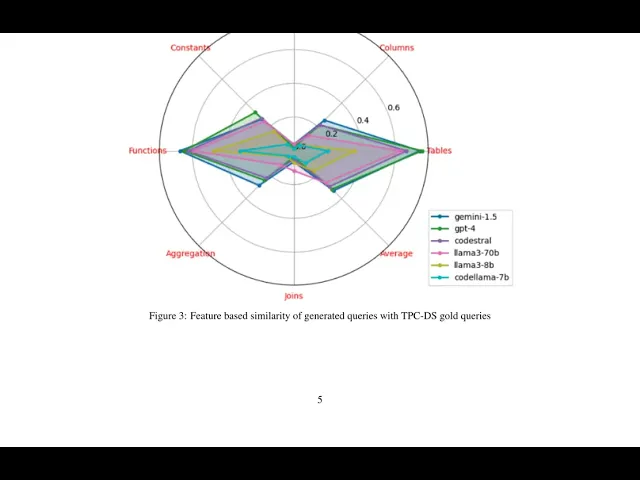

Evaluating LLMs for Text-to-SQL Generation With Complex SQL Workload Limin Ma, Ken Pu, Ying Zhu 4min

Exact Match Accuracy

Exact Match accuracy is one of the simplest ways to evaluate text-to-SQL performance. It works by comparing the generated SQL queries to reference queries character by character, resulting in a clear pass or fail. While this strict approach ensures clarity, it doesn't always align with the needs of real-world business intelligence (BI) applications.

What Exact Match Measures

At its core, Exact Match focuses on a strict, syntax-based comparison. It checks whether the SQL query generated by a text-to-SQL tool matches the reference query exactly, down to every character and symbol.

For example, consider a standardized question like "Show me total sales for Q3 2024." The test set stores one reference SQL query for this question.

For the tool to pass, it must generate this exact query. Even minor differences - such as column ordering, formatting, or an alternative but valid syntax - would result in failure. While this method ensures the generated query adheres to a baseline structure, it doesn't account for the nuances or flexibility often required in BI scenarios.
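The check itself is trivial to implement, which is part of its appeal. Below is a minimal sketch of Exact Match scoring in Python. It assumes a hypothetical `sales` table with `amount` and `sale_date` columns, neither of which appears in the original. The strict version compares raw strings. The `normalized_match` variant is a common relaxation, not part of the strict metric: it collapses whitespace and case and drops a trailing semicolon before comparing.

```python
import re

# Hypothetical reference query for "Show me total sales for Q3 2024".
REFERENCE = (
    "SELECT SUM(amount) AS total_sales FROM sales "
    "WHERE sale_date BETWEEN '2024-07-01' AND '2024-09-30'"
)


def exact_match(generated: str, reference: str) -> bool:
    """Strict Exact Match: pass only if the two strings are identical."""
    return generated == reference


def normalized_match(generated: str, reference: str) -> bool:
    """Relaxed variant: ignore case, runs of whitespace, and a trailing semicolon."""
    def norm(sql: str) -> str:
        sql = sql.strip().rstrip(";").strip()
        return re.sub(r"\s+", " ", sql).lower()
    return norm(generated) == norm(reference)


# The same query with different line breaks, casing, and a trailing semicolon.
generated = """select sum(amount) as total_sales
from sales
where sale_date between '2024-07-01' and '2024-09-30';"""

print(exact_match(generated, REFERENCE))       # False
print(normalized_match(generated, REFERENCE))  # True
```

Lowercasing also changes string literals, which is a deliberate tradeoff in this sketch. Even the normalized variant still rejects a query that lists columns in another order or uses a different alias. That is the rigidity discussed below.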

Benefits and Drawbacks

Benefits:

The biggest strength of Exact Match lies in its clarity. It provides a simple, objective way to verify whether the system produces the expected output. This makes it especially useful for initial validation and regression testing, where consistency is key. Teams can quickly spot deviations in system behavior after updates or changes.

Drawbacks:

However, its binary nature - it's either correct or incorrect - makes it less practical for real-world applications. For instance, a query missing a single comma is treated the same as one that's entirely wrong. Moreover, Exact Match doesn't recognize semantically equivalent queries. Two queries that differ only in aliases, clause order, or the way a condition is written can return identical results, yet Exact Match rejects whichever one doesn't match the reference exactly.

This limitation becomes especially apparent when evaluating text-to-SQL query tools designed to handle varied user inputs and generate flexible SQL solutions. For teams focused on nuanced BI needs, this lack of flexibility can be a significant drawback.

When to Use Exact Match

Exact Match works best in controlled environments with standardized query patterns. It’s a great tool during early development phases when teams need to establish baseline performance. For example, platforms like Querio use Exact Match to verify that query structures remain consistent after updates to systems that connect to live data warehouses like Snowflake, BigQuery, and Postgres.

This metric is also effective for regression testing. Teams can maintain a suite of reference queries to ensure that changes to the text-to-SQL system don’t disrupt existing functionality. However, relying solely on Exact Match can hinder progress, as it doesn’t allow systems to evolve and adapt to new query patterns.
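The regression workflow can be sketched as a loop over a stored suite of reference queries. In the sketch below, `updated_system` is a hypothetical stand-in for the text-to-SQL system under test, and the suite entries are illustrative.

```python
from typing import Callable, Dict, List

# Illustrative regression suite: natural-language question -> approved reference SQL.
SUITE: Dict[str, str] = {
    "How many customers do we have?": "SELECT COUNT(*) FROM customers",
    "List all product names": "SELECT name FROM products",
}


def run_regression(generate_sql: Callable[[str], str],
                   suite: Dict[str, str]) -> List[str]:
    """Return the questions whose generated SQL no longer matches the reference."""
    regressions = []
    for question, reference in suite.items():
        if generate_sql(question).strip() != reference.strip():
            regressions.append(question)
    return regressions


# Hypothetical system after an update: one answer drifted from its reference.
def updated_system(question: str) -> str:
    if question == "List all product names":
        return "SELECT product_name FROM products"
    return SUITE[question]


print(run_regression(updated_system, SUITE))  # ['List all product names']
```

A flagged question is not automatically a bug. The drifted query may still be valid, which is why the next paragraph recommends pairing this check with execution and semantic tests.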

To get a complete picture of performance, Exact Match should be paired with other evaluation methods, such as execution and semantic tests. While it’s a great starting point for identifying basic issues, additional metrics are essential for assessing how well the system handles real-world scenarios, where flexibility and semantic accuracy take precedence over strict syntactic precision.

Execution Accuracy

Execution Accuracy shifts the focus from syntax to outcomes, emphasizing the importance of delivering correct results. Instead of evaluating whether the SQL syntax matches perfectly, this metric compares the outputs of a generated SQL query and a reference query when run against the same database. If the results align, the query is deemed correct - even if the SQL syntax differs significantly.

How Execution Accuracy Works

The idea behind Execution Accuracy is straightforward: a query is correct if it delivers the intended results. To test this, both the generated SQL query and a reference query are executed on the same dataset, and their result sets are compared.

For example, imagine a business user asks, "What were our top 5 products by revenue last quarter?" The reference query might use specific JOINs and aliases, while the generated query could rely on subqueries or a different table sequence. As long as both queries return the same five products with identical revenue figures, Execution Accuracy considers them equivalent.

This approach captures semantic equivalence, which goes beyond syntax. Two queries can look entirely different yet produce the same results. For instance, both SELECT * FROM sales WHERE amount > 1000 and SELECT * FROM sales WHERE 1000 < amount will retrieve the same data. Execution Accuracy uses set-based comparisons, ensuring that rows and columns match while ignoring order unless explicitly specified. This makes it especially useful for business intelligence (BI) applications, where users prioritize accurate insights over the specifics of SQL structure.
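Below is a minimal sketch of Execution Accuracy using Python's built-in `sqlite3` module and an in-memory database. The `sales` table and its rows are made up for illustration. Rows are compared as multisets, so row order is ignored and duplicates are still counted. Passing `ordered=True` covers questions where order matters.

```python
import sqlite3
from collections import Counter


def execution_match(conn: sqlite3.Connection, generated: str, reference: str,
                    ordered: bool = False) -> bool:
    """True if both queries return the same rows on the same database."""
    try:
        gen_rows = conn.execute(generated).fetchall()
    except sqlite3.Error:
        return False  # a query that fails to run cannot be execution-correct
    ref_rows = conn.execute(reference).fetchall()
    if ordered:
        return gen_rows == ref_rows  # e.g. the question asks for a ranked list
    return Counter(gen_rows) == Counter(ref_rows)  # multiset comparison, order ignored


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [(1, 500.0), (2, 1500.0), (3, 2500.0)])

print(execution_match(conn,
                      "SELECT * FROM sales WHERE 1000 < amount",
                      "SELECT * FROM sales WHERE amount > 1000"))  # True
print(execution_match(conn,
                      "SELECT * FROM sales WHERE amount >= 500",
                      "SELECT * FROM sales WHERE amount > 1000"))  # False
```

Column order still matters in this sketch. A fuller harness might also align columns by name before comparing.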

Common Testing Problems

Despite its strengths, Execution Accuracy isn't without challenges. Several factors can complicate its reliability:

Data formatting differences: A reference query might return a date as "2024-10-05", while the generated query outputs "Oct 5, 2024." Both are correct, but automated comparison tools may flag them as mismatched.

Floating-point precision: Financial calculations often highlight this issue. For example, one query may return $1,234.567 while another rounds the same figure to $1,234.57. The two agree to the cent, but a strict automated comparison treats them as different values, producing a false negative.

Incomplete test data: If the test database lacks edge cases - like null values, duplicates, or unusual data types - queries might pass testing but fail in more complex, real-world scenarios.

Performance differences: A generated query might take significantly longer to execute than a reference query, even if it produces the same results. While Execution Accuracy doesn’t measure performance, slow queries can be problematic in live environments.

Database state dependencies: When tests are run on a database undergoing updates, timing differences can cause correct queries to produce inconsistent outputs.
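The first two problems in this list are often handled by normalizing values before rows are compared. The sketch below makes two assumptions you would adjust per dataset: money values only need cent precision, and dates arrive in one of two known formats. Rounding to a fixed precision can still disagree for values sitting right on a rounding boundary.

```python
from collections import Counter
from datetime import datetime

# Assumed date formats seen in query outputs; extend for your data.
DATE_FORMATS = ("%Y-%m-%d", "%b %d, %Y")


def normalize_value(value):
    """Map equivalent representations of a value onto one canonical form."""
    if isinstance(value, float):
        return round(value, 2)  # assumption: cent precision is enough
    if isinstance(value, str):
        for fmt in DATE_FORMATS:
            try:
                return datetime.strptime(value, fmt).date().isoformat()
            except ValueError:
                continue  # not this format; try the next one
    return value


def rows_equivalent(gen_rows, ref_rows) -> bool:
    """Multiset comparison of rows after normalizing every value."""
    def norm(rows):
        return Counter(tuple(normalize_value(v) for v in row) for row in rows)
    return norm(gen_rows) == norm(ref_rows)


print(rows_equivalent([("Oct 5, 2024", 1234.567)],
                      [("2024-10-05", 1234.57)]))  # True
```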

Why It Matters for BI Platforms

For BI platforms that rely on live data querying, Execution Accuracy is the benchmark for success. Business users care about getting accurate charts, reports, and insights - not whether the SQL code follows a particular style. A query that is structured differently from the reference but delivers correct results is far more valuable than one that looks textbook-perfect but returns incorrect data.

Take Querio, for example. When integrated with databases like Snowflake, BigQuery, or Postgres, its natural-language agent must generate SQL that works reliably with live data. If a user asks, "Show me customer churn by region this month", they expect accurate visualizations in seconds, regardless of how the SQL query is structured.

This result-oriented approach is essential for real-time analytics, where datasets are constantly changing. Syntax-based testing can't tell whether a query returns the right data. Execution Accuracy judges queries by their output instead, as long as the generated and reference queries run against the same snapshot of the data.

Additionally, Execution Accuracy helps improve text-to-SQL systems over time. By identifying patterns in query failures, development teams can pinpoint the types of business questions that lead to errors. This detailed feedback enables more precise refinements compared to syntax-focused metrics.

Together with Exact Match and semantic testing, Execution Accuracy provides a well-rounded evaluation framework, ensuring that BI workflows deliver both accurate results and functional reliability. For data teams implementing governance layers, it’s a critical tool for validating that contextual improvements lead to better query outcomes.

Semantic Testing with Test Suites

Semantic testing goes beyond surface-level evaluations, diving into whether text-to-SQL systems truly grasp the meaning behind natural language questions. It’s not just about generating functional code - it’s about understanding. Test suites play a key role here, offering structured evaluation frameworks that uncover gaps in reasoning and comprehension.

Understanding Semantic Testing

Semantic testing measures how well a system understands the intent behind user queries. Unlike basic syntax matching or result validation, this approach tests the system’s ability to interpret complex relationships, handle ambiguous language, and maintain logical consistency across various scenarios.

The difference lies in the depth of understanding. For example, when someone asks, "Show me customers who haven't placed orders in the last 90 days", semantic testing ensures the system correctly interprets the negative condition, applies the right time range, and understands the connection between customers and orders. It’s not just about generating a query - it’s about reflecting a true grasp of the underlying business logic.
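One way to make this example testable is to seed a small database with rows that separate the correct reading from plausible wrong ones, in the spirit of test-suite evaluation. The schema, the fixed "today" of 2025-10-05, and the flawed query are all hypothetical. The flawed version looks for old orders instead of the absence of recent ones. As a result, it misses customers who never ordered and wrongly includes customers who ordered both long ago and recently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT);
INSERT INTO customers VALUES (1, 'Alice'), (2, 'Bob'), (3, 'Cara'), (4, 'Dan');
-- Alice: recent order only. Bob: old order only. Cara: never ordered.
-- Dan: one old and one recent order.
INSERT INTO orders (customer_id, order_date) VALUES
  (1, '2025-09-20'), (2, '2025-01-10'), (4, '2025-01-10'), (4, '2025-09-01');
""")

# Intended meaning: no order at all in the 90 days before the fixed "today".
CORRECT = """
SELECT c.name FROM customers c
WHERE NOT EXISTS (
  SELECT 1 FROM orders o
  WHERE o.customer_id = c.id
    AND o.order_date >= date('2025-10-05', '-90 days'))
"""

# Plausible misreading: "customers with some order older than 90 days".
FLAWED = """
SELECT DISTINCT c.name FROM customers c
JOIN orders o ON o.customer_id = c.id
WHERE o.order_date < date('2025-10-05', '-90 days')
"""


def names(sql: str) -> list:
    """Sorted customer names returned by a query."""
    return sorted(row[0] for row in conn.execute(sql))


print(names(CORRECT))  # ['Bob', 'Cara']
print(names(FLAWED))   # ['Bob', 'Dan']
```

With Bob-style customers alone, the flawed query would pass. Cara and Dan are the rows that expose the misreading.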

Test suites evaluate more than just correctness; they assess consistency across variations and performance in edge cases. This approach distinguishes between systems that merely memorize patterns and those capable of genuine comprehension.

In business intelligence, where systems handle diverse and often nuanced questions, semantic accuracy is critical. A system might easily answer, "What’s our monthly revenue?" but falter with, "Which months had revenue below our quarterly average?" The latter requires a deeper understanding of aggregation, comparison, and temporal logic - precisely the kind of insight semantic testing aims to measure. This is where test suites become indispensable for improving accuracy and reliability.

Why Test Suites Work Better

Relying on individual query tests can overlook systematic weaknesses that only emerge through broader evaluations. Test suites address this by grouping related queries to target specific capabilities, revealing performance patterns that isolated tests might miss.

Test suites are particularly effective because they assess:

Varied phrasing: Ensuring the system understands different ways of asking the same question.

Progressive complexity: Starting with straightforward queries and gradually introducing more challenging ones.

Cross-domain validation: Testing the system’s ability to handle queries across different subject areas.

For instance, a customer analysis test suite might start with a simple query like "List all customers", then progress to "Show customers from California", followed by "Display California customers who made purchases last quarter", and finally, "Find California customers whose quarterly spending exceeded their annual average." This gradual increase in complexity highlights where the system’s understanding begins to falter.

Cross-domain validation is another critical aspect. A system might excel at sales-related queries but struggle with inventory-related ones, even if the underlying SQL logic is similar. Test suites expose these domain-specific weaknesses, helping teams determine whether their text-to-SQL tool has broad applicability or is limited to specific use cases.
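A test suite like this can be represented as tagged cases, so results can be broken down by domain and complexity level. The `SuiteCase` fields, the level numbering, the inventory question, and the recorded pass/fail values are illustrative assumptions. In practice, each `passed` flag would come from an execution or semantic check.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class SuiteCase:
    domain: str    # e.g. "customers", "inventory"
    level: int     # 1 = simplest question in the progression
    question: str
    passed: bool   # outcome of the check for this case


def pass_rates(cases: List[SuiteCase]) -> Dict[Tuple[str, int], float]:
    """Pass rate for each (domain, level) bucket."""
    buckets = defaultdict(list)
    for case in cases:
        buckets[(case.domain, case.level)].append(case.passed)
    return {key: sum(vals) / len(vals) for key, vals in buckets.items()}


def first_failing_level(cases: List[SuiteCase], domain: str) -> Optional[int]:
    """Lowest complexity level in a domain with any failure, or None."""
    failing = [c.level for c in cases if c.domain == domain and not c.passed]
    return min(failing) if failing else None


cases = [
    SuiteCase("customers", 1, "List all customers", True),
    SuiteCase("customers", 2, "Show customers from California", True),
    SuiteCase("customers", 3, "Display California customers who made purchases last quarter", True),
    SuiteCase("customers", 4, "Find California customers whose quarterly spending exceeded their annual average", False),
    SuiteCase("inventory", 1, "List all products", True),
    SuiteCase("inventory", 2, "Show products with fewer than 10 units in stock", False),
]

print(first_failing_level(cases, "customers"))  # 4
print(first_failing_level(cases, "inventory"))  # 2
```

In this made-up data, the inventory domain breaks down at a much simpler level than the customer domain. That is the kind of domain-specific weakness described above.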

Industry Standard Benchmarks

Benchmarks and text-to-SQL tool comparisons provide an additional layer of validation for semantic testing. The Spider benchmark is widely recognized as a leading standard for evaluating text-to-SQL systems. With over 10,000 questions spanning 200 databases across various domains, Spider prioritizes semantic understanding over simple syntax matching. Queries are categorized into difficulty levels - Easy, Medium, Hard, and Extra Hard - based on the SQL components involved. While Easy queries might involve basic SELECT statements, Extra Hard ones often require nested subqueries, multiple JOINs, and complex aggregations. This categorization helps teams pinpoint strengths and weaknesses in their systems.

Another key benchmark is WikiSQL, which focuses on single-table queries derived from natural language questions about Wikipedia tables. With over 80,000 examples, WikiSQL is particularly useful for testing how well a system handles diverse topics and table structures.

For platforms like Querio, which connect to enterprise databases such as Snowflake, BigQuery, or Postgres without duplicating data, these benchmarks are invaluable. They ensure that natural-language agents can handle a wide range of business queries. Strong performance on benchmarks like Spider and WikiSQL demonstrates a system’s ability to interpret complex business logic and generate accurate SQL.

Functional Correctness and Speed Metrics

While semantic testing focuses on understanding, functional correctness and speed metrics zero in on whether the generated SQL queries actually work as intended in real-world scenarios. Together, these metrics provide a comprehensive view of performance, ensuring that queries are not only accurate but also efficient and reliable in production.

Graph-Based Function Testing

Graph-based function testing takes SQL evaluation a step further by analyzing queries as computational graphs. In these graphs, each node represents a database operation, and edges illustrate how data flows between those operations.

This approach shines when identifying functionally equivalent queries that may differ in syntax. For instance, a query using a LEFT JOIN followed by a WHERE clause might produce the same results as one using an INNER JOIN, depending on the filtering conditions. Graph-based testing focuses on the logic behind the query, not just its syntax.
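The LEFT JOIN case can be checked directly on a small example with hypothetical `customers` and `orders` tables. When the WHERE clause filters on a column from the right-hand table, it discards the NULL-extended rows that the LEFT JOIN would otherwise keep, so both queries return the same rows. Remove that filter and the two queries diverge. Running queries on one dataset only demonstrates agreement on that data. Graph-based methods aim to reason about the query structure itself.

```python
import sqlite3
from collections import Counter

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER, region TEXT);
CREATE TABLE orders (customer_id INTEGER, amount REAL);
INSERT INTO customers VALUES (1, 'West'), (2, 'East'), (3, 'North');
INSERT INTO orders VALUES (1, 120.0), (1, 80.0), (2, 40.0);
""")

LEFT_WITH_FILTER = """
SELECT c.id, o.amount FROM customers c
LEFT JOIN orders o ON o.customer_id = c.id
WHERE o.amount > 50
"""
INNER_WITH_FILTER = """
SELECT c.id, o.amount FROM customers c
JOIN orders o ON o.customer_id = c.id
WHERE o.amount > 50
"""
LEFT_NO_FILTER = """
SELECT c.id, o.amount FROM customers c
LEFT JOIN orders o ON o.customer_id = c.id
"""
INNER_NO_FILTER = """
SELECT c.id, o.amount FROM customers c
JOIN orders o ON o.customer_id = c.id
"""


def same_rows(a: str, b: str) -> bool:
    """Compare two queries' results as multisets of rows."""
    return Counter(conn.execute(a).fetchall()) == Counter(conn.execute(b).fetchall())


print(same_rows(LEFT_WITH_FILTER, INNER_WITH_FILTER))  # True: NULL rows fail o.amount > 50
print(same_rows(LEFT_NO_FILTER, INNER_NO_FILTER))      # False: customer 3 survives only the LEFT JOIN
```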

The FuncEval methodology is a great example of this approach. It breaks SQL queries into their fundamental components and examines the relationships between them. Instead of requiring an exact syntax match, FuncEval checks whether the generated query performs the same logical operations as the expected result. This method is especially helpful for handling complex queries involving multiple joins, subqueries, or aggregations.

For business intelligence platforms like Querio, which process varied natural-language questions about warehouse data, graph-based testing offers dependable validation. Imagine a user asking, "Show me revenue by region for customers who made repeat purchases." Depending on how the system interprets the table relationships, it might generate different SQL structures. Graph-based testing ensures that regardless of syntax, the query correctly identifies repeat customers and aggregates their revenue by region.

Once functional correctness is verified, the next step is ensuring that the query runs efficiently.

Measuring Query Speed

Execution speed is a critical factor, especially for text-to-SQL systems working with large enterprise datasets. A query that takes 10 minutes to execute - even if correct - won't cut it in fast-paced environments where timely insights drive decisions.

Speed metrics evaluate performance across several dimensions. Execution time measures how long a query takes to complete, while resource utilization monitors CPU and memory usage during processing. These metrics help pinpoint inefficiencies in SQL generation and improve SQL query performance.
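Below is a minimal execution-time harness. It assumes an in-memory SQLite table filled with synthetic rows and reports the median of several runs, which is less sensitive to one-off noise than a single measurement. Resource metrics such as CPU and memory usually come from the warehouse's own query history or monitoring, and this sketch does not cover them.

```python
import sqlite3
import statistics
import time


def median_runtime(conn: sqlite3.Connection, sql: str, runs: int = 5) -> float:
    """Median wall-clock seconds to execute `sql` and fetch all rows."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        conn.execute(sql).fetchall()  # fetch everything so the full query cost is counted
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Synthetic data: 50,000 rows split across two regions.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)",
                 [(i, "West" if i % 2 else "East", float(i % 100)) for i in range(50_000)])

elapsed = median_runtime(conn, "SELECT region, SUM(amount) FROM sales GROUP BY region")
print(f"median runtime: {elapsed * 1000:.1f} ms")
```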

For example, a system might create a query with multiple nested subqueries when a single JOIN could achieve the same result. Or it might fail to use database indexes effectively, leading to slower execution. Speed testing identifies these inefficiencies and ensures that queries are optimized for performance.
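Index usage can be inspected through the database's query plan. The sketch below uses SQLite's `EXPLAIN QUERY PLAN`. Other engines have their own `EXPLAIN` commands and output formats. The `orders` table and `idx_orders_customer` index are hypothetical. Because the wording of plan rows varies between SQLite versions, the check only looks for a plan step that starts with `SEARCH` and mentions an index.

```python
import sqlite3


def plan_details(conn: sqlite3.Connection, sql: str) -> list:
    """Return the human-readable detail column (last column) of SQLite's query plan."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]


def uses_index_search(conn: sqlite3.Connection, sql: str) -> bool:
    """True if any plan step is an index search rather than a full table scan."""
    return any(d.startswith("SEARCH") and "INDEX" in d for d in plan_details(conn, sql))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
query = "SELECT * FROM orders WHERE customer_id = 42"

print(uses_index_search(conn, query))  # False: no index yet, so the table is scanned
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
print(uses_index_search(conn, query))  # True: the plan now searches the index
```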

Scalability is another key consideration. A query that performs well on a small dataset might grind to a halt when applied to millions of records. For business intelligence tools, where users expect instant visualizations, speed metrics ensure that natural-language inputs are translated into SQL queries that run efficiently, even at scale.

Balancing speed with accuracy is no small feat. Sometimes, the simplest SQL translation isn't the fastest. Effective text-to-SQL systems must generate queries that are both logically correct and optimized for performance.

Finally, ensuring that queries execute without errors is just as important as speed.

Checking Query Validity

Query validity ensures that the generated SQL not only looks correct but also executes without errors. While this might sound straightforward, it’s a significant challenge for text-to-SQL systems, especially when dealing with complex database schemas or ambiguous natural-language inputs.

Validity testing goes beyond checking syntax. It verifies that the referenced tables and columns exist in the database, that data types are compatible across operations, and that the query adheres to the specific SQL dialect used by the database. For instance, a query might be valid SQL but fail because it references a column that doesn’t exist or attempts operations with incompatible data types.
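A lightweight validity check can ask the database to compile a query without running it. In SQLite, prefixing a statement with `EXPLAIN` compiles it and returns its bytecode instead of executing the query. Syntax errors and references to missing tables or columns therefore surface as `sqlite3.Error`. This is a SQLite-specific sketch with a hypothetical `customers` schema. Because SQLite is dynamically typed, it will not catch most data-type mismatches that stricter warehouses reject.

```python
import sqlite3
from typing import Optional


def validity_error(conn: sqlite3.Connection, sql: str) -> Optional[str]:
    """Return the database's error message if `sql` cannot be compiled, else None."""
    try:
        conn.execute("EXPLAIN " + sql).fetchall()  # compile only; the query itself is not run
    except sqlite3.Error as exc:
        return str(exc)
    return None


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, region TEXT)")

print(validity_error(conn, "SELECT name FROM customers WHERE region = 'West'"))  # None
print(validity_error(conn, "SELECT full_name FROM customers"))  # no such column: full_name
print(validity_error(conn, "SELECT name FROM clients"))         # no such table: clients
```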

Schema validation is particularly important for platforms like Querio, which connect to live warehouse data. When users ask questions about their data, the system must ensure that the generated queries align with the actual database structure. This involves checking table relationships, column constraints, and even access permissions.

Runtime error detection adds another layer of validation. Some queries might pass initial checks but fail during execution due to issues like division by zero, null values, or constraint violations. Comprehensive validity testing simulates these scenarios to catch potential errors before they occur in production.
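Edge-case fixtures make runtime problems visible before production. The sketch below runs queries against a tiny hypothetical `campaigns` table that deliberately contains a zero denominator and a NULL. Engines differ here. PostgreSQL raises an error on division by zero, while SQLite silently returns NULL. For that reason, the harness treats both a raised error and an unexpected NULL in the output as findings.

```python
import sqlite3
from typing import List

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE campaigns (name TEXT, revenue REAL, clicks INTEGER);
INSERT INTO campaigns VALUES ('spring', 500.0, 100), ('summer', 0.0, 0), ('fall', NULL, 40);
""")


def runtime_findings(sql: str) -> List[str]:
    """Run `sql` on the edge-case fixture and describe anything suspicious."""
    try:
        rows = conn.execute(sql).fetchall()
    except sqlite3.Error as exc:
        return [f"error: {exc}"]
    return [f"NULL in row {row}" for row in rows if any(v is None for v in row)]


# Revenue per click: 'summer' divides by zero and 'fall' has NULL revenue.
print(runtime_findings("SELECT name, revenue / clicks FROM campaigns"))

# Excludes zero-click rows and treats missing revenue as 0 (an assumed business rule).
print(runtime_findings(
    "SELECT name, COALESCE(revenue, 0) / clicks FROM campaigns WHERE clicks > 0"))  # []
```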

For business intelligence workflows, query validity directly affects user trust. When natural-language inputs consistently produce executable SQL, users gain confidence in the system’s reliability. On the other hand, frequent query errors can erode trust and discourage adoption, making validity metrics a crucial part of evaluating text-to-SQL systems.

How to Use These Metrics Together

No single metric can fully capture text-to-SQL performance. To get a complete understanding, you need to combine different approaches. Each metric highlights unique strengths and weaknesses, so knowing how they complement each other - and when to prioritize specific ones - can help you align your evaluations with your business intelligence goals.

Using Multiple Metrics at Once

The best results come from combining exact match accuracy, execution accuracy, and semantic testing. Each of these metrics focuses on different aspects of system reliability, and together, they can reveal patterns that might go unnoticed if you only rely on one.

Start with execution accuracy as your foundation. This metric confirms that the system's queries run and return the correct results. After all, if queries fail or return the wrong data, users will be frustrated, no matter how well the system performs in other areas. Once you confirm that queries are running correctly, you can layer in exact match accuracy to see how closely the generated SQL adheres to expected syntax.

Semantic testing becomes particularly important when dealing with complex business questions. A query might not meet exact match criteria, but semantic evaluation can confirm that it still produces the correct results through alternative logic. This flexibility is crucial when users ask varied questions about warehouse data, where multiple valid SQL solutions might exist.

You can also add functional correctness metrics for a deeper analysis. These metrics assess whether queries perform the intended operations efficiently. For example, graph-based testing can identify when different SQL structures achieve the same logical outcome, while speed metrics ensure that even correct queries execute quickly enough to be practical.

By combining these metrics, you can uncover insights that individual measurements might miss. For instance, a system might excel in exact match accuracy but struggle with execution speed, revealing that while its queries are syntactically correct, they aren't optimized for performance. On the other hand, high execution accuracy with low exact match scores might suggest the system is finding creative but valid solutions for complex problems.
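Recording metrics per question makes these patterns easy to spot. The sketch below assumes each evaluated question already has an exact-match flag, an execution-match flag, and a runtime. The field names, sample results, and 2-second latency budget are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class QuestionResult:
    question: str
    exact_match: bool
    execution_match: bool
    runtime_s: float


def summarize(results: List[QuestionResult], latency_budget_s: float = 2.0) -> Dict[str, float]:
    """Aggregate per-question flags into rates, plus two diagnostic patterns."""
    n = len(results)
    return {
        "exact_match_rate": sum(r.exact_match for r in results) / n,
        "execution_accuracy": sum(r.execution_match for r in results) / n,
        # Correct results via different SQL: likely valid alternative solutions.
        "valid_alternative_rate": sum(r.execution_match and not r.exact_match
                                      for r in results) / n,
        # Correct results that miss the latency budget: right answer, too slow.
        "slow_but_correct_rate": sum(r.execution_match and r.runtime_s > latency_budget_s
                                     for r in results) / n,
    }


results = [
    QuestionResult("total sales Q3 2024", True, True, 0.4),
    QuestionResult("top 5 products by revenue", False, True, 0.9),
    QuestionResult("churn by region this month", False, True, 3.5),
    QuestionResult("months below quarterly average", False, False, 1.2),
]
print(summarize(results))
# {'exact_match_rate': 0.25, 'execution_accuracy': 0.75,
#  'valid_alternative_rate': 0.5, 'slow_but_correct_rate': 0.25}
```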

Choosing Metrics for Your Needs

Once you've defined the metrics, the next step is to determine which ones align best with your use case. Different business scenarios call for different priorities.

For real-time analytics, speed and execution accuracy are essential. Users looking for instant visualizations won't accept slow queries, even if they are semantically correct.

In exploratory data analysis, semantic testing takes precedence over exact match accuracy. Analysts often pose nuanced, complex questions that can be answered in multiple ways. In these cases, the system's ability to interpret intent and deliver correct results matters more than strict adherence to syntax.

For production dashboards and scheduled reports, reliability is key. Execution accuracy and functional correctness should be your top priorities, as these workflows depend on consistent performance across varying data volumes and time periods.

In enterprise environments with strict governance, exact match accuracy might be prioritized to ensure SQL generation adheres to established coding standards. This consistency simplifies auditing and troubleshooting while maintaining uniformity across teams.

Finally, consider the complexity of your data. Simpler datasets with straightforward schemas might only require basic execution accuracy testing. However, for complex enterprise warehouses with intricate joins, nested relationships, and diverse data types, comprehensive semantic testing and graph-based evaluations become essential.

Sharing Results with Your Team

When presenting metrics to your team, focus on translating technical data into clear business insights. Give technical teams the detailed failure cases they need for debugging, and emphasize reliability and speed for business users.

Use dashboard views to track trends over time instead of relying on isolated snapshots. This approach helps teams spot performance patterns tied to specific query types or data volumes. It's also helpful to track metrics separately for different user groups, as power users may have higher accuracy expectations than casual users.

Provide examples to make metrics more relatable. Instead of saying "85% semantic accuracy", explain it as "85 out of 100 business questions were answered correctly, while the remaining 15 required adjustments." This framing helps non-technical stakeholders understand the practical implications of the scores.
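It takes only a few lines to turn raw counts into this plain-language framing and to track results per release and user group instead of as one snapshot. The record layout, release labels, and group names below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical evaluation log entry: (release, user_group, answered_correctly)
Record = Tuple[str, str, bool]


def plain_language(correct: int, total: int) -> str:
    """Phrase a score the way non-technical stakeholders read it."""
    return (f"{correct} out of {total} business questions were answered correctly, "
            f"while the remaining {total - correct} required adjustments.")


def by_release_and_group(records: List[Record]) -> Dict[Tuple[str, str], str]:
    """One plain-language summary per (release, user group) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [correct, total]
    for release, group, ok in records:
        counts[(release, group)][0] += ok
        counts[(release, group)][1] += 1
    return {key: plain_language(c, t) for key, (c, t) in sorted(counts.items())}


records = [("v1", "power users", True), ("v1", "power users", False),
           ("v2", "power users", True), ("v2", "casual users", True)]
for key, line in by_release_and_group(records).items():
    print(key, line)

print(plain_language(85, 100))
```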

Benchmark comparisons can add valuable context. Compare your system's performance to previous versions, industry standards, or alternative natural language to SQL tools your team has tested. This helps stakeholders gauge whether the current performance meets business needs or requires improvement.

Lastly, document metric limitations clearly. For example, low exact match accuracy might not indicate poor performance - it could mean the system is finding valid alternative solutions. Similarly, execution accuracy might fluctuate based on database load or query complexity. Setting these expectations ensures your team interprets results accurately and maintains realistic goals for system performance. Together, these insights lay the groundwork for a strong evaluation strategy within your BI workflows.

Key Points About Text-to-SQL Accuracy

Evaluating text-to-SQL accuracy is critical for maintaining reliable business intelligence (BI) workflows. By focusing on multiple measurement methods, you can ensure confidence in your operations. Here's a breakdown of five key metrics that help gauge this accuracy.

Summary of the 5 Metrics

Exact match accuracy: This checks if the queries match the expected syntax perfectly, ensuring consistency across your organization.

Execution accuracy: This checks whether the generated query returns the same results as the reference query, regardless of syntax, making it essential for production environments.

Semantic testing with test suites: Using structured test suites and benchmarks like Spider and WikiSQL, this evaluates whether the system understands query intent across varied phrasing and increasing complexity, making it ideal for handling complex business questions.

Functional correctness: Graph-based testing ensures queries perform as intended, especially in large and intricate enterprise data warehouses.

Speed metrics: Measures how quickly queries execute, ensuring they deliver results fast enough for real-time analytics.

Using Accurate Tools in BI Work

Accurate text-to-SQL tools revolutionize BI workflows by turning plain-language questions into actionable insights within seconds. This allows teams to focus on analysis instead of wrestling with SQL syntax. For example, tools that integrate directly with your data warehouse - whether it’s Snowflake, BigQuery, or Postgres - eliminate the need for duplicating data, streamlining operations.

AI-native BI platforms like Querio showcase the power of accurate text-to-SQL translation. By layering essential context - such as joins, metrics, and glossaries - once, these tools ensure consistent accuracy across all queries. Over time, this approach compounds improvements, making workflows more efficient and reliable.

Regular testing and refinement of these metrics help teams maintain accuracy as data scales and questions grow more complex. The result? Faster, more dependable answers that drive smarter, real-world decisions.

FAQs

What’s the difference between Execution Accuracy and Exact Match Accuracy when evaluating text-to-SQL tools?

Execution Accuracy measures whether a SQL query delivers the correct results when run, regardless of how it's written. This approach emphasizes the accuracy of the output over the specific structure or syntax of the query.

In contrast, Exact Match Accuracy demands that the generated SQL query matches the reference query exactly, down to the syntax. While this ensures strict alignment, it can be overly rigid, as functionally identical queries may vary slightly in structure. Both metrics offer important perspectives but are designed to evaluate text-to-SQL tools in distinct ways.

Why is semantic testing important for accurately interpreting business queries in text-to-SQL systems?

Semantic testing is essential for making sure text-to-SQL systems produce SQL queries that accurately reflect the meaning of natural language inputs. This becomes particularly important when dealing with complex business queries that often include subtle terms and intricate relationships.

By prioritizing semantic accuracy, these systems are better equipped to handle different ways of phrasing and deliver results that users can depend on. Adding semantic evaluation ensures the generated SQL stays contextually relevant, aligning closely with the user's intent. This not only builds trust in the insights provided but also makes it easier to integrate these systems into everyday business processes.

Why are Speed Metrics important for real-time text-to-SQL analytics?

Speed metrics play a crucial role in real-time analytics by gauging how swiftly a text-to-SQL tool can convert a natural language query into precise results. In fast-paced business settings, quick access to insights is key for making informed decisions on the fly.

Metrics such as query execution time and response speed are central to this process. They ensure decision-makers can retrieve up-to-date data without unnecessary delays. Improved speed not only boosts operational efficiency but also elevates the user experience in live analytics scenarios.