From SQL to SPARQL: a new bridge for knowledge-driven BI

Business Intelligence

Jun 14, 2025

Explore the differences between SQL and SPARQL, two powerful query languages, and learn how combining them can enhance knowledge-driven business intelligence.

SQL and SPARQL are two powerful query languages, each excelling in different areas of data analysis. SQL is perfect for structured, table-based data, while SPARQL is designed for exploring relationships in graph-based, semantic data. Together, they bridge traditional BI and modern, knowledge-driven insights.

Key Takeaways:

SQL: Best for structured data, relational databases, and tasks like reporting, dashboards, and data transformation.

SPARQL: Ideal for analyzing relationships, linked data, and integrating multiple data sources.

Quick Comparison:

| Aspect | SQL | SPARQL |
|---|---|---|
| Data Structure | Fixed schema, relational tables | Schema-flexible, RDF graphs |
| Query Federation | Limited standard support | Built-in federated querying |
| Learning Curve | Familiar and widely known | Steep, requires RDF knowledge |
| Performance | Optimized for large datasets | May struggle with large graphs |
| Relationship Handling | Implicit through foreign keys | Explicit graph relationships |

SQL remains the go-to for traditional BI, while SPARQL enables deeper, interconnected insights. Businesses combining both can unlock the full potential of their data.

1. SQL

Data Model

SQL operates on a relational database model, organizing data into structured tables. By defining primary and foreign keys, as well as constraints, it ensures clear relationships between data while maintaining integrity.

In essence, data modeling with SQL involves crafting the structure, relationships, and rules for the data within a system [4]. Well-structured SQL models simplify complex data relationships, improve accessibility, and enhance confidence in data quality - key elements for making informed decisions [4].
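Here is a minimal sketch of these ideas using Python's standard-library `sqlite3` module. It defines two hypothetical tables, `customer` and `orders`, linked by a primary key/foreign key pair, and adds a CHECK constraint on amounts. The table and column names are illustrative assumptions. Note that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    amount      REAL CHECK (amount >= 0)
);
""")
conn.execute("INSERT INTO customer VALUES (1, 'Acme Corp')")
conn.execute("INSERT INTO orders VALUES (100, 1, 250.0)")  # valid: customer 1 exists

def rejects_orphan_order() -> bool:
    """Return True if the database refuses an order for a customer that does not exist."""
    try:
        conn.execute("INSERT INTO orders VALUES (101, 99, 10.0)")
    except sqlite3.IntegrityError:
        return True
    return False

rejected = rejects_orphan_order()
print("orphan order rejected:", rejected)
```

The integrity rule lives in the schema rather than in application code, so every tool that writes to these tables gets the same protection.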

This structured foundation also sets the stage for SQL's robust querying capabilities.

Query Patterns

SQL excels at combining and filtering data across multiple tables using joins - inner, left, right, and full - to address specific business needs.

The WHERE clause is SQL's go-to tool for filtering results based on defined conditions. It streamlines queries by restricting processing to the relevant rows. For more complex analysis, SQL supports subqueries and Common Table Expressions (CTEs). Depending on the database engine, rewriting a correlated subquery as a join can perform better.
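The small, hypothetical example below shows these patterns, again run through Python's `sqlite3` module:

- an inner join filtered with WHERE;
- a left join that keeps customers without orders;
- a CTE that names an intermediate aggregate.

The schema and data are invented for illustration. RIGHT and FULL joins are left out because older SQLite versions do not support them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
INSERT INTO customer VALUES (1, 'Acme', 'West'), (2, 'Globex', 'East'), (3, 'Initech', 'West');
INSERT INTO orders VALUES (10, 1, 120.0), (11, 1, 80.0), (12, 2, 200.0);
""")

# INNER JOIN + WHERE: revenue per customer in the West region.
inner = conn.execute("""
    SELECT c.name, SUM(o.amount) AS revenue
    FROM customer AS c
    JOIN orders AS o ON o.customer_id = c.customer_id
    WHERE c.region = 'West'
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

# LEFT JOIN keeps customers with no orders; COALESCE turns their NULL sum into 0.
left = conn.execute("""
    SELECT c.name, COALESCE(SUM(o.amount), 0) AS revenue
    FROM customer AS c
    LEFT JOIN orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

# CTE: name an intermediate result, then filter it in the outer query.
big_spenders = conn.execute("""
    WITH totals AS (
        SELECT customer_id, SUM(amount) AS total
        FROM orders
        GROUP BY customer_id
    )
    SELECT c.name, t.total
    FROM totals AS t
    JOIN customer AS c ON c.customer_id = t.customer_id
    WHERE t.total > 150
    ORDER BY c.name
""").fetchall()

print(inner)         # [('Acme', 200.0)]
print(left)          # [('Acme', 200.0), ('Globex', 200.0), ('Initech', 0)]
print(big_spenders)  # [('Acme', 200.0), ('Globex', 200.0)]
```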

When working with large datasets, query optimization is essential. Indexes on frequently filtered or joined columns can significantly speed up queries by avoiding full table scans [5][6]. Tools like EXPLAIN show how the database plans to execute a query, which makes them invaluable for identifying inefficiencies before they affect operations.
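The sketch below shows an index changing a query plan. It uses SQLite's `EXPLAIN QUERY PLAN`, the SQLite counterpart of EXPLAIN in engines such as PostgreSQL or MySQL. The table and index names are hypothetical. The exact plan text varies between SQLite versions, so the comments describe what is typically shown.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 500, float(i)) for i in range(10_000)],
)

QUERY = "SELECT amount FROM orders WHERE customer_id = ?"

def plan(sql: str) -> str:
    """Return SQLite's query-plan steps for `sql`, joined into one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    # The last column of each row is the human-readable 'detail' of a plan step.
    return " | ".join(row[-1] for row in rows)

before = plan(QUERY)  # typically a full scan, e.g. "SCAN orders"
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY)   # typically a search using idx_orders_customer

print("before:", before)
print("after: ", after)
```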

Syntax and Structure

SQL’s straightforward syntax makes it approachable for both technical and non-technical users [2]. Core commands such as SELECT, FROM, WHERE, and ORDER BY align closely with how business data is queried, making it easier for newcomers to learn.

To maximize readability and maintainability, it’s important to follow best practices: use clear naming conventions, add comments for complex logic, and apply proper indentation [2]. These habits are particularly useful in collaborative environments, where queries are frequently shared and updated.

This combination of simplicity and structure underscores SQL’s reliability in critical business intelligence (BI) scenarios.

Use Case Suitability

SQL is the backbone of mission-critical applications and traditional BI tasks where consistency and data integrity are non-negotiable [7]. According to Stack Overflow's 2021 survey, SQL was the most commonly used database environment and ranked as the third most popular programming language. It also appears in nearly 65% of data science job postings [8].

"SQL is foundational in all aspects of a BI implementation." [2] - Nash Bober, Data Leader and Tatted Dad

Leading companies heavily rely on SQL. For example, Walmart uses it to analyze consumer behavior and optimize product placement, while Uber leverages SQL for real-time traffic and demand analysis to adjust pricing [9].

SQL is indispensable for tasks like exploratory data analysis, feature engineering, and dashboard creation. Its ability to handle structured data makes it ideal for building dashboards, generating reports, and executing searches on massive databases [8].

"SQL is the language with which we 'communicate' with practically all relational databases...Mastering SQL will give you a competitive advantage because you won't depend on the BI tool to read the data, and you will be able to 'go straight to the source,' ensuring greater control." [2] - Marcos Eduardo, Data Analyst | Data Engineer


2. SPARQL

Unlike SQL, which relies on fixed tables, SPARQL takes a graph-based approach, allowing it to uncover deeper insights by dynamically linking data.

Data Model

SPARQL operates on a completely different data model from SQL. While SQL organizes data into structured tables, SPARQL queries data in the Resource Description Framework (RDF), which represents information as a graph [10]. In RDF, data is broken down into triples, each consisting of a subject, a predicate, and an object. These triples form a graph in which nodes represent entities and edges define the relationships between them. For example, the triple "John works_for Microsoft" links the entities "John" and "Microsoft" through the relationship "works_for."

This graph-centric model emphasizes relationships rather than just discrete data points, setting it apart from SQL's relational approach [10]. Another key difference is RDF's flexibility. SQL relies on a rigid schema with predefined tables, primary keys, and foreign keys. RDF, by contrast, allows nodes, edges, and properties to be added or updated without restructuring the schema [10]. RDF's web-native design also uses standardized web identifiers (IRIs) for entities, attributes, and relationships, which simplifies integrating data from different sources [1]. This flexibility makes SPARQL particularly effective for navigating and analyzing complex relationships.
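As a rough illustration, and not a real RDF library, the triples from the example above can be modeled in Python as plain (subject, predicate, object) tuples. The IRIs under `http://example.org/` are made up. The sketch shows why adding a new kind of fact needs no schema change: it is just one more triple. Literals are stored as ordinary strings here for simplicity.

```python
EX = "http://example.org/"  # hypothetical namespace

# Each fact is a (subject, predicate, object) triple; the set of triples is the graph.
graph: set[tuple[str, str, str]] = {
    (EX + "John", EX + "works_for", EX + "Microsoft"),
    (EX + "Microsoft", EX + "headquartered_in", EX + "Redmond"),
}

# Schema flexibility: a brand-new predicate is just another triple.
# Nothing like ALTER TABLE or a migration is needed first.
graph.add((EX + "John", EX + "email", "john@example.org"))

def objects(subject: str, predicate: str) -> set[str]:
    """Return every object linked to `subject` by `predicate` (its outgoing edges)."""
    return {o for s, p, o in graph if s == subject and p == predicate}

print(objects(EX + "John", EX + "works_for"))
```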

Query Patterns

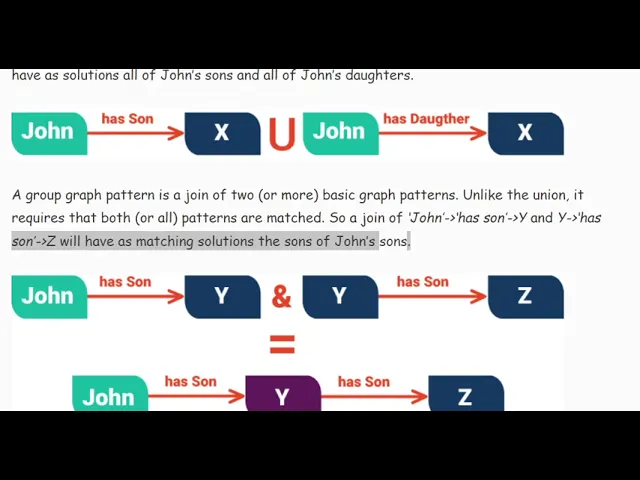

One of SPARQL's standout features is its ability to analyze complex relationships through graph pattern matching [3]. Instead of relying on table joins like SQL, SPARQL queries traverse interconnected triples, making it ideal for exploring semantic data [11]. SPARQL queries are built using triple patterns, where each element (subject, predicate, or object) can be a variable. These patterns are then matched against the RDF graph [3]. Simple patterns can be combined to form more intricate queries, enabling users to explore relationships that might require multiple, complicated SQL joins [3].
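The toy matcher below is a simplified sketch of how a basic graph pattern could be evaluated. Each pattern is a triple whose terms may be variables (prefixed with `?`), and variables shared between patterns behave like join keys. It illustrates the idea only; production SPARQL engines use indexes and query planning. The sample data is hypothetical.

```python
from typing import Iterator, Optional

Triple = tuple[str, str, str]
Binding = dict[str, str]
EX = "http://example.org/"  # hypothetical namespace

def is_var(term: str) -> bool:
    """Variables are written with a leading '?', as in SPARQL."""
    return term.startswith("?")

def match_triple(pattern: Triple, triple: Triple, binding: Binding) -> Optional[Binding]:
    """Unify one triple pattern with one triple, extending a copy of `binding`."""
    result = dict(binding)
    for p_term, t_term in zip(pattern, triple):
        if is_var(p_term):
            if p_term in result and result[p_term] != t_term:
                return None  # variable already bound to a different value
            result[p_term] = t_term
        elif p_term != t_term:
            return None  # constant term does not match
    return result

def match_bgp(patterns: list[Triple], graph: set[Triple],
              binding: Optional[Binding] = None) -> Iterator[Binding]:
    """Yield every binding that satisfies all patterns (a basic graph pattern)."""
    binding = {} if binding is None else binding
    if not patterns:
        yield binding
        return
    first, rest = patterns[0], patterns[1:]
    for triple in graph:
        extended = match_triple(first, triple, binding)
        if extended is not None:
            # Variables bound here now constrain later patterns, like a join key.
            yield from match_bgp(rest, graph, extended)

graph: set[Triple] = {
    (EX + "John", EX + "works_for", EX + "Microsoft"),
    (EX + "Jane", EX + "works_for", EX + "Acme"),
    (EX + "Microsoft", EX + "located_in", EX + "Redmond"),
    (EX + "Acme", EX + "located_in", EX + "Springfield"),
}

# Roughly: SELECT ?person ?city WHERE { ?person ex:works_for ?org . ?org ex:located_in ?city }
query = [("?person", EX + "works_for", "?org"), ("?org", EX + "located_in", "?city")]
results = sorted((b["?person"], b["?city"]) for b in match_bgp(query, graph))
print(results)
```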

SPARQL also supports federated querying (through the SERVICE keyword in SPARQL 1.1), which allows a single query to draw on multiple data sources at once. This can reduce the need for complex data migration and makes it easier for businesses to integrate information from various systems. The capability is especially useful for organizations aiming to strengthen knowledge-driven decision-making.
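In SPARQL 1.1, federation is expressed with the SERVICE keyword, which sends part of a query to a remote endpoint. The sketch below only simulates that idea locally. Two hypothetical in-memory graphs stand in for separate endpoints, each answers one triple pattern, and the partial results are joined on their shared variable. The endpoint URLs in the comment are placeholders.

```python
Triple = tuple[str, str, str]
Binding = dict[str, str]
EX = "http://example.org/"  # hypothetical namespace

# Two independent, hypothetical sources (for example an HR system and a facilities system).
HR_GRAPH: set[Triple] = {
    (EX + "John", EX + "works_for", EX + "Microsoft"),
    (EX + "Jane", EX + "works_for", EX + "Acme"),
}
FACILITIES_GRAPH: set[Triple] = {
    (EX + "Microsoft", EX + "located_in", EX + "Redmond"),
    (EX + "Acme", EX + "located_in", EX + "Springfield"),
}

def evaluate_at(endpoint: set[Triple], pattern: Triple) -> list[Binding]:
    """Stand-in for a remote endpoint: answer a single triple pattern locally."""
    answers: list[Binding] = []
    for triple in endpoint:
        binding: Binding = {}
        for p_term, t_term in zip(pattern, triple):
            if p_term.startswith("?"):
                if binding.setdefault(p_term, t_term) != t_term:
                    break  # same variable bound twice to different values
            elif p_term != t_term:
                break  # constant term does not match
        else:
            answers.append(binding)  # every term matched
    return answers

def join(left: list[Binding], right: list[Binding]) -> list[Binding]:
    """Combine bindings that agree on every shared variable (a natural join)."""
    return [
        {**lb, **rb}
        for lb in left
        for rb in right
        if all(lb[v] == rb[v] for v in lb.keys() & rb.keys())
    ]

# Roughly what a SERVICE-based query asks for:
#   SELECT ?person ?city WHERE {
#     SERVICE <http://hr.example.org/sparql>         { ?person ex:works_for ?org }
#     SERVICE <http://facilities.example.org/sparql> { ?org ex:located_in ?city }
#   }
hr_rows = evaluate_at(HR_GRAPH, ("?person", EX + "works_for", "?org"))
fac_rows = evaluate_at(FACILITIES_GRAPH, ("?org", EX + "located_in", "?city"))
combined = sorted((b["?person"], b["?city"]) for b in join(hr_rows, fac_rows))
print(combined)
```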

Syntax and Structure

SPARQL's syntax borrows familiar elements from SQL, such as keywords like SELECT, FROM, WHERE, UNION, and GROUP BY, as well as common aggregate functions [1]. However, its structure reflects its graph-based design. A basic SPARQL query looks like this:

SELECT <variable list> WHERE { <graph pattern> } [1]

This contrasts with SQL's format:

SELECT <attribute list> FROM <table list> WHERE <test expression> [1]

Here’s an example that highlights the difference:

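For instance, take a hypothetical dataset of employees and the companies they work for. The same question ("who works for a company located in Redmond?") might be written as follows in each language. The SQL is executed against an in-memory SQLite database. The SPARQL text is included only for side-by-side comparison and is not run, since that would require an RDF store. The `ex:` prefix and property names are assumptions.

```python
import sqlite3

# Hypothetical relational data: employees reference companies through a foreign key.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE company (id INTEGER PRIMARY KEY, name TEXT, city TEXT);
CREATE TABLE employee (id INTEGER PRIMARY KEY, name TEXT,
                       company_id INTEGER REFERENCES company(id));
INSERT INTO company VALUES (1, 'Microsoft', 'Redmond'), (2, 'Acme', 'Springfield');
INSERT INTO employee VALUES (1, 'John', 1), (2, 'Jane', 2);
""")

# SQL: the relationship is reconstructed at query time by joining on the foreign key.
SQL_QUERY = """
SELECT e.name, c.name AS company
FROM employee AS e
JOIN company AS c ON e.company_id = c.id
WHERE c.city = 'Redmond'
"""

# SPARQL: the same question as a graph pattern; ex:worksFor is an explicit edge.
# Shown for comparison only; running it needs an RDF store (not part of this sketch).
SPARQL_QUERY = """
PREFIX ex: <http://example.org/>
SELECT ?name ?company
WHERE {
  ?e ex:name     ?name ;
     ex:worksFor ?c .
  ?c ex:name     ?company ;
     ex:city     "Redmond" .
}
"""

rows = conn.execute(SQL_QUERY).fetchall()
print(rows)  # [('John', 'Microsoft')]
```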

SPARQL uses keywords such as OPTIONAL (in place of LEFT OUTER JOIN) to handle missing data, and FILTER clauses to impose constraints. Inside FILTER expressions, the logical operators && and || replace SQL's AND and OR [1][13]. While SQL represents relationships implicitly through foreign keys, SPARQL represents these connections explicitly in its graph structure [13].
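Here is a hedged illustration of those correspondences, using invented tables and properties. The SQL query (run with `sqlite3`) keeps employees without a phone number via LEFT OUTER JOIN and combines conditions with AND and OR. The SPARQL string shows how the same intent might be expressed with OPTIONAL and a FILTER using && and ||. Only the SQL is executed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employee (id INTEGER PRIMARY KEY, name TEXT, age INTEGER);
CREATE TABLE phone (employee_id INTEGER REFERENCES employee(id), number TEXT);
INSERT INTO employee VALUES (1, 'John', 34), (2, 'Jane', 41), (3, 'Ravi', 19);
INSERT INTO phone VALUES (1, '555-0100');
""")

# SQL: LEFT OUTER JOIN keeps employees with no phone; AND / OR combine conditions.
SQL_QUERY = """
SELECT e.name, p.number
FROM employee AS e
LEFT OUTER JOIN phone AS p ON p.employee_id = e.id
WHERE e.age >= 21 AND (e.name = 'John' OR e.name = 'Jane')
ORDER BY e.name
"""

# SPARQL counterpart (not executed here): OPTIONAL plays the LEFT OUTER JOIN role,
# and FILTER uses && / || instead of AND / OR.
SPARQL_QUERY = """
PREFIX ex: <http://example.org/>
SELECT ?name ?number
WHERE {
  ?e ex:name ?name ;
     ex:age  ?age .
  OPTIONAL { ?e ex:phone ?number }
  FILTER (?age >= 21 && (?name = "John" || ?name = "Jane"))
}
ORDER BY ?name
"""

rows = conn.execute(SQL_QUERY).fetchall()
print(rows)  # [('Jane', None), ('John', '555-0100')]
```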

Use Case Suitability

Thanks to its graph-based model and flexible syntax, SPARQL shines in scenarios where understanding relationships and uncovering insights are crucial. It is particularly effective in environments where the data structure isn't fully defined in advance [12]. SPARQL is widely used in semantic web applications and knowledge graphs, where exploring connections between entities is more important than processing large volumes of structured transactions [12].

Its ability to use web identifiers and perform federated queries makes it a powerful tool for integrating data from multiple sources. This is especially beneficial for businesses looking to enhance their decision-making by combining insights from different departments or systems.

SPARQL is also well-suited for exploratory data analysis in fields like life sciences, government, and enterprise knowledge management. These domains often deal with intricate and evolving relationships, and SPARQL's flexibility allows analysts to uncover unexpected patterns and connections. This capability is particularly valuable for AI-driven business intelligence platforms that require a deeper understanding of data relationships and context.

Advantages and Limitations

When it comes to SQL and SPARQL, each has strengths that shine in specific business intelligence (BI) tasks. But their limitations also become clear when applied outside their ideal scenarios.

SQL: Strengths and Weaknesses

SQL's biggest advantage lies in its widespread adoption. It's the backbone of modern cloud data warehouses like BigQuery, Snowflake, and Amazon Redshift, making it the go-to for scalable data processing. SQL is highly effective for querying, transforming, and aggregating structured data in traditional data warehousing environments. For example, IBM's Big SQL demonstrates this strength by running all 99 TPC-DS queries at the 100 TB scale three times faster than Spark SQL while using fewer resources, a clear productivity boost [15].

SPARQL: Where It Stands Out

SPARQL, on the other hand, shines when flexibility and data integration are priorities. Its schema-flexible design allows users to focus on what they want to know, not how the data is organized. This makes SPARQL especially suited for exploring diverse data sources [12]. Another major advantage? SPARQL simplifies merging results from multiple sources without the need for complex ETL processes, reducing costs and time. The RDF model, which uses web identifiers, also enables seamless data exchange across platforms, bypassing the mapping headaches often associated with SQL [1]. These features make SPARQL a powerful tool for knowledge-driven BI.

Limitations You Should Know

SPARQL does come with challenges. Its steep learning curve can be a hurdle, as writing SPARQL queries requires a deep understanding of RDF data structures. These structures are more complex and technical compared to SQL's simpler schemas, making SPARQL less intuitive for professionals unfamiliar with graph-based data representation [16]. Performance is another consideration. While SQL has decades of optimization behind it for relational data, SPARQL can struggle with very large datasets, especially when handling complex graph traversals [14].

| Aspect | SQL | SPARQL |
|---|---|---|
| Data Structure | Fixed schema, relational tables | Schema-flexible, RDF graphs |
| Query Federation | Limited standard support | Built-in federated querying |
| Learning Curve | Familiar and widely known | Steep, requires RDF knowledge |
| Performance | Optimized for large structured data | May face issues with large graphs |
| Relationship Handling | Implicit through foreign keys | Explicit graph relationships |

Choosing the Right Tool for the Job

Ultimately, the choice between SQL and SPARQL depends on your BI needs. SQL is the better option for structured data analysis, traditional reporting, and scenarios requiring high performance with large datasets. Its established ecosystem and familiar syntax make it ideal for teams working with relational data.

SPARQL, however, is the tool of choice when your BI strategy involves querying semantic data, analyzing complex relationships, or integrating data from multiple sources. For organizations building knowledge graphs or working with linked data, SPARQL's capabilities are indispensable for uncovering insights that SQL struggles to provide.

As BI platforms evolve, many now support both SQL and SPARQL, allowing businesses to harness the strengths of each depending on the task at hand. This dual approach ensures flexibility and efficiency in tackling a wide range of analytical challenges.

Conclusion

Bringing together the strengths of SQL and SPARQL opens up exciting opportunities for knowledge-driven business intelligence (BI). As Sir Tim Berners-Lee has pointed out, SPARQL does for diverse web data sources what SQL does for relational databases [3]. By integrating both, organizations can combine efficient structured data processing with complex relationship analysis.

SQL knowledge graphs act as a virtual semantic layer over existing databases. This allows users to query data with simplified SQL statements that draw on predefined relationships, without requiring an in-depth understanding of the database's structure [19]. Integration platforms further enhance this by connecting the flexible schema of graph data models to the structured relational model needed by BI tools. This means businesses can use familiar tools like Tableau and Power BI with JDBC or ODBC connectors, avoiding the need for extensive retraining of analysts and data scientists [17][18].
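Commercial SQL knowledge graph products each work in their own way. As a rough analogy only, a plain SQL view can play a similar role: it predefines relationships so that analysts can query business terms without writing joins. The schema and the view name `customer_purchases` below are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE product  (product_id INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE orders   (order_id INTEGER PRIMARY KEY, customer_id INTEGER,
                       product_id INTEGER, amount REAL);
INSERT INTO customer VALUES (1, 'Acme', 'West'), (2, 'Globex', 'East');
INSERT INTO product  VALUES (10, 'Hardware'), (11, 'Software');
INSERT INTO orders   VALUES (100, 1, 10, 50.0), (101, 1, 11, 70.0), (102, 2, 11, 30.0);

-- The 'semantic layer': relationships are defined once, by the data team.
CREATE VIEW customer_purchases AS
SELECT c.name AS customer, c.region, p.category, o.amount
FROM orders AS o
JOIN customer AS c ON c.customer_id = o.customer_id
JOIN product  AS p ON p.product_id  = o.product_id;
""")

# Analysts, or a BI tool connected over JDBC/ODBC, query business terms without joins.
rows = conn.execute("""
    SELECT category, SUM(amount) AS revenue
    FROM customer_purchases
    GROUP BY category
    ORDER BY category
""").fetchall()
print(rows)  # [('Hardware', 50.0), ('Software', 100.0)]
```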

The potential payoff is significant: organizations using SPARQL effectively have reported up to a 45% improvement in data retrieval speed and a 35% boost in query performance when working with linked data [20].

A practical way to get started is with a hybrid approach. Use SQL knowledge graphs as semantic layers for existing SQL-queryable data, while applying SPARQL for more advanced tasks like federated querying and analyzing complex relationships across multiple sources [19]. A "pay-as-you-go" strategy can be particularly effective - start small with a focused set of business questions, then gradually expand the ontology and mappings as needed [21]. This step-by-step approach taps into SPARQL's flexibility and its ability to integrate diverse data sources.
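To make the pay-as-you-go idea concrete, here is a small sketch that maps relational rows into RDF-style triples. It is loosely in the spirit of the W3C Direct Mapping and R2RML recommendations. The `MAPPING` dictionary, the IRI scheme, and the table names are all assumptions for illustration; a real deployment would use a dedicated mapping language and tooling.

```python
import sqlite3

EX = "http://example.org/"  # hypothetical namespace

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE company  (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE employee (id INTEGER PRIMARY KEY, name TEXT, company_id INTEGER);
INSERT INTO company  VALUES (1, 'Microsoft');
INSERT INTO employee VALUES (7, 'John', 1);
""")

# Deliberately small, hypothetical mapping: start with the columns that answer today's
# business question and extend it later. Shape: column -> (predicate IRI, FK target table).
MAPPING = {
    "employee": {"name": (EX + "name", None), "company_id": (EX + "worksFor", "company")},
    "company": {"name": (EX + "name", None)},
}

def row_iri(table: str, key: int) -> str:
    """Mint a stable IRI for a row from its table name and primary key."""
    return f"{EX}{table}/{key}"

def to_triples(db: sqlite3.Connection) -> set[tuple[str, str, str]]:
    """Apply MAPPING to every mapped table and return the resulting triples."""
    triples: set[tuple[str, str, str]] = set()
    for table, columns in MAPPING.items():
        # Table and column names come from the trusted MAPPING, not from user input.
        column_list = ", ".join(columns)
        for key, *values in db.execute(f"SELECT id, {column_list} FROM {table}"):
            subject = row_iri(table, key)
            for (predicate, target), value in zip(columns.values(), values):
                if value is None:
                    continue  # RDF has no NULL: a missing value simply yields no triple
                # Foreign keys become links to other resources; other columns become literals.
                obj = row_iri(target, value) if target else str(value)
                triples.add((subject, predicate, obj))
    return triples

triples = to_triples(conn)
for t in sorted(triples):
    print(t)
```

Expanding the ontology later means adding entries to the mapping, without changing the source tables.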

The future of BI lies in leveraging the strengths of both languages. SQL excels at structured data processing, while SPARQL shines in handling intricate relationships and data integration. As Tzvi Weitzner aptly puts it:

"The SQL Knowledge Graph platform bridges the gap between the 'old' relational world and the 'new' connected world of knowledge representation. For the first time, organizations can enable, visualize and query their relational databases as knowledge graphs" [17].

With 67% of companies that use linked data effectively reporting better decision-making capabilities [20], adopting this integrated approach is a smart move for staying competitive in today's data-driven economy.

FAQs

What makes SPARQL better than SQL for analyzing complex data relationships?

SPARQL stands out for its ability to manage complex relationships in data through advanced pattern matching and querying capabilities that span multiple, distributed sources. While SQL works well with structured relational databases, SPARQL goes a step further by querying semantic data, making it particularly suited for analyzing interconnected datasets.

This capability makes SPARQL a go-to option for tackling intricate queries, especially when working with graph-based data formats or systems spread across different platforms. It’s an essential tool for businesses looking to extract meaningful insights and perform more dynamic and flexible data analysis.

How can businesses combine SQL and SPARQL to improve their business intelligence efforts?

Businesses can leverage the strengths of SQL and SPARQL to build a robust, knowledge-driven business intelligence framework. SQL shines when it comes to managing structured, relational data, while SPARQL is tailored for querying semantic data like linked data and ontologies. Together, they offer a comprehensive way to analyze diverse datasets.

A hybrid approach - using tools like middleware or data virtualization layers - allows seamless querying across both relational and semantic data sources. This integration opens the door to advanced analytics, fuels AI-based insights, and helps organizations make smarter, data-informed decisions. By combining these tools, businesses can gain a unified view of their data, creating opportunities for innovation and strategic advancement.

What are the common challenges teams face when moving from SQL to SPARQL, and how can they address them?

Transitioning from SQL to SPARQL often involves a steep learning curve because the two languages are built on fundamentally different ways of organizing and querying data. While SQL relies on relational database tables, SPARQL works with RDF graphs, a structure that can be unfamiliar to many. Tasks like writing queries to explore variable-length paths in graph data can be especially tricky for teams new to SPARQL.

To make this shift more manageable, it's crucial to invest time in training. Building a strong grasp of RDF concepts and SPARQL syntax is a great starting point. Using tools that help translate SQL queries into SPARQL can also reduce complexity during the transition. On top of that, designing semantic data models tailored to your specific query requirements will not only simplify implementation but also lead to better outcomes.
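Variable-length paths are a good example of what changes between the two languages. The sketch below assumes a hypothetical `reports_to` table and finds everyone above one employee in the management chain. In SQL this takes a recursive CTE, executed here with `sqlite3`. In SPARQL 1.1, a property path with `+` expresses "one or more hops" directly. The SPARQL string is shown for comparison and is not executed.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE reports_to (employee TEXT, manager TEXT);
INSERT INTO reports_to VALUES ('Ravi', 'Jane'), ('Jane', 'John'), ('John', 'Maria');
""")

# SQL: a recursive CTE walks the reporting chain one hop at a time.
# UNION (rather than UNION ALL) drops duplicates, which also stops cycles from looping forever.
SQL_QUERY = """
WITH RECURSIVE chain(manager) AS (
    SELECT manager FROM reports_to WHERE employee = 'Ravi'
    UNION
    SELECT r.manager FROM reports_to AS r JOIN chain AS c ON r.employee = c.manager
)
SELECT manager FROM chain ORDER BY manager
"""

# SPARQL (not executed here): the property path `+` means "one or more hops".
SPARQL_QUERY = """
PREFIX ex: <http://example.org/>
SELECT ?manager WHERE { ex:Ravi ex:reportsTo+ ?manager } ORDER BY ?manager
"""

managers = [row[0] for row in conn.execute(SQL_QUERY)]
print(managers)  # ['Jane', 'John', 'Maria']
```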